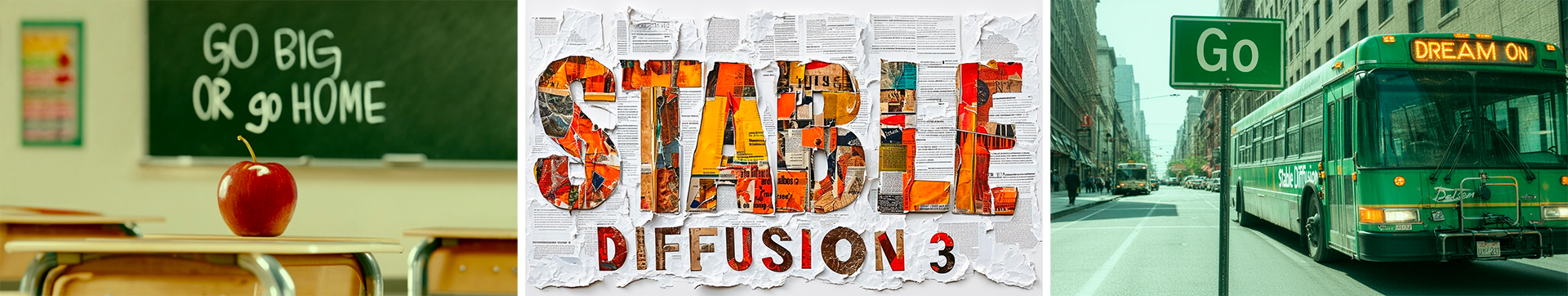

Stability AI has unveiled an early preview of Stable Diffusion 3.0, its next-generation text-to-image generative AI model that aims to deliver significant improvements in image quality, spelling abilities, and performance with multi-subject prompts.

The company has steadily advanced its image synthesis capabilities over multiple model iterations in the past year. Stable Diffusion 3.0 represents a major upgrade, incorporating a new diffusion transformer architecture and flow matching (a simulation-free approach for training models) that promise to accelerate diffusion model performance.

"Stable Diffusion 3 is a diffusion transformer, a new type of architecture similar to the one used in the recent OpenAI Sora model," said Stability AI CEO Emad Mostaque on X. "It is the real successor to the original Stable Diffusion."

Transformers have become a staple of natural language models, but diffusion transformers specifically target image generation by operating on latent image patches. Combined with flow matching, Stability AI states these methods enabled enhanced training speed, sampling efficiency, and overall output quality.
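To make the two ideas concrete, here is a minimal NumPy sketch. It is not Stability AI's implementation, since the technical report had not been published at the time of writing. The latent shape (4 x 64 x 64), the patch size of 2, and the helper names `patchify` and `flow_matching_pair` are illustrative assumptions. The linear noise-to-data path is one common flow matching variant, not necessarily the one used in Stable Diffusion 3.

```python
import numpy as np

def patchify(latent, patch):
    """Split a (C, H, W) latent into a sequence of flattened patch tokens."""
    c, h, w = latent.shape
    assert h % patch == 0 and w % patch == 0, "H and W must be divisible by patch"
    gh, gw = h // patch, w // patch
    # Break H and W into (grid, patch) pairs, then group each patch's values together.
    x = latent.reshape(c, gh, patch, gw, patch)
    x = x.transpose(1, 3, 0, 2, 4)  # (gh, gw, c, patch, patch)
    return x.reshape(gh * gw, c * patch * patch)

def flow_matching_pair(x1, rng):
    """Build one training example for a linear noise-to-data path (assumed variant).

    Returns the interpolated sample x_t, the time t, and the velocity target
    x1 - x0 that a model would be trained to regress. No ODE is simulated.
    """
    x0 = rng.standard_normal(x1.shape)   # noise endpoint
    t = rng.uniform()                    # time in [0, 1)
    xt = (1.0 - t) * x0 + t * x1         # point on the straight line from x0 to x1
    target = x1 - x0                     # constant velocity along that line
    return xt, t, target

rng = np.random.default_rng(0)
latent = rng.standard_normal((4, 64, 64))   # hypothetical latent image
tokens = patchify(latent, patch=2)          # sequence a transformer would consume
xt, t, target = flow_matching_pair(tokens, rng)
print(tokens.shape, xt.shape)
```

In a real model, a transformer would take `xt` and `t` (plus text conditioning) and be trained to predict `target`. The sketch only builds the inputs and the regression target.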

Accurate text rendering has historically been a challenge for image synthesis models, although DALL-E 3 handles it reasonably well. Mostaque highlighted the new model's spelling improvements, which stem from its transformer architecture and additional text encoders. "Full sentences are now possible as is coherent style," he said.

The company says the Stable Diffusion 3 family of models ranges from 800M to 8B parameters, giving users a spectrum of quality and scalability options, and promises that a detailed technical report will follow soon.

While presented as a text-to-image model, Mostaque suggested Stable Diffusion 3.0 will serve as the foundation for Stability AI's upcoming video, 3D, and multi-modal generative AI systems. "We make open models that can be used anywhere and adapted to any need," he stated.

Pretty much. The SD3 arch can accept more than video and image, more details soon.

We have 100x less (literally) the resources of some of the others in this field though, have to work hard. https://t.co/6udkySZWMx

Emad (@EMostaque), February 22, 2024

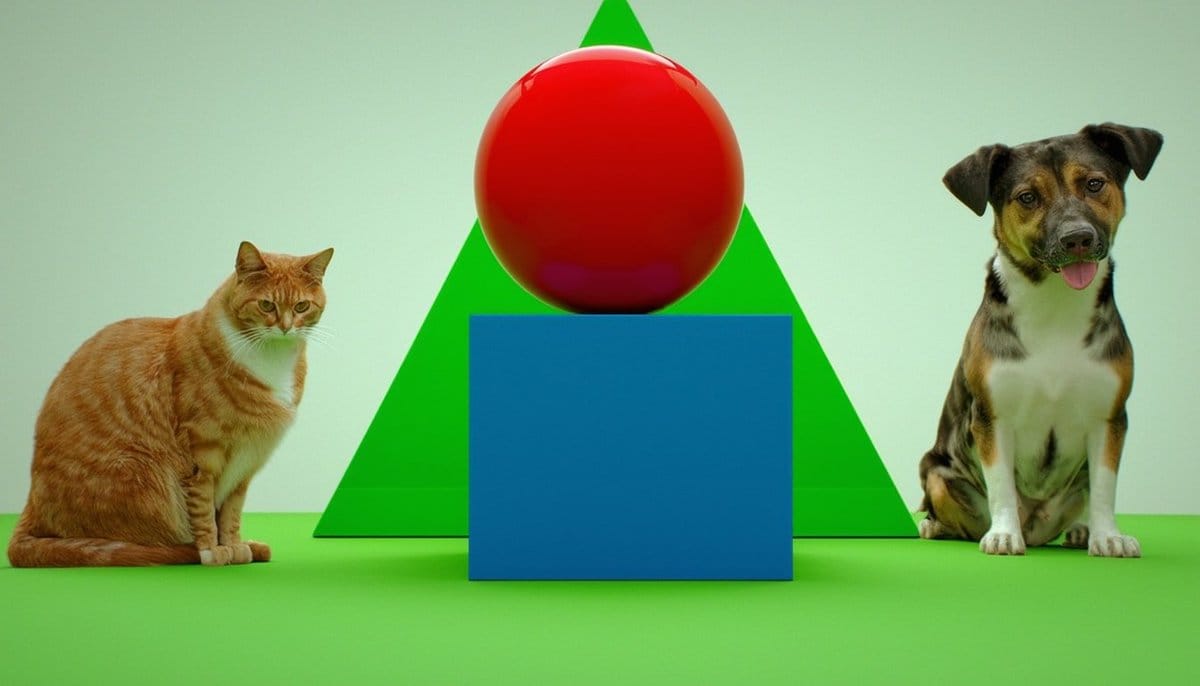

Mostaque also shared a sneak peek at upcoming editing and control capabilities that the company is working on. In the demo video, he shows the granular control users will have when modifying specific parts of an image.