Chinese tech giant Alibaba has open-sourced Tongyi Qianwen (Qwen), its 7-billion-parameter generative AI model. The release positions Qwen as a direct competitor to Meta's similarly sized LLaMA model, setting up a showdown between the two tech titans.

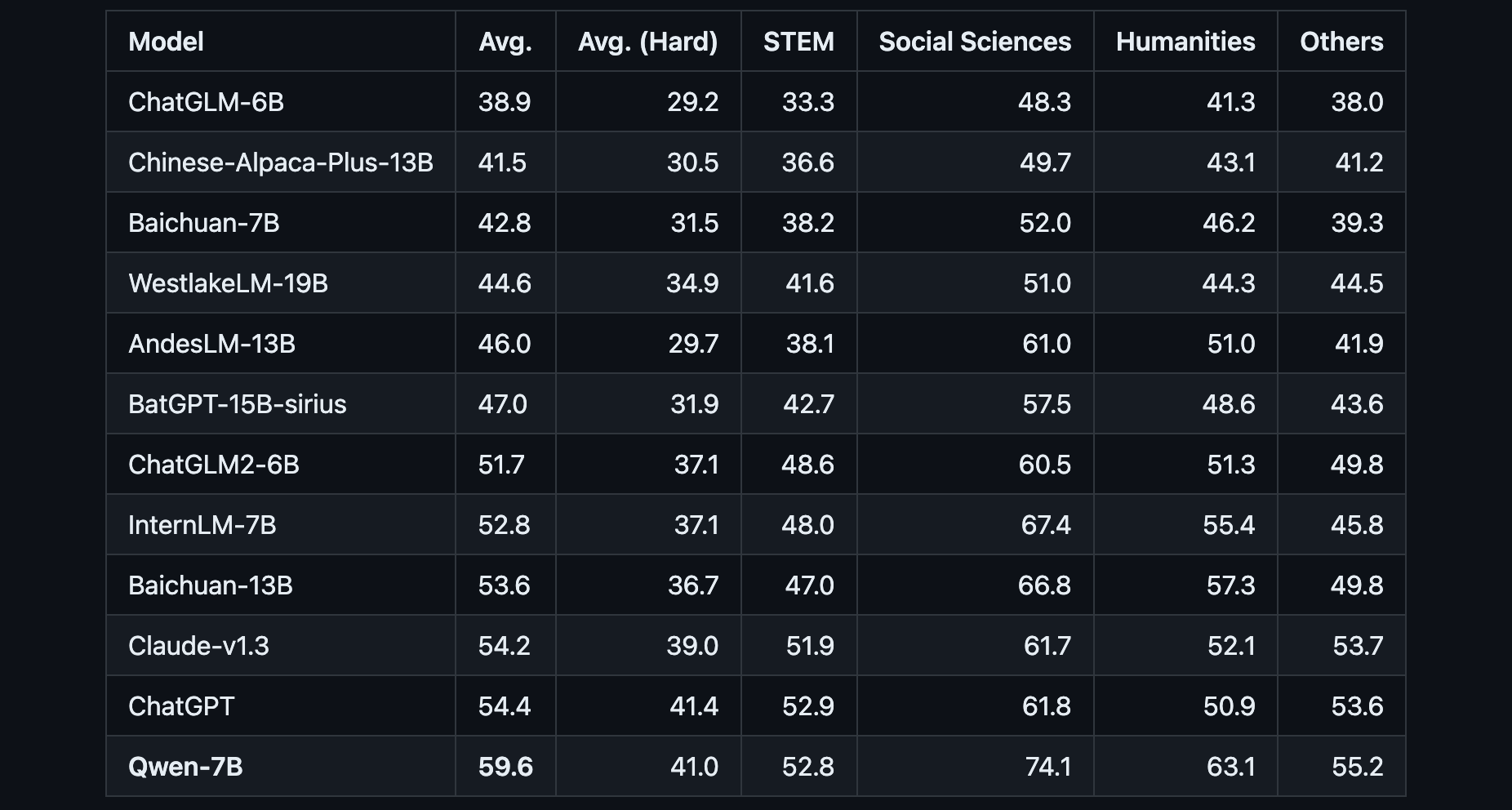

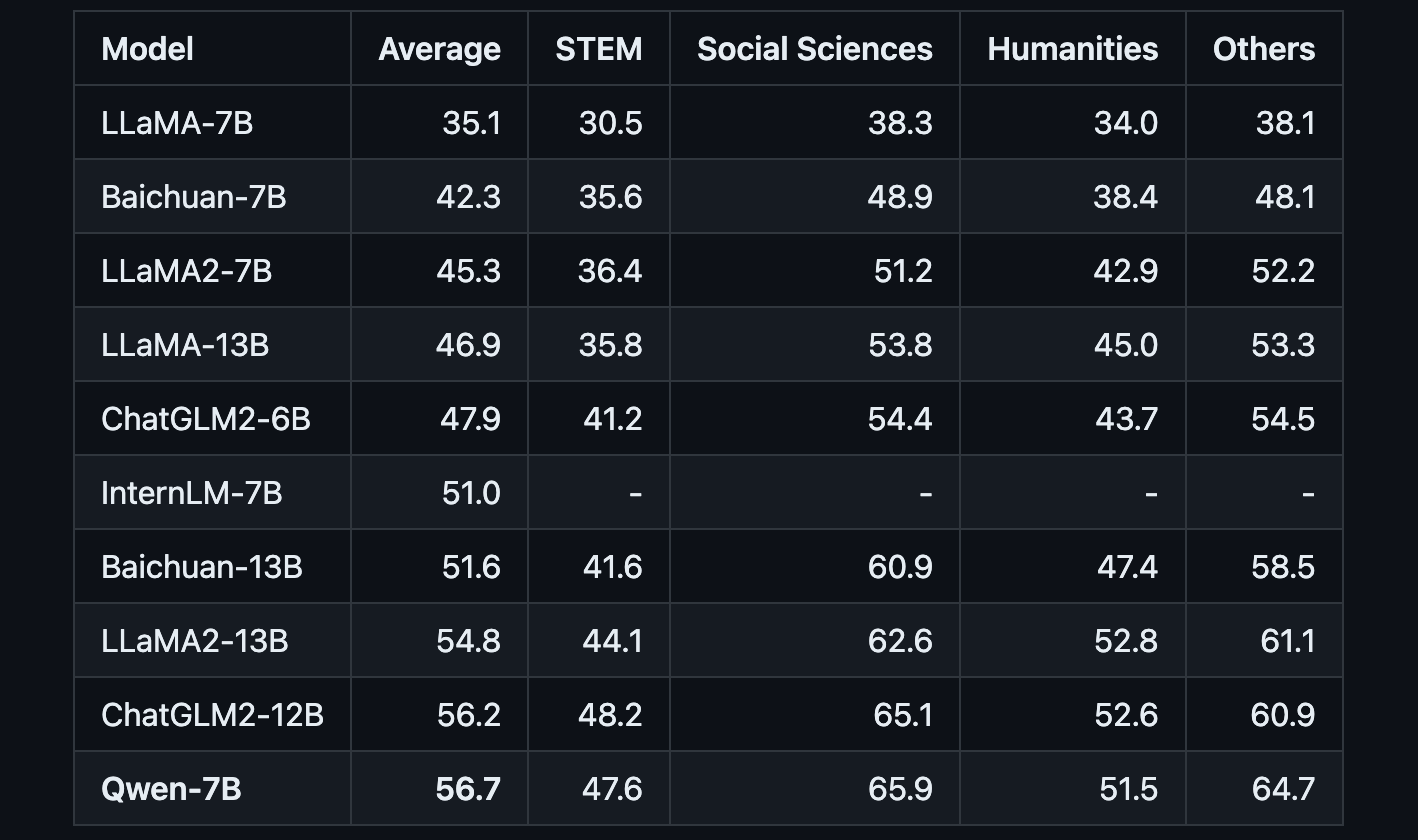

Qwen is a transformer-based language model pre-trained on over 2.2 trillion text tokens covering a diverse range of domains and languages. According to Alibaba's benchmark results, Qwen achieves state-of-the-art results among models of its size, ahead of LLaMA and other leading open models on tests of knowledge, coding, mathematical reasoning and translation.

The release includes model weights and code for the pre-trained and human-aligned 7B-parameter language models:

- Qwen-7B is the pretrained language model, and Qwen-7B-Chat is fine-tuned to align with human intent.
- Qwen-7B is pretrained on over 2.2 trillion tokens with a context length of 2048. On the series of benchmarks Alibaba tested, Qwen-7B generally performs better than existing open models of similar scale and appears to be on par with some of the larger models.
- Qwen-7B-Chat is fine-tuned on curated data, including not only task-oriented data but also specific security- and service-oriented data, which seems to be lacking in existing open models.
- Example code for fine-tuning, evaluation, and inference is included. There are also guides on long-context and tool use in inference.
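Because the model is pretrained with a 2048-token context, a chat application has to keep the prompt within that budget. The following is a minimal sketch of one way to do that by dropping the oldest turns first. It is not code from the Qwen release: `count_tokens`, `trim_history`, and the `reserve_for_reply` value are hypothetical, and the whitespace split is only a stand-in for the model's real tokenizer, which will produce different counts.

```python
MAX_CONTEXT_TOKENS = 2048  # context length stated for Qwen-7B


def count_tokens(text):
    """Rough stand-in for a real tokenizer (assumption: whitespace split)."""
    return len(text.split())


def trim_history(history, new_query, max_tokens=MAX_CONTEXT_TOKENS,
                 reserve_for_reply=256):
    """Keep the most recent (user, assistant) turns that fit the budget.

    `reserve_for_reply` is an illustrative allowance for the generated answer.
    """
    budget = max_tokens - reserve_for_reply - count_tokens(new_query)
    kept = []
    used = 0
    # Walk backwards so the newest turns are kept preferentially.
    for user_msg, assistant_msg in reversed(history):
        cost = count_tokens(user_msg) + count_tokens(assistant_msg)
        if used + cost > budget:
            break
        kept.append((user_msg, assistant_msg))
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = [
        ("a " * 1000, "b " * 1000),  # an old, very long turn
        ("hi there", "hello"),       # a recent, short turn
    ]
    print(trim_history(history, "how are you"))
```

In practice the count would come from the tokenizer shipped with the model, and the reply allowance would be tuned to the application.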

To showcase Qwen's versatility, Alibaba tested the model on standardized benchmarks: C-Eval for Chinese knowledge, MMLU for English comprehension, HumanEval for coding, GSM8K for mathematical reasoning, and WMT for translation. On all of these tests, Qwen outperformed or matched larger 13-billion-parameter models, demonstrating the power packed into its 7 billion parameters.

Beyond the base Qwen model, Alibaba has also released Qwen-7B-Chat, a version fine-tuned for dialog applications and aligned with human intent and instructions. This chat-capable version also supports calling plugins, tools and APIs through ReAct prompting, in which the model alternates between reasoning steps and tool calls. This gives Qwen an edge in tasks like conversational agents and AI assistants, where integration with external functions is invaluable.
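To make the ReAct idea concrete, here is a minimal sketch of the two pieces an application typically needs: a prompt that describes the available tools, and a parser that extracts the tool call from the model's reply. The template follows a commonly used ReAct layout; whether it matches the exact wording Qwen-7B-Chat expects is an assumption, so the guide in the release should be treated as the reference. The `TOOLS` registry and the helper names `build_react_prompt` and `parse_react_step` are hypothetical.

```python
import re

# Hypothetical tool registry; names and descriptions are illustrative only.
TOOLS = {
    "calculator": "Evaluates a basic arithmetic expression, e.g. '2 + 3 * 4'.",
}

REACT_TEMPLATE = """Answer the following question as best you can. You have access to the following tools:

{tool_descriptions}

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat zero or more times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin!

Question: {question}
"""

ACTION_RE = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+?)\s*(?:\n|$)")
FINAL_RE = re.compile(r"Final Answer:\s*(.+)", re.DOTALL)


def build_react_prompt(question, tools=TOOLS):
    """Fill the template with the tool list and the user's question."""
    descriptions = "\n".join(f"{name}: {desc}" for name, desc in tools.items())
    return REACT_TEMPLATE.format(
        tool_descriptions=descriptions,
        tool_names=", ".join(tools),
        question=question,
    )


def parse_react_step(text):
    """Classify a model reply as a tool call, a final answer, or unknown."""
    action = ACTION_RE.search(text)
    if action:
        return {"type": "action", "tool": action.group(1), "input": action.group(2)}
    final = FINAL_RE.search(text)
    if final:
        return {"type": "final", "answer": final.group(1).strip()}
    return {"type": "unknown"}


if __name__ == "__main__":
    print(build_react_prompt("What is 2 + 3 * 4?"))
    reply = "Thought: I need to compute.\nAction: calculator\nAction Input: 2 + 3 * 4\n"
    print(parse_react_step(reply))
```

In a full loop, the application would run the named tool, append the result to the prompt as an `Observation:` line, and call the model again until it produces a `Final Answer:`.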

The launch of Qwen highlights the intensifying competition between tech giants like Alibaba, Meta, Google and Microsoft as they race to develop more capable generative AI models.

By open-sourcing Qwen, Alibaba not only matches Meta's LLaMA but also leapfrogs the capabilities of its own previous model releases. Its strong performance across a range of NLP tasks positions Qwen as a true general-purpose model that developers could adopt instead of LLaMA for building next-generation AI applications.