Amazon recently announced a series of upgrades to Amazon Lex, its conversational AI service. The upgrades draw on recent advances in generative AI to support more natural, dynamic conversations, and Amazon says they will substantially improve self-service experiences across a variety of use cases.

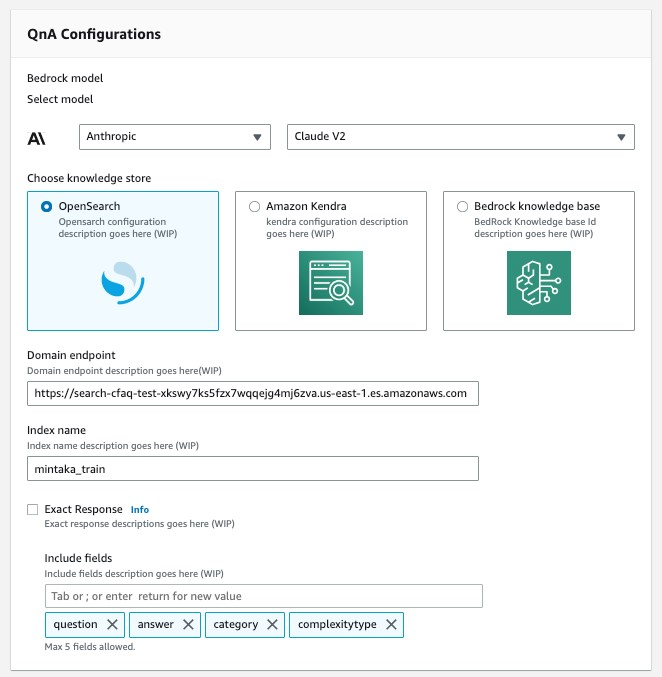

Most notably, Lex now includes built-in support for conversational FAQ experiences. By connecting Lex to authorized knowledge repositories and large language models (LLMs), developers can enable chatbots to understand and answer many common questions in a more fluent, human-like way. Rather than relying solely on rigid, predefined responses, Lex bots can now compose tailored answers on the fly using a Retrieval-Augmented Generation (RAG) approach: the bot first retrieves passages relevant to the user's question from the connected knowledge sources, then passes them to an LLM as context for writing the answer. This combines the breadth of curated knowledge content with the language fluency of LLMs.
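Amazon has not published how Lex implements this internally, so the following is only a generic sketch of the retrieve-then-generate pattern, not the Lex API. It assumes a tiny in-memory knowledge base (the hypothetical `APPROVED_KB`), swaps in simple keyword-overlap scoring where a production system would more likely use semantic or vector search, and takes the LLM as a caller-supplied `generate` function. Because retrieval only ever reads from the approved collection, the prompt sent to the model can only contain vetted content.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Passage:
    source: str  # identifier of the approved document the text came from
    text: str


# Hypothetical approved knowledge base; in practice this would be a curated,
# access-controlled document store.
APPROVED_KB = [
    Passage("billing-faq", "You can update your payment method in the Billing section of your account settings."),
    Passage("shipping-faq", "Standard shipping takes 3 to 5 business days within the continental US."),
    Passage("returns-faq", "Items can be returned within 30 days of delivery for a full refund."),
]

# Small stopword list so that words like "the" or "you" do not count as matches.
STOPWORDS = {"a", "an", "the", "of", "to", "in", "is", "do", "does", "you", "your",
             "can", "how", "what", "for", "be", "within"}


def tokenize(text: str) -> set[str]:
    """Lowercase, split on non-alphanumerics, and drop stopwords."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}


def retrieve(question: str, kb: list[Passage], k: int = 2) -> list[Passage]:
    """Retrieval step: return up to k passages ranked by keyword overlap."""
    q = tokenize(question)
    scored = [(len(q & tokenize(p.text)), p) for p in kb]
    hits = [(score, p) for score, p in scored if score > 0]
    hits.sort(key=lambda sp: sp[0], reverse=True)
    return [p for _, p in hits[:k]]


def build_prompt(question: str, passages: list[Passage]) -> str:
    """Augmentation step: prepend the retrieved passages as grounding context."""
    context = "\n".join(f"[{p.source}] {p.text}" for p in passages)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


def answer(question: str, kb: list[Passage], generate: Callable[[str], str]) -> Optional[str]:
    """Retrieve, augment, then generate; return None when nothing relevant is found."""
    passages = retrieve(question, kb)
    if not passages:
        return None  # e.g. fall back to the bot's regular intents or escalate
    return generate(build_prompt(question, passages))


if __name__ == "__main__":
    def echo_llm(prompt: str) -> str:
        # Stand-in for a real LLM call: echo the prompt so the grounding is visible.
        return prompt

    print(answer("How long does shipping take?", APPROVED_KB, echo_llm))
```

Returning `None` when no approved passage matches, rather than letting the model answer freely, is one way to keep responses limited to curated content.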

According to Amazon, the conversational FAQ feature lets organizations achieve higher containment rates (the share of customer inquiries resolved without handing off to a human agent) and lower escalation costs. Organizations in highly regulated industries such as healthcare and finance can point Lex only at approved knowledge bases, which keeps answers within vetted content and supports regulatory compliance.

Amazon is also introducing descriptive bot building, which lets non-technical users simply describe the goal of a conversational assistant in natural language. Lex then uses LLMs to generate the required intents, sample utterances, slots, prompts and flows, producing a baseline bot ready for further refinement. This greatly reduces what was previously a labor-intensive, manual build process.

Other upgrades aim directly at the end-user experience. With assisted slot resolution, Lex can use LLMs to resolve slot values when its natural language understanding (NLU) models fail to capture a user's more conversational response, which helps keep conversations on track to a successful outcome. In addition, training utterances for intents can be generated automatically to cover a wider range of phrasings.
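The fallback idea behind assisted slot resolution can be illustrated with a small sketch. This is an assumption about the general pattern, not Lex's actual mechanism or API: a rigid matcher handles exact slot values, and only when it fails is a caller-supplied `llm_resolve` function (a placeholder for an LLM call) consulted, with its answer checked against the slot's allowed values. `ROOM_TYPES`, `strict_match` and `resolve_slot` are hypothetical names.

```python
from typing import Callable, Optional

# Hypothetical slot type for a hotel-booking bot.
ROOM_TYPES = ["King", "Queen", "Double"]


def strict_match(utterance: str, values: list[str]) -> Optional[str]:
    """Rigid matcher: accept only an exact, case-insensitive slot value."""
    cleaned = utterance.strip().lower()
    for value in values:
        if cleaned == value.lower():
            return value
    return None


def resolve_slot(
    utterance: str,
    values: list[str],
    llm_resolve: Callable[[str, list[str]], Optional[str]],
) -> Optional[str]:
    """Try the rigid matcher first; consult the LLM only when it fails."""
    value = strict_match(utterance, values)
    if value is not None:
        return value
    candidate = llm_resolve(utterance, values)
    # Never trust free-form model output: accept it only if it is a valid value.
    return candidate if candidate in values else None
```

For example, a reply such as "the biggest bed you have, please" fails the strict matcher, so the LLM placeholder is asked to map it onto one of the allowed values; if it returns something outside the list, the function returns `None` and the bot can reprompt.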

Together, these new generative AI-enabled features represent a major step forward for Lex and conversational AI. By combining language understanding, knowledge access and language generation, Amazon promises more capable, intuitive chatbot experiences across self-service scenarios.