Anthropic just committed to what might be the largest TPU deal in history—up to one million of Google's tensor processing units coming online in 2026, worth tens of billions of dollars and delivering over a gigawatt of capacity. CFO Krishna Rao says the company's approaching a $7 billion annual revenue run rate, with large enterprise accounts (those generating $100k+ annually) growing 7x in the past year.

Key Points:

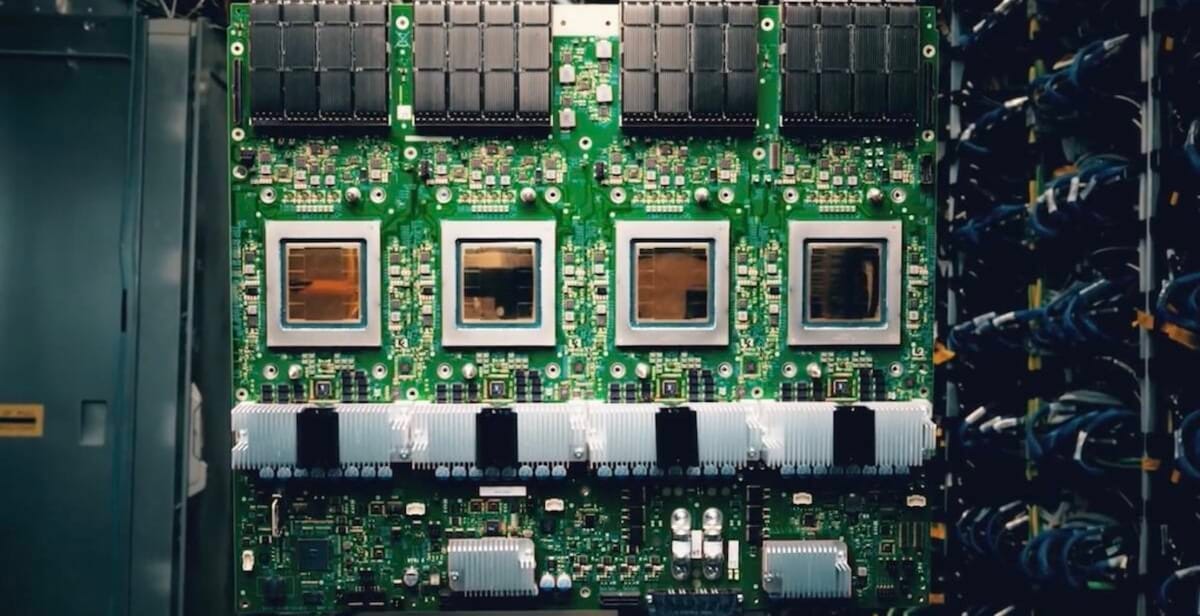

- The deal adds Google's seventh-generation "Ironwood" TPUs to Anthropic's existing mix of Amazon Trainium and Nvidia GPUs, letting the company optimize for cost and performance across workloads

- Anthropic now serves 300,000+ business customers with Claude, and enterprise accounts make up roughly 80% of revenue—a sharp contrast to OpenAI's consumer-heavy model

- Amazon remains the primary training partner through Project Rainier, a custom supercomputer using hundreds of thousands of Trainium2 chips

Anthropic's revenue is on track to hit $9 billion by year-end, with internal targets calling for $20-26 billion in 2026—growth rates that demand more compute than any single vendor can provide. Industry estimates put a 1-gigawatt data center at roughly $50 billion to build, with about $35 billion going to chips.

What's interesting is the strategy itself. Most AI labs marry themselves to one chip vendor, paying premium margins and accepting whatever capacity constraints come with that choice. Anthropic's spreading workloads across three platforms—Google TPUs for certain training runs, Amazon Trainium for the massive Project Rainier cluster, NVIDIA GPUs where needed. According to someone familiar with the company's infrastructure approach, every dollar of compute stretches further under this model than single-vendor architectures.

Google has invested about $3 billion in Anthropic total, while Amazon has committed $8 billion and is building Project Rainier, which will provide five times more computing power than Anthropic's current largest training cluster. The question isn't whether Anthropic is playing favorites—it clearly isn't—but whether this diversified approach actually delivers on its cost-efficiency promise at frontier-model scale.

Google gets a major AI customer win at a time when it's pushing TPUs to external clients after years of keeping them mostly internal. Amazon gets a guaranteed buyer for hundreds of thousands of Trainium2 chips, proving out silicon that hasn't been battle-tested for complex LLM training at scale. Anthropic gets optionality and presumably better pricing by keeping three vendors competing for its business.

Project Rainier plans to divide a single compute cluster across multiple buildings, connecting them to act as one computer—ambitious but unproven at this scale. TPUs have their own networking constraints. Multi-vendor orchestration adds complexity that could eat the theoretical cost savings.

Anthropic's betting it can make this work while OpenAI crossed $13 billion in annualized revenue in August and is on pace for over $20 billion by year-end. Anthropic's closing the gap, but both companies face the same fundamental challenge: improving models fast enough to justify infrastructure bills that now measure in gigawatts.