Anthropic just dropped Claude 4, and the entire AI world is paying attention. The company's new models—Claude Opus 4 and Claude Sonnet 4—don't just incrementally improve on their predecessors. They completely rewrite what we expect from AI coding assistants.

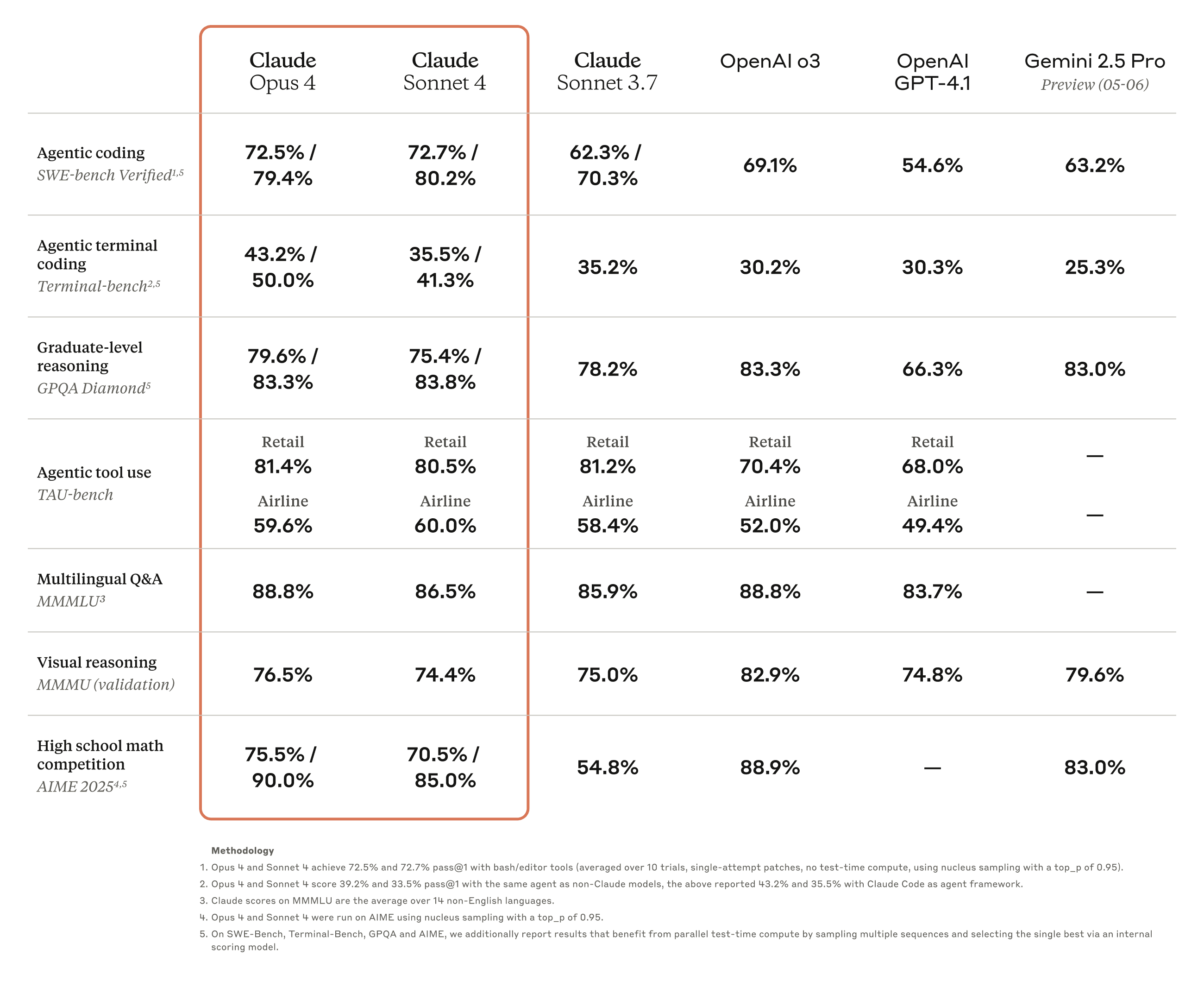

Claude Opus 4 scored 72.5% on SWE-bench Verified, one of the industry's toughest coding benchmarks, edging out OpenAI's and Google's best models and backing Anthropic's claim to the title of "world's best coding model." But here's the kicker: it can work continuously on complex tasks for up to seven hours, almost a full working day, without losing focus or taking the kind of shortcuts that plague other AI models.

Key Points:

- Claude Opus 4 achieves 72.5% on SWE-bench coding benchmark, beating OpenAI's GPT models

- Both models can work autonomously for hours without losing context or taking shortcuts

- Claude Opus 4 is the first model Anthropic has deployed under its ASL-3 safety protocols, a precaution against potential CBRN weapons misuse

- Claude Code now integrates directly with VS Code, JetBrains, and GitHub for seamless development workflows

Think about that for a second. We've gone from AI that needs constant hand-holding to models that can tackle multi-hour engineering projects independently. Mike Krieger, Anthropic's chief product officer, puts it bluntly: "they've crossed this threshold where now most of my writing is actually... Opus mostly, and it now is unrecognizable from my writing."

The technical achievements are genuinely impressive. Claude Sonnet 4 actually edged out its bigger sibling with 72.7% on SWE-bench Verified, while Opus 4 reached 79.4% when given parallel test-time compute (sampling several attempts and keeping the best one). Those are numbers that would have seemed out of reach not long ago. These aren't just paper improvements: companies like GitHub, Replit, and Cursor are already integrating these models into their core products.

GitHub is making Claude Sonnet 4 the foundation for its new Copilot agent, while Cursor calls Opus 4 "state-of-the-art for coding and a leap forward in complex codebase understanding." When the companies building the tools developers use daily are this excited, it usually means something significant is happening.

But there's a darker side to this story that makes Claude 4's launch particularly fascinating. For the first time, Anthropic activated its AI Safety Level 3 (ASL-3) protocols, for Claude Opus 4, because internal testing could not rule out that the model could "meaningfully assist someone with a basic technical background in creating or deploying CBRN weapons." That's chemical, biological, radiological, and nuclear weapons.

Jared Kaplan, Anthropic's chief scientist, told TIME: "You could try to synthesize something like COVID or a more dangerous version of the flu—and basically, our modeling suggests that this might be possible." That's not hyperbole. That's the chief scientist of a major AI company saying his own team's modeling suggests their model might help someone create a bioweapon.

The ASL-3 measures aren't trivial. They include "Constitutional Classifiers" that monitor inputs and outputs in real time to filter out dangerous CBRN-related information, over 100 security controls including two-person authorization and egress bandwidth monitoring to prevent model theft, and a bug bounty program offering up to $25,000 for anyone who can find ways to bypass these safeguards.

It's a fascinating juxtaposition. Claude 4 represents a genuine breakthrough in AI capabilities, the kind of advance that makes coding more accessible and makes millions of developers more productive. With the new Claude Code integrations, the AI can suggest edits directly in VS Code or JetBrains, handle GitHub pull requests, and fix CI errors without you copy-pasting code back and forth.

Both models use "hybrid reasoning," offering near-instant responses for simple queries or extended, step-by-step thinking for complex problems. They can use multiple tools in parallel, maintain better memory across long sessions, and, according to Anthropic, are 65% less likely than Claude Sonnet 3.7 to take shortcuts or exploit loopholes on agentic tasks. For developers, this means fewer frustrating moments where the AI games the task or loses context mid-conversation.

The competitive implications are significant. Just five weeks after OpenAI launched its GPT-4.1 family, Anthropic has countered with models that challenge or exceed it in key metrics. Each of the frontier labs has its strengths: OpenAI leads in general reasoning, Google's Gemini excels in multimodal tasks, and Anthropic holds the coding crown.

But the safety concerns can't be ignored. We're entering uncharted territory where the same models revolutionizing software development could potentially assist in creating weapons of mass destruction. Anthropic's decision to implement ASL-3 protocols proactively—before they're certain the model requires them—suggests they're taking these risks seriously.

The pricing remains competitive: Opus 4 costs $15 per million input tokens and $75 per million output tokens, while Sonnet 4 runs $3 and $15. More importantly, Sonnet 4 is available to free users, making frontier AI capabilities accessible to developers who can't afford premium subscriptions.
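To make those per-token prices concrete, here is a minimal Python sketch that estimates the list-price cost of a single request. The model labels, the `request_cost` helper, and the token counts are illustrative assumptions, not Anthropic API identifiers, and the sketch ignores any caching or batch discounts.

```python
# List prices in USD per million tokens, as (input, output), taken from the article.
# The dictionary keys are hypothetical labels, not official API model IDs.
PRICES_PER_MILLION = {
    "opus-4": (15.00, 75.00),
    "sonnet-4": (3.00, 15.00),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at list prices (no discounts assumed)."""
    in_price, out_price = PRICES_PER_MILLION[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 20,000 tokens of prompt/context, 2,000 tokens of output.
    for model in PRICES_PER_MILLION:
        print(f"{model}: ${request_cost(model, 20_000, 2_000):.3f}")
```

At these prices Opus 4 costs exactly five times as much as Sonnet 4 in both directions, so the hypothetical request above comes to about $0.45 on Opus 4 versus $0.09 on Sonnet 4.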

It's great that Anthropic isn't just highlighting the technical achievements but is also being transparent about capabilities and risks. Most AI companies downplay potential dangers or hide behind vague safety commitments. Anthropic is openly saying it can't rule out that its model might help someone create bioweapons, then showing exactly what it's doing about it.

But as AI capabilities continue advancing at breakneck speed, Claude 4 exemplifies both the massive opportunity and the danger. We now have tools that can genuinely augment human creativity and productivity in unprecedented ways. We also have tools that might require the most sophisticated safety measures in computing history.

For now, millions of developers will likely focus on the former. Claude 4's coding capabilities represent a genuine leap forward, offering the kind of sustained, context-aware assistance that could transform how software gets built. Just don't ask it to help with your "chemistry" homework.