Apple has introduced CoreNet, a new library for training deep neural networks, and OpenELM, a state-of-the-art open language model family. The models are now available on the Hugging Face hub, alongside the complete framework for training and evaluation on publicly available datasets.

CoreNet, available on GitHub, is a versatile toolkit supporting various tasks, including object detection and semantic segmentation. The OpenELM models, built using CoreNet, achieve enhanced accuracy through efficient parameter allocation within the transformer model. Apple has released both pretrained and instruction-tuned models with 270M, 450M, 1.1B, and 3B parameters.

What sets OpenELM apart is its layer-wise scaling strategy. Rather than giving every transformer layer the same configuration, this method uses smaller latent dimensions in the attention and feed-forward modules of the layers closer to the input and gradually widens them toward the output. This approach makes more efficient use of the parameter budget, resulting in improved accuracy over existing models of comparable size.
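To make the idea concrete, the following is a minimal sketch of layer-wise scaling, assuming the per-layer widths are obtained by linear interpolation between a minimum and a maximum multiplier. The function name `layerwise_scaling`, the `LayerConfig` class, and the multiplier ranges `alpha` and `beta` are hypothetical and chosen for illustration; they are not taken from the CoreNet code or from Apple's released configurations.

```python
from dataclasses import dataclass


@dataclass
class LayerConfig:
    """Per-layer sizes for one transformer block (illustrative only)."""
    num_heads: int  # number of attention heads in this layer
    ffn_dim: int    # hidden width of this layer's feed-forward module


def layerwise_scaling(num_layers, d_model, head_dim,
                      alpha=(0.5, 1.0), beta=(0.5, 4.0)):
    """Return one LayerConfig per layer, narrow near the input, wide near the output.

    alpha scales the attention width and beta scales the feed-forward width.
    Both ranges are assumed values for this sketch, not Apple's settings.
    """
    configs = []
    for i in range(num_layers):
        # Position of layer i in [0, 1]: 0 at the input side, 1 at the output side.
        t = i / (num_layers - 1) if num_layers > 1 else 0.0
        a = alpha[0] + (alpha[1] - alpha[0]) * t
        b = beta[0] + (beta[1] - beta[0]) * t
        num_heads = max(1, round(a * d_model / head_dim))
        ffn_dim = round(b * d_model)
        configs.append(LayerConfig(num_heads=num_heads, ffn_dim=ffn_dim))
    return configs


if __name__ == "__main__":
    # Print the per-layer sizes for a small, made-up model.
    for idx, cfg in enumerate(layerwise_scaling(num_layers=4, d_model=1024, head_dim=64)):
        print(idx, cfg)
```

A conventional transformer would instead use the same `num_heads` and `ffn_dim` in every layer; the sketch only shows how a fixed budget could be redistributed so that later layers receive more parameters than earlier ones.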

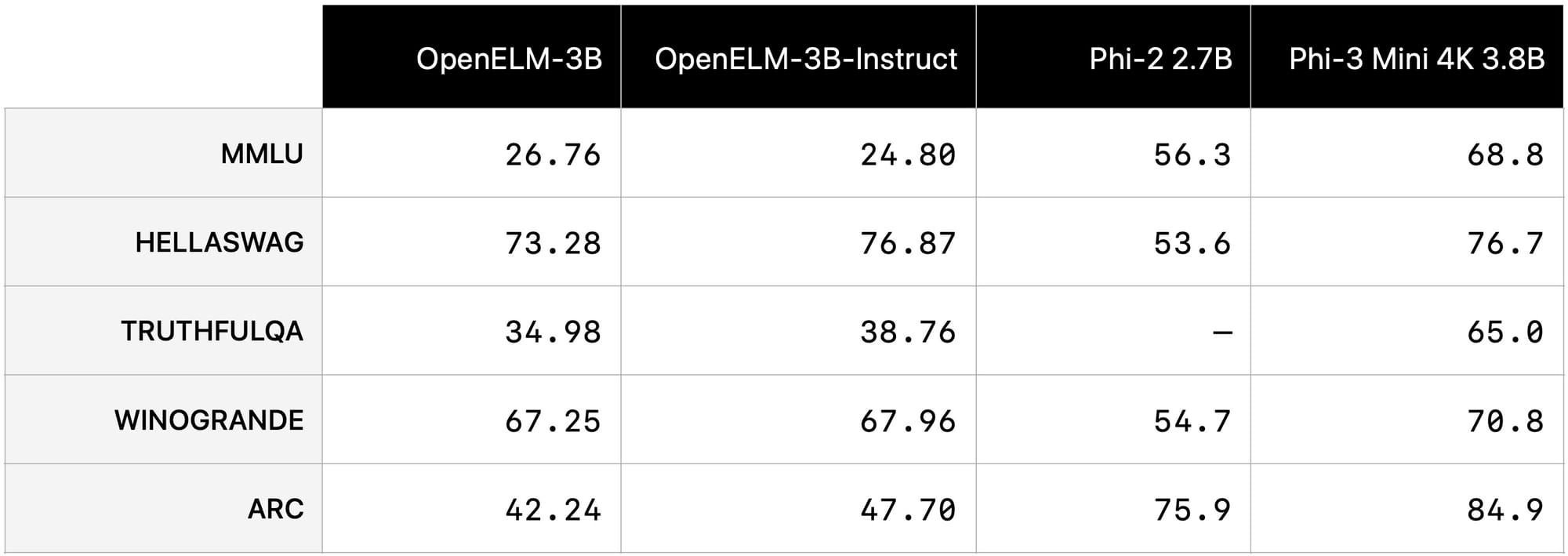

Notably, the 1.1-billion-parameter variant of OpenELM outperforms the comparable OLMo model by 2.36% in accuracy while requiring only half the pre-training tokens. However, it still falls considerably behind Microsoft's recently released Phi-3 small language models.

Apple's release of CoreNet and OpenELM is a significant contribution to the open research community and a first for the Cupertino giant. The company is open-sourcing not just the model weights and inference code, but also training logs, checkpoints, and pre-training configurations. It has also released code to convert models to the MLX library for efficient inference and fine-tuning on Apple devices.

Apple's decision to open-source OpenELM may be influenced by the growing popularity and success of open models from its peers, such as Google, Microsoft, and Meta, which have all contributed cutting-edge open models to the AI community. Apple's move reflects a need to remain competitive and relevant in an industry that increasingly values not only innovation but also accessibility and community collaboration.

Furthermore, Apple's focus on small parameter models, like OpenELM, aligns with its strategy of running AI locally on devices rather than in the cloud. This approach not only enhances user privacy but also enables efficient on-device inference, which is crucial for Apple's ecosystem of products and services.