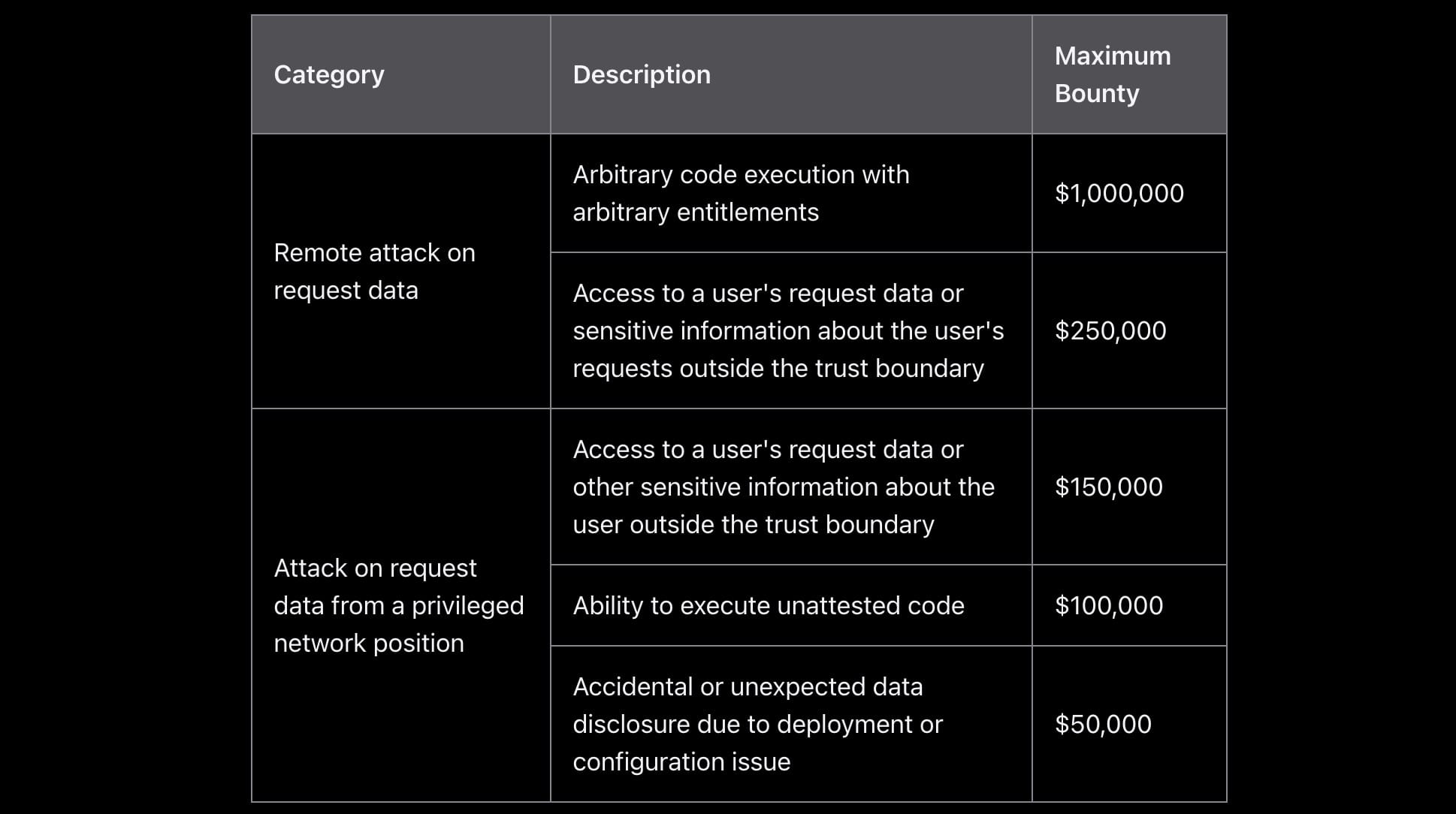

Apple is offering security researchers up to $1 million for finding vulnerabilities in its upcoming AI cloud service, one of the largest bounties yet offered for AI system security. The program launches ahead of next week's debut of Private Cloud Compute, Apple's new private AI infrastructure.

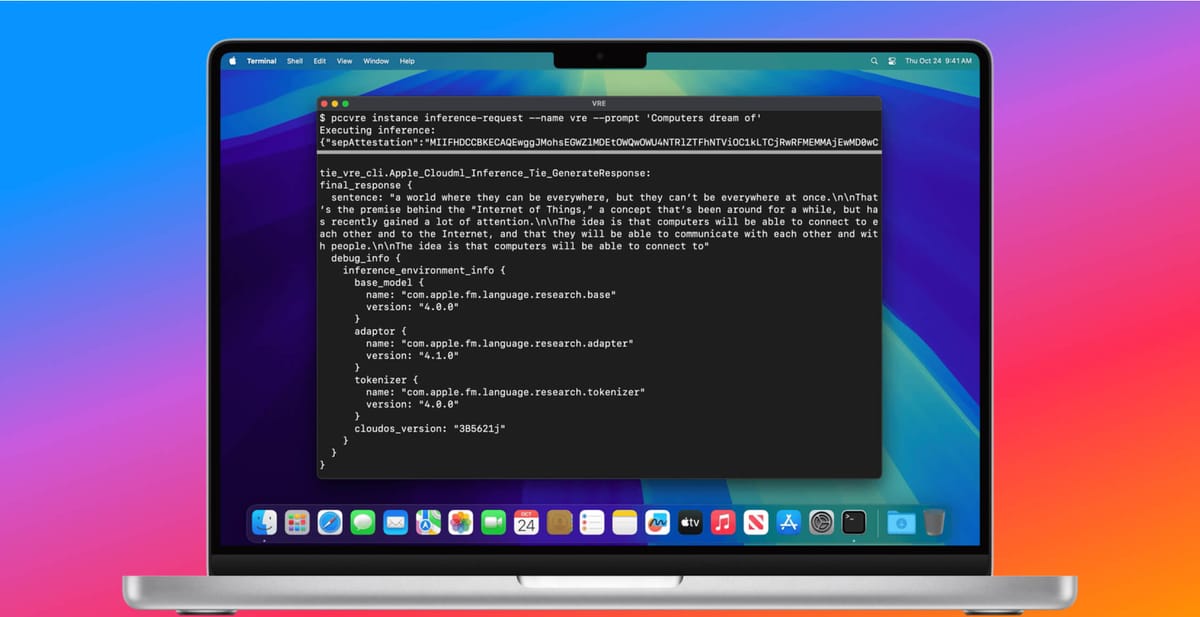

The company is taking the unusual step of providing researchers with direct access to the system's architecture through a virtual testing environment that runs on Mac computers. This is a significant departure from Apple's traditionally restrictive approach to its systems.

"We're expanding Apple Security Bounty to include rewards for vulnerabilities that demonstrate a compromise of the fundamental security and privacy guarantees of Private Cloud Compute," Apple's security team wrote in a blog post announcing the program.

The bounty program focuses on three main threat categories: accidental data exposure, external attacks through user requests, and vulnerabilities requiring physical or internal access. The highest rewards are reserved for researchers who find ways to execute unauthorized code or access user data outside the system's security boundaries.

To help researchers probe the system, Apple is releasing source code for key security components and providing a Virtual Research Environment that runs on Apple Silicon Macs with at least 16 GB of memory. This environment lets researchers examine how the system processes AI requests while maintaining user privacy.

Apple is positioning Private Cloud Compute (PCC) as an industry leader in secure AI compute, claiming that it extends the security protections iOS users have come to trust into the cloud. By paying researchers to test and verify these protections, Apple hopes to establish PCC as a gold standard in privacy-first AI cloud services.

This initiative comes as tech companies face increasing scrutiny over the privacy and security of their AI systems. By opening its AI infrastructure to external verification, Apple appears to be differentiating itself from competitors through a focus on verifiable privacy protections.

The Private Cloud Compute service, which launches next week, will handle intensive AI processing tasks for Apple's devices while aiming to maintain the company's device-level security standards in the cloud.

Interested researchers can get started by accessing the Private Cloud Compute Virtual Research Environment in the latest macOS Sequoia 15.1 Developer Preview, provided they have the necessary Apple hardware.