Apple researchers have developed a new AI framework called UI-JEPA (User Interface Joint Embedding Predictive Architecture) that can predict what you want to do on your phone based on your interactions. It's a fascinating glimpse into what future iPhones could be capable of.

Right now, the frontier multimodal models (like GPT-4 Turbo or Claude 3) that can handle this task need tons of compute power. Making API calls and sending requests back and forth to a remote server isn't great for our phones. It eats up battery life, slows things down, and could put our privacy at risk.

What's really impressive here is that UI-JEPA runs entirely on your device, working out what you're trying to do quickly and privately. It does almost the same job as those big, powerful systems, but it's much faster and uses far less computing power: about 50 times less than the current top-tier systems.

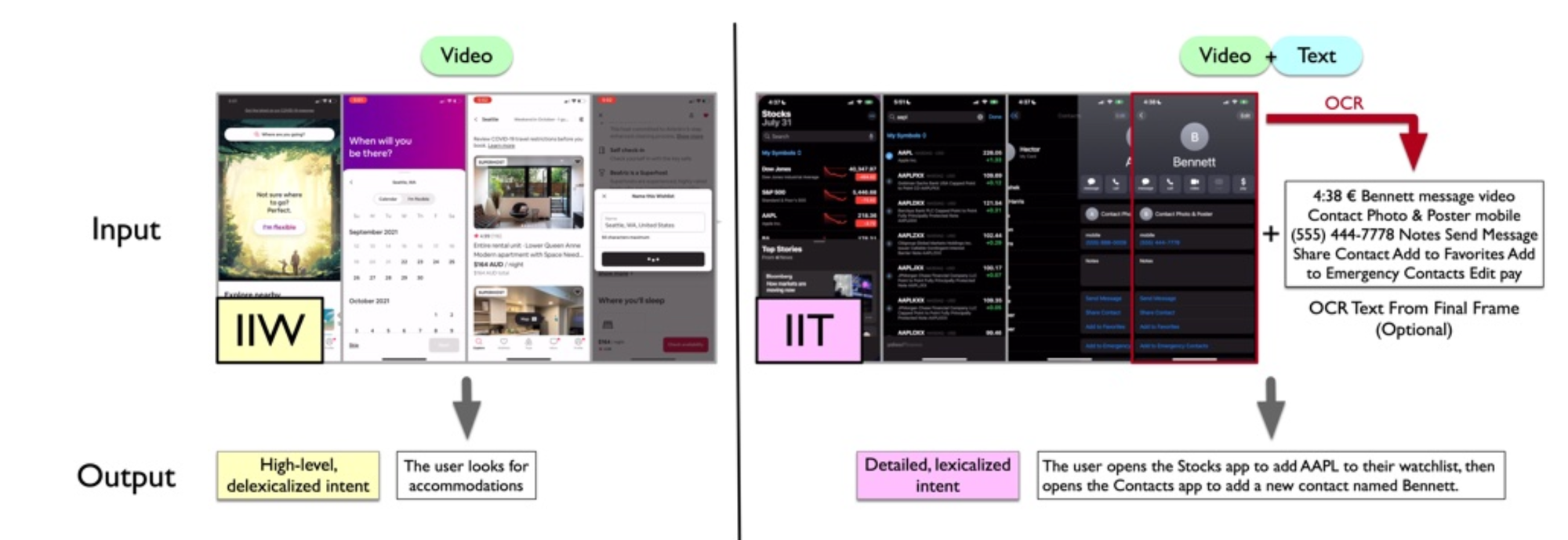

To benchmark UI-JEPA, Apple created two datasets of videos showing people using their phones. One, called "Intent in the Wild," has 1,700 videos spanning 219 intent categories and covers all sorts of open-ended phone tasks. The other, "Intent in the Tame," focuses on more common tasks, with 914 labeled videos across 10 categories.

When evaluated on these datasets, UI-JEPA actually beat leading multimodal LLMs like GPT-4 Turbo and Claude 3.5 Sonnet on intent similarity scores, by an average of 10.0% and 7.2%, respectively. And again, keep in mind UI-JEPA's significantly smaller footprint.

If you're interested in the technical details, check out the paper here. For most people, however, the important question is: How will this tech actually change your iPhone experience?

Well, for starters, imagine Siri getting a whole lot smarter. UI-JEPA could help it understand exactly what you're trying to do, even when you can't clearly articulate it. For example, if you ask for something and Siri gets it right, UI-JEPA can remember that. If Siri gets it wrong, it can learn from that too. Over time, your phone could get better and better at helping you out, without shipping your personal data off to a remote server.

Additionally, UI-JEPA can keep track of what you're doing across different apps. So if you're planning a trip and bouncing between your calendar, a travel app, and your notes, your phone could understand the bigger picture of what you're up to and offer more helpful suggestions.

Of course, this is early research, and the tech isn't perfect yet. Apple points out that the AI sometimes gets confused by apps or tasks it has never encountered before. And the researchers haven't tested it with voice commands yet, so that's still in the works.

But here's the bottom line: This could be the start of smartphones that really get us. They'd learn from how we use them, making our daily digital lives a whole lot easier. No more fighting with your phone to do simple tasks – it'll just know what you want.

And no, since I know somebody will ask: don't expect to see UI-JEPA tech in the new iPhone 16 models that go on sale later this month.