Apple researchers, in collaboration with Carnegie Mellon University, have developed an AI system called the Never-ending UI Learner that continuously interacts with real-world mobile apps to build a deeper understanding of user interface design patterns and trends. The system, presented at this year's ACM Symposium on User Interface Software and Technology (UIST), offers a novel approach to training the machine learning models that power accessibility, testing, and design tools for app developers.

So far, the Never-ending UI Learner has crawled for more than 5,000 device-hours, performing over half a million actions across 6,000 apps to train three computer vision models: (i) tappability prediction, (ii) draggability prediction, and (iii) screen similarity.
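The article does not describe how the screen-similarity model is used internally. A common way to turn such a model into a yes/no decision is to compare screen embeddings. The sketch below assumes the model maps each screenshot to a fixed-length embedding vector; `cosine_similarity`, `same_screen`, and the 0.9 threshold are hypothetical choices for illustration.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def same_screen(emb_a: Sequence[float], emb_b: Sequence[float], threshold: float = 0.9) -> bool:
    """Hypothetical rule: treat two screenshots as the same screen if their embeddings are close."""
    return cosine_similarity(emb_a, emb_b) >= threshold
```

A crawler could use a check like this to avoid re-exploring a screen it has already visited, even when small details such as timestamps or feed content differ.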

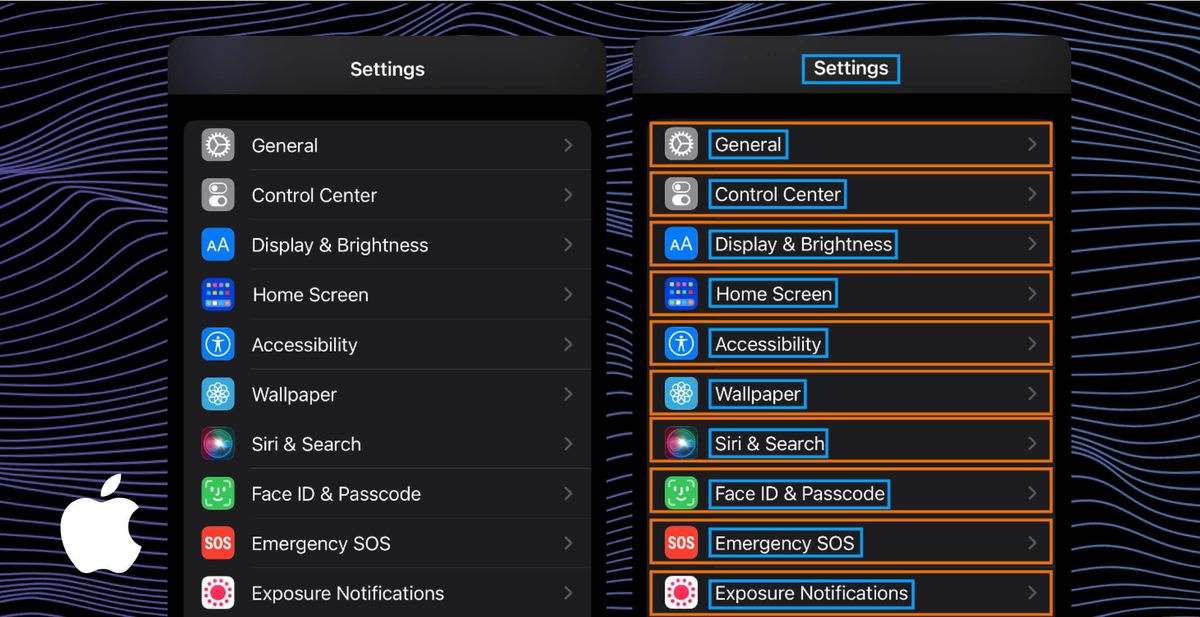

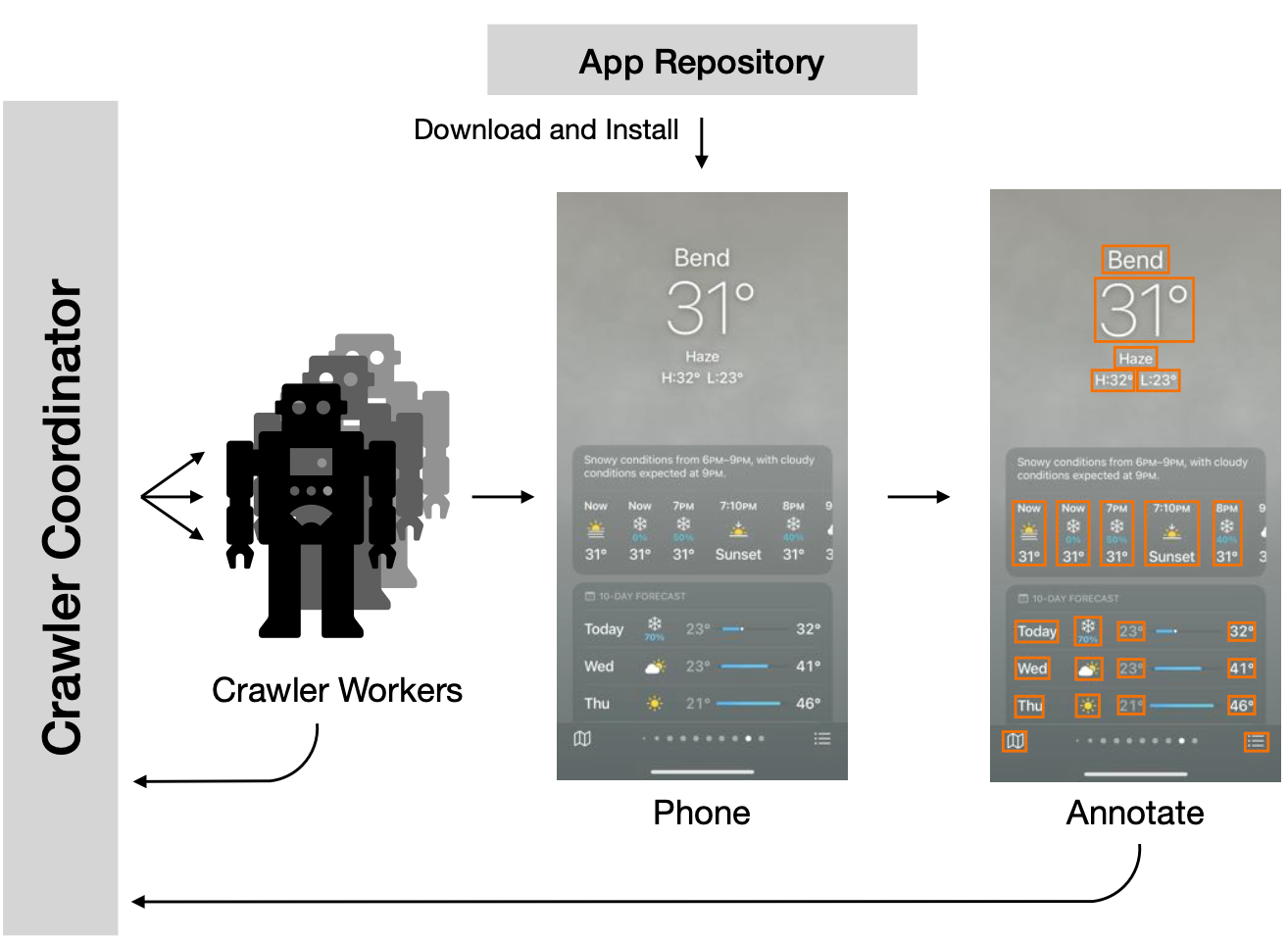

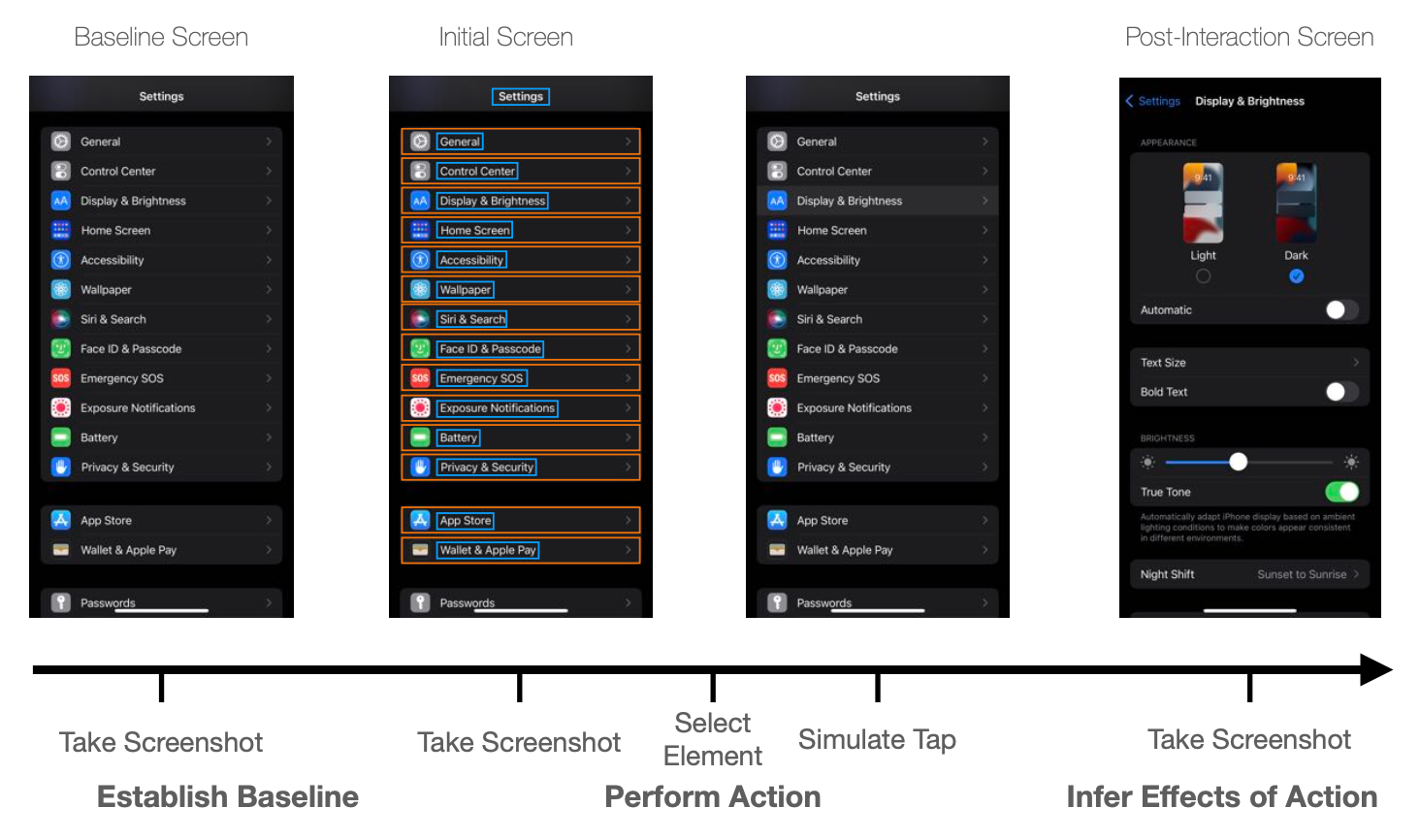

The Never-ending UI Learner automatically crawls through thousands of apps downloaded from the iOS App Store, performing taps, swipes, and other actions on elements within each app's user interface. As it interacts with apps, it applies carefully designed heuristics to label UI elements, for example whether a button can be tapped or an image can be dragged. This labeled data is then used to train computer vision models to predict UI properties like "tappability" and "draggability" directly from the screenshot image.
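To make the labeling step concrete, here is a minimal sketch of one possible tappability heuristic: tap an element and call it tappable if the screen changes noticeably. The article does not spell out the paper's actual heuristics, so the screenshot representation (grayscale pixel rows), the function names, and both thresholds are hypothetical.

```python
from typing import List

# Hypothetical representation: a screenshot as rows of grayscale pixel values (0-255).
Screenshot = List[List[int]]


def changed_fraction(before: Screenshot, after: Screenshot, pixel_tol: int = 10) -> float:
    """Fraction of pixels whose value changed by more than pixel_tol."""
    if len(before) != len(after) or any(len(rb) != len(ra) for rb, ra in zip(before, after)):
        raise ValueError("screenshots must have the same dimensions")
    total = 0
    changed = 0
    for row_b, row_a in zip(before, after):
        for pb, pa in zip(row_b, row_a):
            total += 1
            if abs(pb - pa) > pixel_tol:
                changed += 1
    return changed / total if total else 0.0


def label_tappable(before: Screenshot, after: Screenshot, min_change: float = 0.01) -> bool:
    """Hypothetical heuristic: an element is tappable if tapping it visibly changes the screen."""
    return changed_fraction(before, after) >= min_change
```

A naive pixel diff like this would mislabel elements on screens with animations, carousels, or ads that change on their own; a real crawler would need to account for such dynamic content, for example by comparing against a second screenshot taken without any action.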

A key benefit of this active crawling approach is that the system can discover challenging examples that static human-labeled datasets may miss. For instance, human annotators looking at screenshots may not reliably identify all tappable elements due to ambiguous visual signifiers. By tapping on elements and observing the effects at runtime, the crawler can produce more accurate ground truth data.
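One way to see why runtime labels help: once the crawler has actually tapped an element, the outcome can be compared with what a screenshot-only model predicted, and disagreements point to visually ambiguous cases. The sketch below assumes a model that outputs a tappability probability; `Observation`, `hard_examples`, and the 0.5 threshold are illustrative and not taken from the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Observation:
    element_id: str
    predicted_prob: float   # model's predicted probability that the element is tappable
    runtime_tappable: bool  # label obtained by actually tapping the element


def hard_examples(observations: List[Observation], threshold: float = 0.5) -> List[str]:
    """Return IDs of elements where the visual prediction disagrees with the runtime label."""
    return [
        o.element_id
        for o in observations
        if (o.predicted_prob >= threshold) != o.runtime_tappable
    ]
```

Elements flagged this way (a plain-looking label that turns out to be tappable, or a button-like graphic that does nothing) are exactly the cases a human annotator working from screenshots alone is likely to get wrong.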

Over thousands of hours of app exploration, the Never-ending UI Learner has accumulated over half a million labeled screenshots, an order of magnitude more data than previous datasets. The researchers showed that models trained on this data improve over time, with tappability prediction reaching 86% accuracy after five rounds of training.
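The crawl, label, and retrain cycle behind these rounds can be summarized as a simple loop. This is a sketch under the assumption that each round retrains on all data collected so far; `never_ending_loop` and its callable parameters are hypothetical stand-ins for the crawler, the training code, and the evaluation.

```python
from typing import Callable, List, Tuple, TypeVar

Example = TypeVar("Example")
Model = TypeVar("Model")


def never_ending_loop(
    crawl_and_label: Callable[[int], List[Example]],  # crawl apps and return newly labeled examples
    train: Callable[[List[Example]], Model],          # train a model on the accumulated dataset
    evaluate: Callable[[Model], float],               # e.g. accuracy on a held-out set
    epochs: int = 5,
) -> List[Tuple[int, int, float]]:
    """Run repeated crawl/label/retrain rounds and record (epoch, dataset size, score)."""
    dataset: List[Example] = []
    history: List[Tuple[int, int, float]] = []
    for epoch in range(1, epochs + 1):
        dataset.extend(crawl_and_label(epoch))  # grow the dataset with this round's labels
        model = train(dataset)                  # assumption: retrain on everything collected so far
        history.append((epoch, len(dataset), evaluate(model)))
    return history
```

Passing the crawler, trainer, and evaluator in as functions keeps the loop independent of any particular model, and the returned history records dataset size and score after each round, the kind of curve used to show a model improving over successive crawl epochs.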

In the paper, the researchers explore how the frequency of re-crawling apps and retraining models affects the Never-ending UI Learner's performance. More frequent updates (e.g., daily) allow the system to adapt to smaller changes, like dynamic content updates. Less frequent updates, such as monthly, could better capture substantial app changes from new versions. There is a balancing act: training too frequently risks "catastrophic forgetting" of past knowledge, while training too infrequently misses opportunities to learn.
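A standard mitigation for catastrophic forgetting in continual learning is experience replay: each retraining round mixes newly crawled examples with a sample of older ones. The article does not say whether the Never-ending UI Learner uses replay; the sketch below only illustrates the general technique, and `build_training_set` and `replay_ratio` are hypothetical names.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def build_training_set(
    new: Sequence[T],
    old: Sequence[T],
    replay_ratio: float = 0.5,
    seed: int = 0,
) -> List[T]:
    """Combine newly crawled examples with a random sample of earlier ones.

    replay_ratio is the target fraction of replayed (old) examples in the result.
    """
    if not 0.0 <= replay_ratio < 1.0:
        raise ValueError("replay_ratio must be in [0, 1)")
    rng = random.Random(seed)
    # Solve n_old / (len(new) + n_old) = replay_ratio for n_old.
    n_old = round(len(new) * replay_ratio / (1.0 - replay_ratio))
    # Never request more old examples than exist.
    n_old = min(n_old, len(old))
    replayed = rng.sample(list(old), n_old)
    mixed = list(new) + replayed
    rng.shuffle(mixed)  # interleave new and old examples
    return mixed
```

Raising `replay_ratio` weights training toward what the model has already learned, while lowering it favors adapting to recent UI changes, mirroring the trade-off between frequent and infrequent updates.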

During experiments, the researchers found that their chosen cadence of updates every 1-2 days worked reasonably well. But the ideal retraining frequency likely depends on the rate of evolution in app design and the downstream use cases.

For applications like accessibility repair, more frequent updates may be warranted to catch incremental changes. But for summarization or design pattern mining, longer intervals that allow the accumulation of bigger UI changes could be preferable. Further research is needed to determine the optimal retraining and update schedules.

The work, however, highlights the potential of never-ending learning, which allows systems to continuously adapt and improve as they ingest more data. While the current system focuses on modeling simple semantics like tappability, Apple hopes to eventually apply similar principles to learn more sophisticated representations of mobile UIs and interaction patterns.

While the Never-ending UI Learner demonstrates promising capabilities, the current research has several limitations. For one, the system is implemented only for iOS apps, so it's unclear how well the approach would generalize to other platforms like Android.

The existing crawler also focuses primarily on free apps that don't require login, biasing the types of UIs it encounters. In addition, the semantics modeled so far are relatively simple, like tappability and draggability. More complex UI element types and interactions likely require more sophisticated techniques. The research also evaluates performance over a relatively short timeframe of just five crawl epochs. Long-term operation remains unvalidated.

Despite these limitations, the Never-ending UI Learner offers an encouraging step towards automated systems that continuously learn and adapt alongside evolutions in UI design. Research like this could have significant implications:

- Accessibility Improvements: By understanding UI semantics, we can make apps more accessible, filling critical usability gaps.

- Automated Testing and Feedback: The tool can be employed for automated testing of apps, identifying potential design flaws or user experience enhancements.

- Predictive Modeling: By understanding user engagement and interaction patterns, designers and developers can anticipate user needs, optimizing app designs proactively.

- Personalization at Scale: With its continuous learning approach, the tool can potentially understand diverse user behaviors and preferences at a granular level. This could pave the way for hyper-personalized UIs, where interfaces adapt and cater to individual user preferences, enhancing the overall user experience.

With access to a huge corpus of real-world apps, the Never-ending UI Learner offers an intriguing path towards systems that can automatically understand and intelligently interact with mobile interfaces. As user interface design evolves, never-ending learning could, in principle, keep models up to date as designs change, a notable advantage over training on static human-curated datasets.