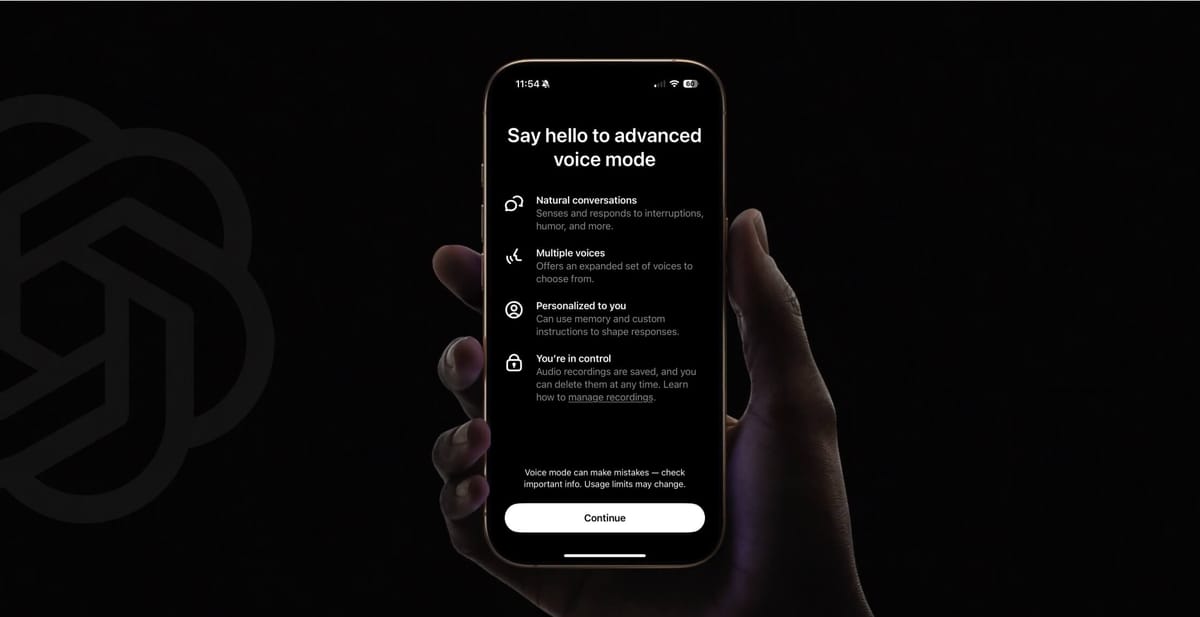

OpenAI rolled out its much-anticipated Advanced Voice to ChatGPT Plus and Team users this week, with Enterprise and Education customers gaining access next week. The update marks a significant leap in AI-powered voice interaction, bringing more natural and responsive conversations to ChatGPT users.

rollout completed early, amazing work by the team! (except for jurisdictions that require additional external review) https://t.co/ENCSL8VWe6

— Sam Altman (@sama) September 25, 2024

Advanced Voice uses GPT-4o, a fully multimodal model trained to natively understand speech elements. This differs from "standard voice" conversations, which rely on separate speech-to-text and text-to-speech models. With Advanced Voice, the interaction is more fluid and context-aware: the model can pick up on non-verbal cues such as speaking speed and respond with appropriate emotion.

To start an Advanced Voice conversation, select the Voice icon in the bottom-right corner of the screen.

Here are the key features that Advanced Voice brings:

- Five new voices join the existing lineup, offering users a choice of nine distinct personalities: Vale, Spruce, Arbor, Maple, Sol, Breeze, Cove, Ember, and Juniper.

- Improved accent recognition allows for more accurate communication across various English dialects.

- The system now supports over 50 languages, showcasing its enhanced multilingual capabilities.

- Custom Instructions and Memory features are now accessible in voice conversations, allowing for more personalized interactions.

We’ve also improved conversational speed, smoothness, and accents in select foreign languages. pic.twitter.com/d3QOIBFCZb

— OpenAI (@OpenAI) September 24, 2024

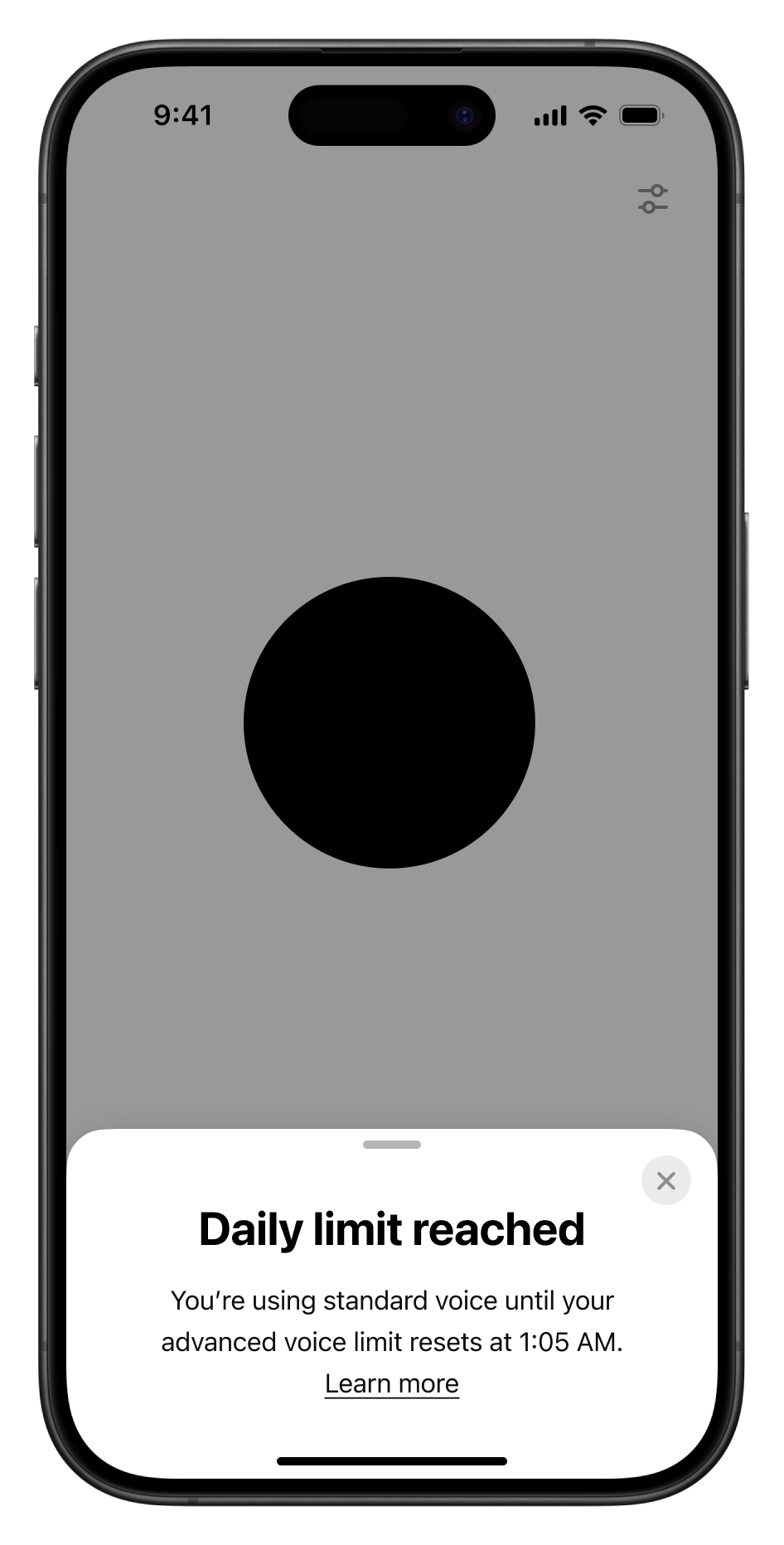

While it is easy to get lost chatting away with the new voice mode, be aware that usage limits apply and fluctuate based on demand. OpenAI has not specified the exact daily limits for Plus and Team users, but you will receive a notification when you have 15 minutes remaining. Once the limit is reached, you can continue in standard voice mode.

Advanced Voice is not yet available in several European countries, including the EU member states, the UK, Switzerland, Iceland, Norway, and Liechtenstein.

OpenAI has implemented important privacy measures for voice interactions. Audio clips from your conversations are stored alongside the chat transcriptions and are retained as long as the chat history exists. If you delete a chat, the audio will be removed within 30 days, barring legal or security requirements. If you archive a chat, the audio will be retained.

The company does not train its models on voice chat audio clips unless you explicitly opt in through the "Improve voice for everyone" setting under Data Controls.

Interacting with ChatGPT in Advanced Voice mode feels very natural. By integrating multimodal understanding, the system can provide more contextually appropriate and emotionally nuanced responses. This development could pave the way for more sophisticated AI assistants in various fields, from customer service to education and beyond.

As AI continues to evolve, voice interfaces like Advanced Voice Mode may become increasingly prevalent in our daily digital interactions, offering a more intuitive and accessible way to engage with artificial intelligence.