Cohere has introduced Command R+, the newest and most powerful member of its family of open weight language models, purpose-built for enterprise-grade workloads. The model offers a compelling value proposition for businesses looking to integrate AI into their operations.

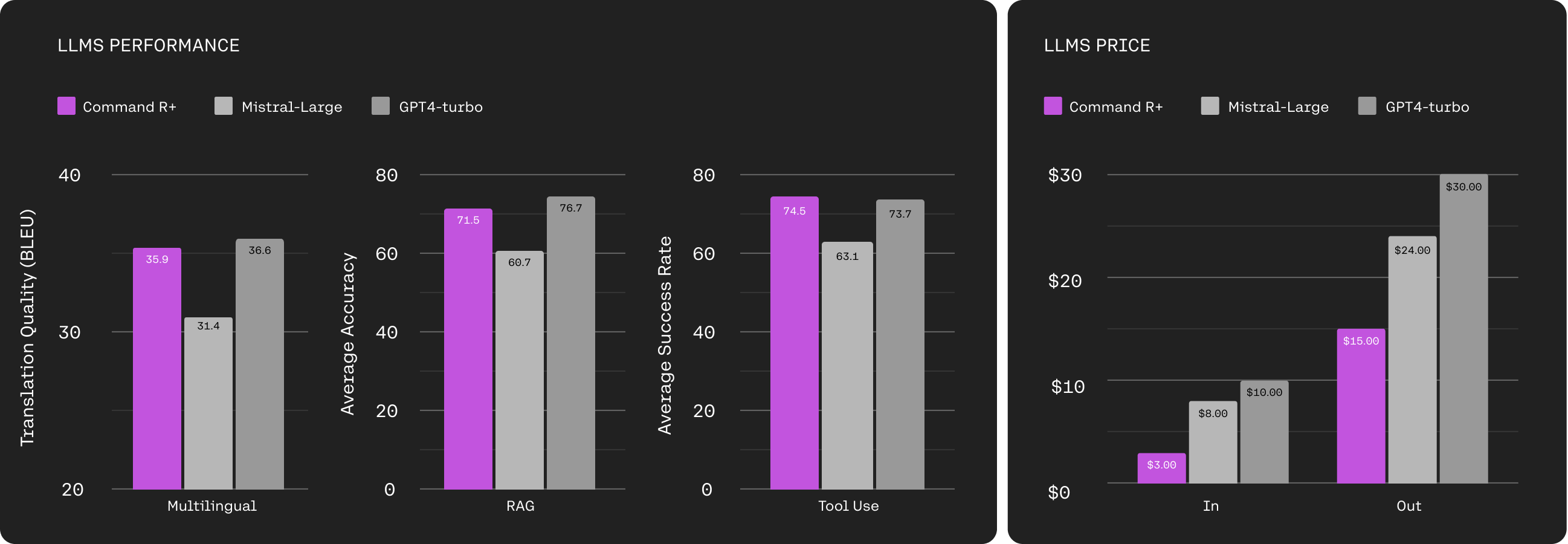

Command R+ builds on the strengths of the recently released Command R model. It has a 128k-token context window and excels in key areas such as advanced retrieval augmented generation (RAG), multilingual coverage, and tool use. According to Cohere, the 104-billion-parameter model outperforms comparable offerings in the scalable market category while remaining competitive with more expensive models on critical business capabilities.
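
In a RAG setting, the 128k-token window is a budget. The retrieved passages, the question, and the room reserved for the answer all have to fit inside it. The Python sketch below is a hypothetical helper, not part of any Cohere SDK, that keeps the most relevant passages that still fit. It assumes token counts were already computed with the model's tokenizer and reads "128k" as 128,000 tokens.

```python
# Assumption: "128k" is read as 128,000 tokens; check the model card for the exact limit.
CONTEXT_WINDOW = 128_000


def pack_passages(
    passages: list[tuple[str, int]],
    question_tokens: int,
    reserved_output_tokens: int,
    window: int = CONTEXT_WINDOW,
) -> tuple[list[str], int]:
    """Greedily keep passages that fit in the remaining context budget.

    `passages` holds (passage_id, token_count) pairs, already sorted from most
    to least relevant. Token counts are assumed to come from the model's tokenizer.
    """
    budget = window - question_tokens - reserved_output_tokens
    if budget < 0:
        raise ValueError("question plus reserved output already exceeds the window")

    chosen: list[str] = []
    used = 0
    for passage_id, n_tokens in passages:
        # Skip any passage that would overflow, but keep trying the ones after it.
        if used + n_tokens <= budget:
            chosen.append(passage_id)
            used += n_tokens
    return chosen, used


if __name__ == "__main__":
    retrieved = [("a", 60_000), ("b", 50_000), ("c", 30_000), ("d", 10_000)]
    print(pack_passages(retrieved, question_tokens=2_000, reserved_output_tokens=4_000))
    # prints (['a', 'b', 'd'], 120000)
```

Greedy packing in relevance order is only one simple policy. Real pipelines often re-rank or truncate passages instead of skipping them outright.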

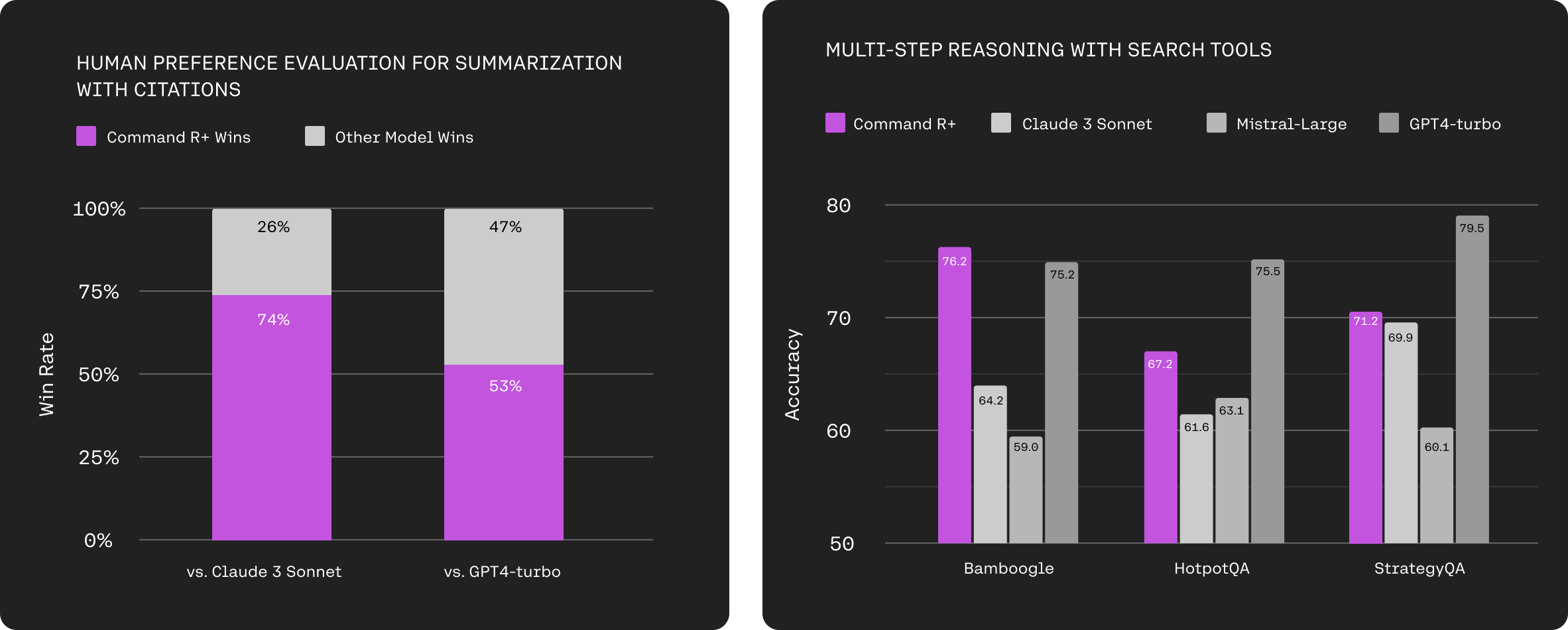

One of the standout features of Command R+ is what Cohere calls its industry-leading RAG capability. Optimized for advanced RAG, the model is designed to improve response accuracy and provides in-line citations that tie answers back to source documents, helping to mitigate hallucinations. Cohere says this lets enterprises scale AI across business functions, quickly finding the most relevant information to support tasks in finance, HR, sales, marketing, and customer support.
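
In-line citations work by linking each claim in an answer to a specific retrieved document. The rough Python illustration below tags each snippet with an ID, asks for `[doc_id]` citations, and then checks that every cited ID refers to a document that was actually supplied. The prompt wording, the `[doc_id]` convention, and both helper names are assumptions. Cohere's hosted API has its own interface for passing documents and returning citations, and this sketch does not attempt to reproduce it.

```python
import re

# Hypothetical citation convention: the model is asked to cite sources as [doc_id].
CITATION = re.compile(r"\[([A-Za-z0-9_-]+)\]")


def build_grounded_prompt(question: str, documents: dict[str, str]) -> str:
    """Prefix each retrieved snippet with its ID so the answer can cite it."""
    numbered = "\n".join(f"[{doc_id}] {text}" for doc_id, text in documents.items())
    return (
        "Answer using only the documents below. "
        "Cite every claim inline as [doc_id].\n\n"
        f"{numbered}\n\nQuestion: {question}"
    )


def check_citations(answer: str, documents: dict[str, str]) -> tuple[set[str], set[str]]:
    """Return (all cited IDs, cited IDs that match no supplied document)."""
    cited = set(CITATION.findall(answer))
    unknown = cited - set(documents)
    return cited, unknown


if __name__ == "__main__":
    docs = {
        "doc1": "Q2 revenue was 135, up from 120 in Q1.",
        "doc2": "Growth was driven by the EMEA region.",
    }
    print(build_grounded_prompt("How did revenue change in Q2?", docs))
    # A made-up answer; [doc7] was never supplied, so it should be flagged.
    answer = "Revenue rose 12.5% [doc1], mostly from EMEA [doc2][doc7]."
    cited, unknown = check_citations(answer, docs)
    print(sorted(cited), sorted(unknown))  # prints ['doc1', 'doc2', 'doc7'] ['doc7']
```

Flagging citations that point at unknown documents is a cheap post-hoc check. It catches fabricated sources but cannot confirm that a cited document actually supports the claim.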

"Enterprises are clearly looking for highly accurate and efficient AI models like Cohere's latest Command R+ to move into production," said Miranda Nash, group vice president of Applications Development & Strategy at Oracle. "Models from Cohere, integrated in Oracle NetSuite and Oracle Fusion Cloud Applications, are helping customers address real-world business problems and improve productivity across areas such as finance, HR, and marketing."

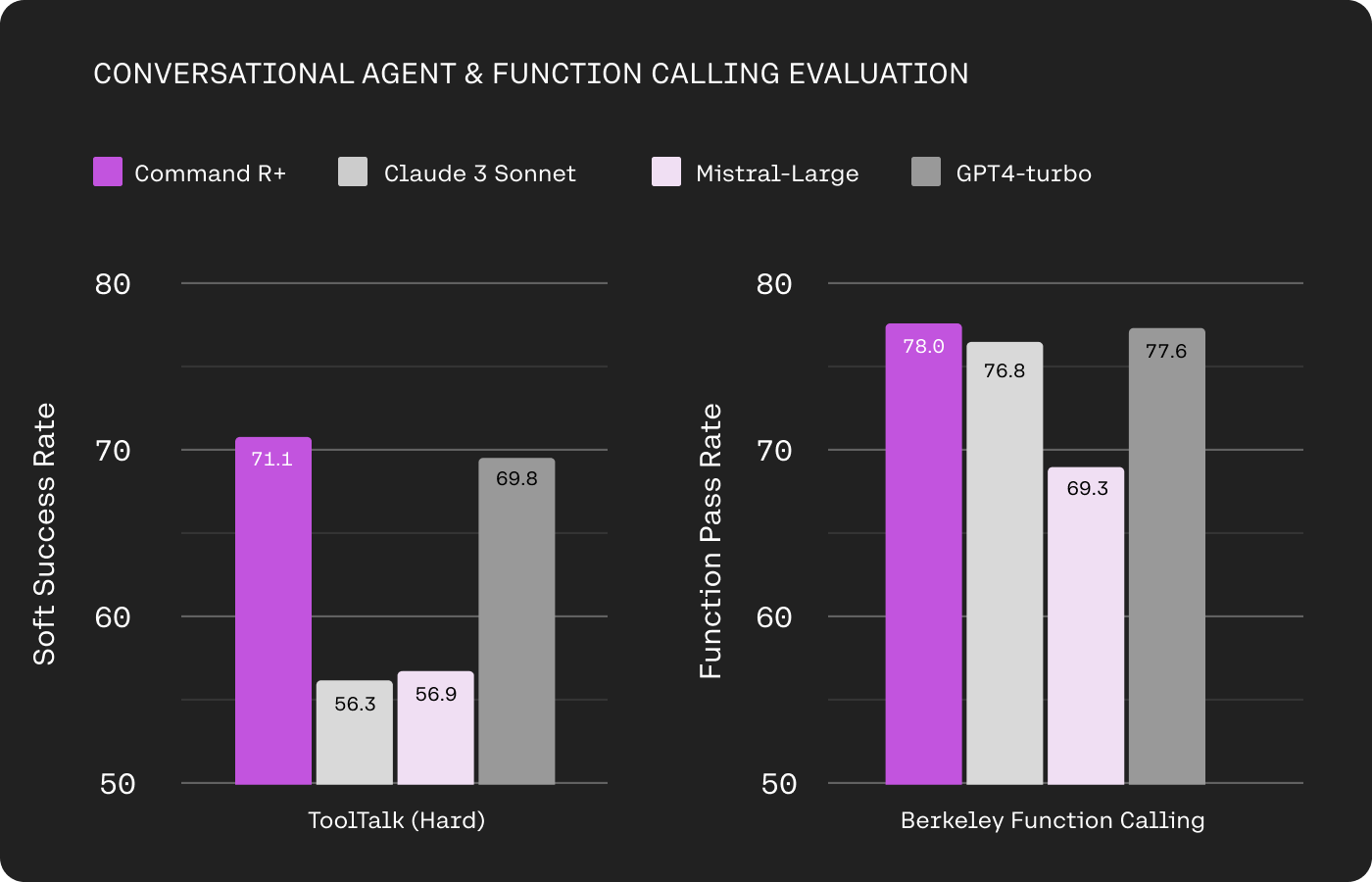

In addition to RAG, Command R+ comes with tool use capabilities accessible through Cohere's API and LangChain. This allows the model to automate complex business workflows, upgrading applications from simple chatbots to powerful agents and research tools. The new multi-step tool use feature enables the model to combine multiple tools over multiple steps, even correcting itself when encountering bugs or failures.
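
Multi-step tool use can be pictured as a loop. The model picks a tool call, the application executes it, and the result or error is fed back so the model can choose the next step or retry. The Python sketch below shows that loop with two hypothetical tools and a scripted `scripted_planner` function standing in for the model. It is not Cohere's or LangChain's API, and every name in it is illustrative.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class ToolCall:
    name: str
    args: dict[str, Any]


# Hypothetical business tools, for illustration only.
def lookup_revenue(quarter: str) -> float:
    data = {"Q1": 120.0, "Q2": 135.0}
    if quarter not in data:
        raise KeyError(f"unknown quarter {quarter!r}")
    return data[quarter]


def percent_change(old: float, new: float) -> float:
    return (new - old) / old * 100


TOOLS: dict[str, Callable[..., Any]] = {
    "lookup_revenue": lookup_revenue,
    "percent_change": percent_change,
}

# Each history entry is (call, result, error message or None).
Step = tuple[ToolCall, Any, Optional[str]]


def run_agent(
    plan_next_step: Callable[[list[Step]], Optional[ToolCall]],
    max_steps: int = 10,
) -> list[Step]:
    history: list[Step] = []
    for _ in range(max_steps):
        call = plan_next_step(history)
        if call is None:  # the planner decides it is done
            return history
        try:
            history.append((call, TOOLS[call.name](**call.args), None))
        except Exception as exc:  # record the failure so the planner can react
            history.append((call, None, f"{type(exc).__name__}: {exc}"))
    raise RuntimeError("step limit reached without finishing")


def scripted_planner(history: list[Step]) -> Optional[ToolCall]:
    """Stands in for the model: chooses the next call from what happened so far."""
    if not history:
        return ToolCall("lookup_revenue", {"quarter": "Q01"})  # deliberate mistake
    last_call, _, last_error = history[-1]
    if last_error is not None:
        return ToolCall(last_call.name, {"quarter": "Q1"})  # self-correct and retry
    results = [result for _, result, error in history if error is None]
    if len(results) == 1:
        return ToolCall("lookup_revenue", {"quarter": "Q2"})
    if len(results) == 2:
        return ToolCall("percent_change", {"old": results[0], "new": results[1]})
    return None


if __name__ == "__main__":
    for call, result, error in run_agent(scripted_planner):
        print(call.name, call.args, "->", error or result)
```

Failures are recorded in `history` instead of aborting the run. That lets the planner see the `KeyError` from the mistyped quarter and retry with a corrected argument, which is a miniature version of the self-correction behaviour described above. The final step computes a 12.5% change.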

To serve global business operations, Command R+ excels in 10 key languages: English, French, Spanish, Italian, German, Portuguese, Japanese, Korean, Arabic, and Chinese. This multilingual capability allows users to generate accurate responses from diverse data sources, regardless of their native language.

Cohere stresses that it remains committed to data privacy and security, offering customers additional protections and the option to opt out of data sharing. The company works with major cloud providers and also supports on-premises deployments for regulated industries and privacy-sensitive use cases.

Developers and businesses can access Command R+ first on Azure, starting today, and on Oracle Cloud Infrastructure (OCI) and other cloud platforms in the coming weeks. The model is also available immediately on Cohere's hosted API. Pricing for Command R+ is set at $3.00 per million input tokens and $15.00 per million output tokens. You can try a chat demo of the model using Coral playground or in this Hugging Face space.
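
At these rates, cost scales linearly with token counts, and output tokens cost five times as much as input tokens. The minimal Python estimator below hard-codes the launch prices quoted above. Those prices may change, so treat the constants as assumptions and check Cohere's pricing page for current rates.

```python
# Launch list prices quoted in this article, in USD per million tokens.
INPUT_USD_PER_MILLION = 3.00
OUTPUT_USD_PER_MILLION = 15.00


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Linear per-token pricing: input and output tokens are billed separately."""
    return (
        input_tokens * INPUT_USD_PER_MILLION + output_tokens * OUTPUT_USD_PER_MILLION
    ) / 1_000_000


if __name__ == "__main__":
    # One RAG-style request: a long prompt and a short answer.
    print(f"${estimate_cost_usd(100_000, 2_000):.2f}")  # prints $0.33
    # 1,000 such requests.
    print(f"${estimate_cost_usd(100_000_000, 2_000_000):.2f}")  # prints $330.00
```

For long-context RAG workloads, the input side usually dominates. In the example above, the 100,000 prompt tokens account for $0.30 of the $0.33.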

N.B. The open weights are released under a non-commercial license. However, Aidan Gomez, Cohere's co-founder and CEO, has indicated that the company is willing to make exceptions on an individual basis.

> As with Command R, we're releasing under a non-commercial license to make sure that we can continue to build a healthy business and support model releases like these. But if you want to self-host or build a derivative model, just reach out to us and (especially if you're a…
>
> — Aidan Gomez (@aidangomez) April 4, 2024