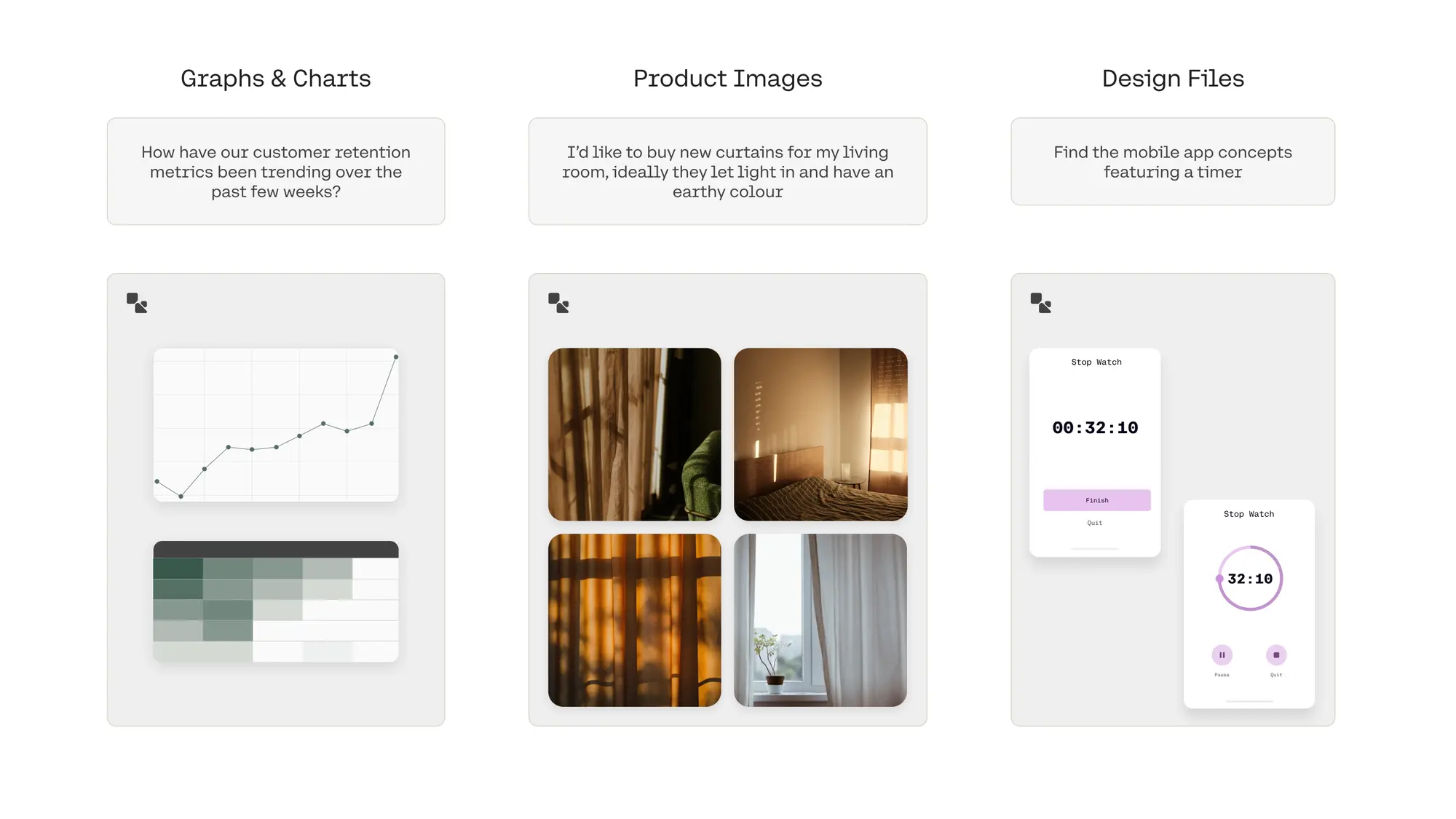

Cohere has announced that its Embed 3 model now supports multimodal search, allowing businesses to find relevant information across documents containing charts, product images, and design files.

The Embed 3 model, which Cohere describes as "industry-leading," now allows enterprises to generate embeddings from both text and images, placing the two types of content in the same searchable vector space. In practical terms, you can search for a chart or graph by describing what it shows, rather than relying on file names or manual tagging. For example, to find a sales chart from last quarter, you can describe the information it contains instead of recalling what the file was called.
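To make the idea of a shared vector space concrete, here is a minimal sketch of how a text query can retrieve an image once both are represented as vectors. It does not call Cohere's API: the three-dimensional vectors, the file names, and the `search` helper are all hypothetical stand-ins, and real embeddings have far more dimensions.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

# Hypothetical, hand-written vectors standing in for real embeddings.
# Text and image items sit side by side in the same index.
index = [
    {"id": "q3_sales_chart.png", "kind": "image", "vector": [0.9, 0.1, 0.0]},
    {"id": "hr_policy.txt", "kind": "text", "vector": [0.0, 0.2, 0.9]},
    {"id": "logo_mockup.png", "kind": "image", "vector": [0.1, 0.9, 0.1]},
]

def search(query_vector, items, top_k=1):
    """Return the top_k items most similar to the query, whatever their kind."""
    ranked = sorted(
        items,
        key=lambda item: cosine(query_vector, item["vector"]),
        reverse=True,
    )
    return ranked[:top_k]

# Stand-in for the embedding of a text query such as
# "sales by region last quarter" (assumed values, not model output).
query_vector = [0.8, 0.2, 0.1]

best = search(query_vector, index)[0]
print(best["id"], best["kind"])
```

Because the query and the indexed items are compared only by vector similarity, the file name and the content type play no part in the lookup.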

"Embed 3 is an essential part of our search and retrieval stack, ensuring we're able to find relevant information and provide accurate answers in our end-user HR application," says Willson Cross, Founder & CEO at Borderless AI. "The fact that it works across 100+ languages helps us serve our global customer base."

Multimodal embeddings have important use cases across various industries. E-commerce companies can enhance their product search capabilities by incorporating visual information alongside text descriptions. Design teams can quickly locate specific UI mockups or presentation templates based on natural language descriptions, streamlining their creative workflow.

Moreover, Embed 3 brings efficiency to corporate teams relying on data visualization. Complex data sets often live within charts and graphs, making traditional search methods cumbersome. With Embed 3, users can describe an insight they need, and the model will find the relevant visual representation. This can drive faster, more informed decision-making across different functions.

The Embed 3 model is notably proficient at mixed-modality searches. Unlike previous systems, which often prioritize text over image data or store each type in a separate part of the database, Embed 3 handles both types equally, prioritizing meaning over format. As a result, search results are not biased towards a particular modality, which makes them more relevant and balanced.
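The contrast between ranking by meaning and ranking by format can be illustrated with a small sketch. The similarity scores, file names, and both ranking functions below are hypothetical and only show the difference in ordering; they are not Cohere's implementation.

```python
# Hypothetical search hits: (modality, name, similarity score to the query).
hits = [
    ("text", "pricing_faq.md", 0.62),
    ("image", "pricing_tiers_chart.png", 0.88),
    ("text", "release_notes.md", 0.41),
    ("image", "team_photo.jpg", 0.12),
]

def rank_by_meaning(results):
    """Order purely by similarity score, ignoring modality."""
    return sorted(results, key=lambda hit: hit[2], reverse=True)

def rank_text_first(results):
    """Order text before images, then by score: a modality-biased ranking."""
    return sorted(results, key=lambda hit: (hit[0] != "text", -hit[2]))

unified = [name for _, name, _ in rank_by_meaning(hits)]
text_first = [name for _, name, _ in rank_text_first(hits)]

print(unified)
print(text_first)
```

In the score-only ranking the best-matching chart comes first, while the text-first ranking pushes it below two weaker text matches.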

It is also designed to handle noisy, real-world data and supports more than 100 languages, giving it a reach that matches the needs of enterprises operating globally. For example, a user could run a search in Portuguese and locate an infographic with both text and visuals in that language, or in any of the other supported languages.

The upgraded Embed 3 model is available now through Cohere's platform, Microsoft Azure AI Studio, and Amazon SageMaker, with options for private deployment in corporate environments.