NVIDIA has released Chat with RTX, an AI chatbot that runs locally on Windows PCs powered by NVIDIA RTX GPUs. First previewed at CES last month, the application is a tech demo that shows how RTX hardware can accelerate large language models and bring fast, custom generative AI experiences to the desktop.

The main draw of Chat with RTX is that it lets users connect their own content, including documents, notes, and even YouTube videos, to an open-source model such as Llama 2 or Mistral. Point it to a folder with your data and it will index the contents in seconds. Queries to the chatbot then use retrieval-augmented generation (RAG), NVIDIA TensorRT-LLM software, and NVIDIA RTX acceleration to return quick, contextually relevant answers grounded in that content. The tool supports various file formats, including text files, PDFs, and Word docs.
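To make the retrieval step concrete, here is a minimal sketch of the general RAG idea, not NVIDIA's implementation. It assumes simple word-count vectors and cosine similarity in place of the embedding model and vector index a real system would use, and it stops at building the prompt rather than calling an LLM. The function names and the two sample documents are made up for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(docs):
    """Map each document name to its term-count vector (the 'indexing' step)."""
    return {name: Counter(tokenize(text)) for name, text in docs.items()}


def retrieve(index, query, k=1):
    """Return the names of the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(index, key=lambda name: cosine(q, index[name]), reverse=True)
    return ranked[:k]


def build_prompt(docs, index, query, k=1):
    """Prepend the retrieved text to the question so the model answers from it."""
    context = "\n".join(docs[name] for name in retrieve(index, query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical sample data standing in for files in a user's folder.
docs = {
    "trip.txt": "The restaurant my partner recommended in Las Vegas was Red Rock Grill.",
    "gpu.txt": "The workstation has a GeForce RTX graphics card with 8GB of memory.",
}
index = build_index(docs)
prompt = build_prompt(docs, index, "Which restaurant was recommended in Las Vegas?")
print(prompt)
```

The resulting prompt contains only the retrieved document plus the question, which is what lets a local model answer from the user's own files rather than from its training data alone.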

Because Chat with RTX runs entirely on the user's Windows RTX machine, results are fast and private. There's no need to rely on cloud services or share data with third parties. NVIDIA says this also lets the app work without an internet connection.

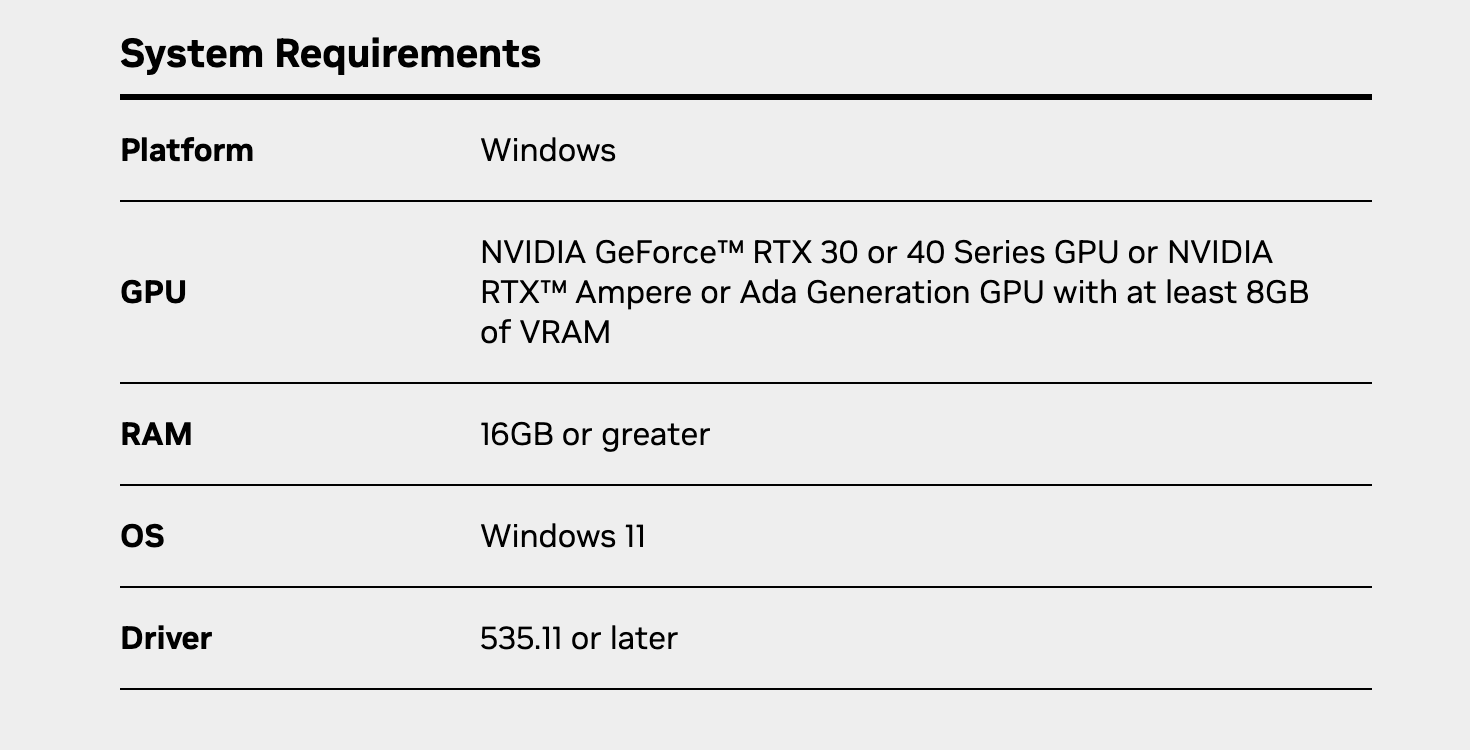

To run Chat with RTX, users need at least an RTX 30 series GPU with 8GB of VRAM, 16GB of system RAM, and Windows 11.

For developers, Chat with RTX provides a blueprint for building customized LLM apps with NVIDIA's TensorRT-LLM framework and GeForce RTX acceleration. The chatbot is built from the TensorRT-LLM RAG developer reference project, which is available on GitHub.