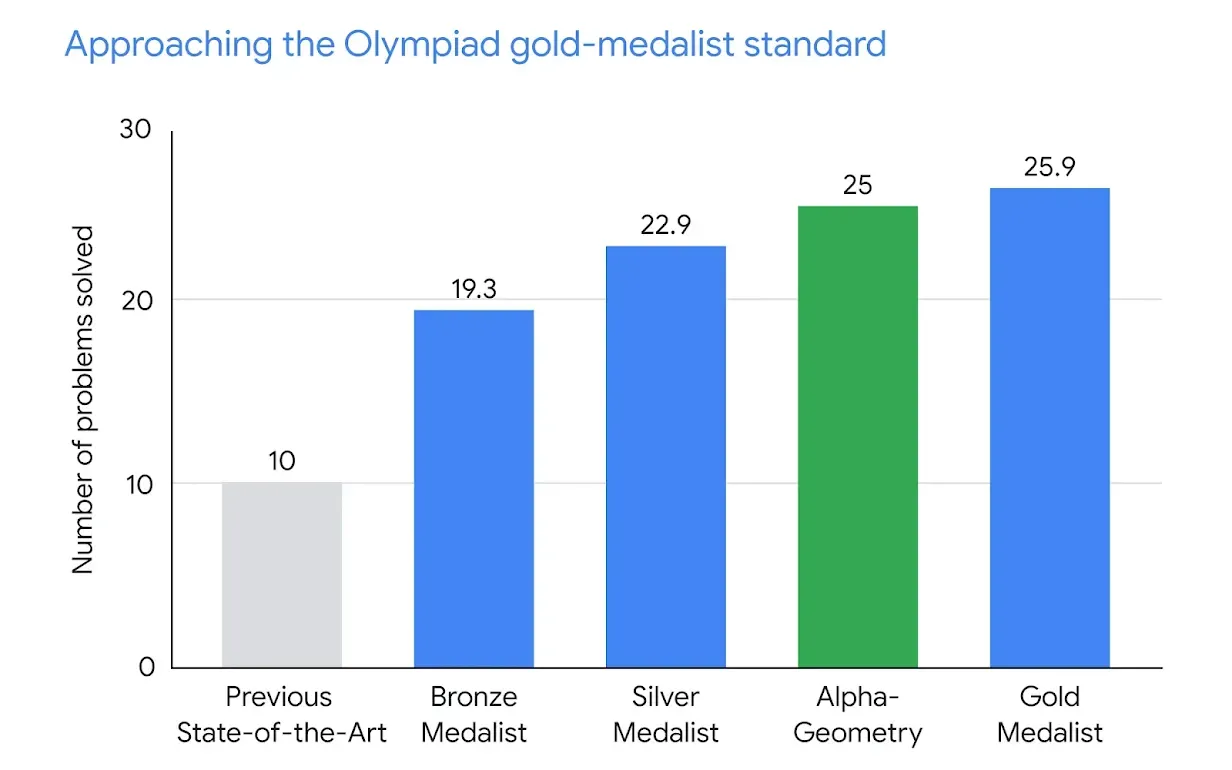

Google DeepMind and researchers at the Computer Science Department of New York University have unveiled AlphaGeometry, an AI system that solves Olympiad-level geometry problems at a skill level approaching that of the world's brightest high school math talents. As described in a paper published today in Nature, AlphaGeometry came close to the performance of International Mathematical Olympiad (IMO) gold medalists* on a benchmark of 30 challenging geometry problems drawn from past Olympiads.

The work represents a major leap forward for AI at the frontiers of math and reasoning. AlphaGeometry solved 25 of the 30 problems within the standard Olympiad time limit. By comparison, the previous state-of-the-art geometry solver, Wu's method, solved only 10, and the average human gold medalist solved 25.9.

Matching gifted teenagers who train intensively for math competitions might seem like a narrowly defined challenge. However, geometry requires creativity in constructing valid proofs and an intuitive mastery of spatial relationships, capabilities at the core of higher-level reasoning.

So far, AI's journey in mathematics has been bumpy, especially in geometry. Unlike many other areas of mathematics, geometry relies heavily on visual-spatial reasoning, and its proofs are hard to translate into formal, machine-verifiable languages. The result is a data bottleneck: existing machine-learning methods often falter here because so few human proofs have been translated into such languages.

So how does DeepMind’s system achieve Olympiad-caliber skill? AlphaGeometry pairs a neural language model with a rule-based symbolic deduction engine. The deduction engine derives new facts from a problem's premises and rigorously checks each logical step in the formal domain of Euclidean geometry. When the engine gets stuck, the language model suggests auxiliary constructions, such as a new point, line or circle, that open up promising avenues of exploration. The neural component steers creativity toward promising solutions, while the symbolic logic keeps every step mathematically sound: a flexible pairing.
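To make the alternation between the two components concrete, here is a toy Python sketch. It is a simplification under stated assumptions, not DeepMind's code: facts are plain strings, the "deduction engine" is a basic forward-chaining loop over hand-written rules, and the language model is replaced by a hypothetical stub (`make_stub_proposer`) that hands out candidate constructions from a fixed list. All names and the isosceles-triangle rules are illustrative inventions.

```python
from typing import Callable, FrozenSet, List, Optional, Set, Tuple

# Hypothetical Horn-style rule: if every premise fact holds, the conclusion holds.
Rule = Tuple[FrozenSet[str], str]
Proposer = Callable[[Set[str]], Optional[Set[str]]]


def deductive_closure(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Symbolic side: forward-chain until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


def solve(premises: Set[str], goal: str, rules: List[Rule],
          propose: Proposer, max_constructions: int = 5) -> Tuple[bool, List[Set[str]]]:
    """Alternate deduction with proposed auxiliary constructions until the goal is derived."""
    facts = deductive_closure(premises, rules)
    added: List[Set[str]] = []
    while goal not in facts and len(added) < max_constructions:
        construction = propose(facts)  # neural side (stubbed here)
        if construction is None:
            break  # the proposer has nothing left to suggest
        added.append(construction)
        facts = deductive_closure(facts | construction, rules)
    return goal in facts, added


def make_stub_proposer(candidates: List[Set[str]]) -> Proposer:
    """Hypothetical stand-in for the language model: yields untried candidates in order."""
    queue = list(candidates)

    def propose(facts: Set[str]) -> Optional[Set[str]]:
        while queue:
            candidate = queue.pop(0)
            if not candidate <= facts:  # skip constructions already present
                return candidate
        return None

    return propose


# Toy problem (hypothetical encoding): in triangle ABC with AB = AC, show the base
# angles are equal. A classic textbook proof introduces M, the midpoint of BC.
RULES: List[Rule] = [
    (frozenset({"AB=AC", "M midpoint of BC"}), "BM=CM"),
    (frozenset({"AB=AC", "BM=CM"}), "triangle ABM congruent ACM"),  # SSS with shared side AM
    (frozenset({"triangle ABM congruent ACM"}), "angle ABC = angle ACB"),
]
proposer = make_stub_proposer([{"D on line AB"}, {"M midpoint of BC"}])
solved, constructions = solve({"AB=AC"}, "angle ABC = angle ACB", RULES, proposer)
print(solved, constructions)
```

In this run the first suggestion (a point D on AB) does not help, so the loop asks for another; adding the midpoint M lets the engine derive the goal. The greedy, one-suggestion-at-a-time loop is chosen only to keep the sketch short; the system described in the paper explores multiple model suggestions rather than committing to them strictly in sequence.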

If you are familiar with Daniel Kahneman's book *Thinking, Fast and Slow*, you can draw a parallel: the language model acts like the fast, “intuitive” system that generates ideas, while the symbolic engine plays the slower, more deliberate and rational one.

By using synthetic data (100 million unique geometry problems and proofs generated automatically), the researchers could train AlphaGeometry's language model without relying on costly, scarce proofs written by human experts. This near-limitless supply of novel problems gave the system far more training material than human-written examples could provide.
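The general idea behind such synthetic data can be pictured with the toy Python sketch below: sample random premises, run a deduction engine forward to derive new facts, then trace each derived fact back to the premises and steps it actually depends on, yielding a (problem, proof) pair. This is a hedged illustration of the concept, not the paper's pipeline; the function names, string-valued facts and example rules are all hypothetical.

```python
import random
from typing import Dict, FrozenSet, List, Set, Tuple

# Hypothetical Horn-style rule: (premises, conclusion).
Rule = Tuple[FrozenSet[str], str]
Example = Tuple[FrozenSet[str], str, Tuple[str, ...]]


def forward_chain(premises: Set[str], rules: List[Rule]) -> Dict[str, FrozenSet[str]]:
    """Derive every reachable fact, recording the facts each one was derived from."""
    parents: Dict[str, FrozenSet[str]] = {p: frozenset() for p in premises}
    changed = True
    while changed:
        changed = False
        for body, head in rules:
            if head not in parents and all(b in parents for b in body):
                parents[head] = body
                changed = True
    return parents


def traceback(fact: str, parents: Dict[str, FrozenSet[str]]) -> Tuple[Set[str], List[str]]:
    """Walk back from `fact`; return the premises it needs and the proof steps in order."""
    used: Set[str] = set()
    steps: List[str] = []
    seen: Set[str] = set()

    def visit(f: str) -> None:
        if f in seen:
            return
        seen.add(f)
        if not parents[f]:  # an empty parent set marks an original premise
            used.add(f)
            return
        for p in sorted(parents[f]):
            visit(p)
        # Post-order: a step is emitted only after the facts it relies on.
        steps.append(" & ".join(sorted(parents[f])) + " => " + f)

    visit(fact)
    return used, steps


def generate_examples(pool: List[str], rules: List[Rule], n_samples: int,
                      seed: int = 0) -> Set[Example]:
    """Sample random premise sets and turn each derived fact into a (premises, goal, proof) triple."""
    rng = random.Random(seed)
    examples: Set[Example] = set()
    for _ in range(n_samples):
        premises = set(rng.sample(pool, k=rng.randint(1, len(pool))))
        parents = forward_chain(premises, rules)
        for fact, body in parents.items():
            if not body:
                continue  # original premises are inputs, not theorems
            used, steps = traceback(fact, parents)
            examples.add((frozenset(used), fact, tuple(steps)))  # the set removes duplicates
    return examples


# Toy data (hypothetical): isosceles-triangle facts plus one irrelevant premise.
TOY_RULES: List[Rule] = [
    (frozenset({"AB=AC", "M midpoint of BC"}), "BM=CM"),
    (frozenset({"AB=AC", "BM=CM"}), "triangle ABM congruent ACM"),
    (frozenset({"triangle ABM congruent ACM"}), "angle ABC = angle ACB"),
]
POOL = ["AB=AC", "M midpoint of BC", "P on circle O"]

for used, goal, proof in sorted(generate_examples(POOL, TOY_RULES, n_samples=20),
                                key=lambda e: e[1]):
    print(sorted(used), "=>", goal, f"({len(proof)} steps)")
```

Collecting examples in a set keeps only unique (premises, goal, proof) triples, and the traceback drops premises a proof never uses, so a sampled statement such as `P on circle O` does not end up in the isosceles-triangle proofs.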

AlphaGeometry's success in solving Olympiad-level geometry problems is more than an academic achievement. It shows that AI can engage in sophisticated mathematical reasoning, a skill long considered a bastion of human intelligence. And while its expertise currently centers on geometry, the methods could, in principle, be adapted to other mathematical fields. The system's ability to generate and solve complex problems autonomously could also pave the way for AI applications in fields ranging from engineering to theoretical research.

By open-sourcing AlphaGeometry's code and models, DeepMind aims to provide a starting point for follow-up projects, both inside and outside the company, that further amplify AI's reasoning capacity. Collaborative efforts between organizations could lead to future artificial general intelligence (AGI) systems with stronger foundations in science, logic and learning.

*The researchers point out that since each Olympiad features six problems, only two of which are typically focused on geometry, AlphaGeometry can only be applied to one-third of the problems at a given Olympiad. Nevertheless, its geometry capability alone makes it the first AI model in the world capable of passing the bronze medal threshold of the IMO in 2000 and 2015.