Meta today announced two major developments that aim to make computer vision models more capable while promoting responsible practices.

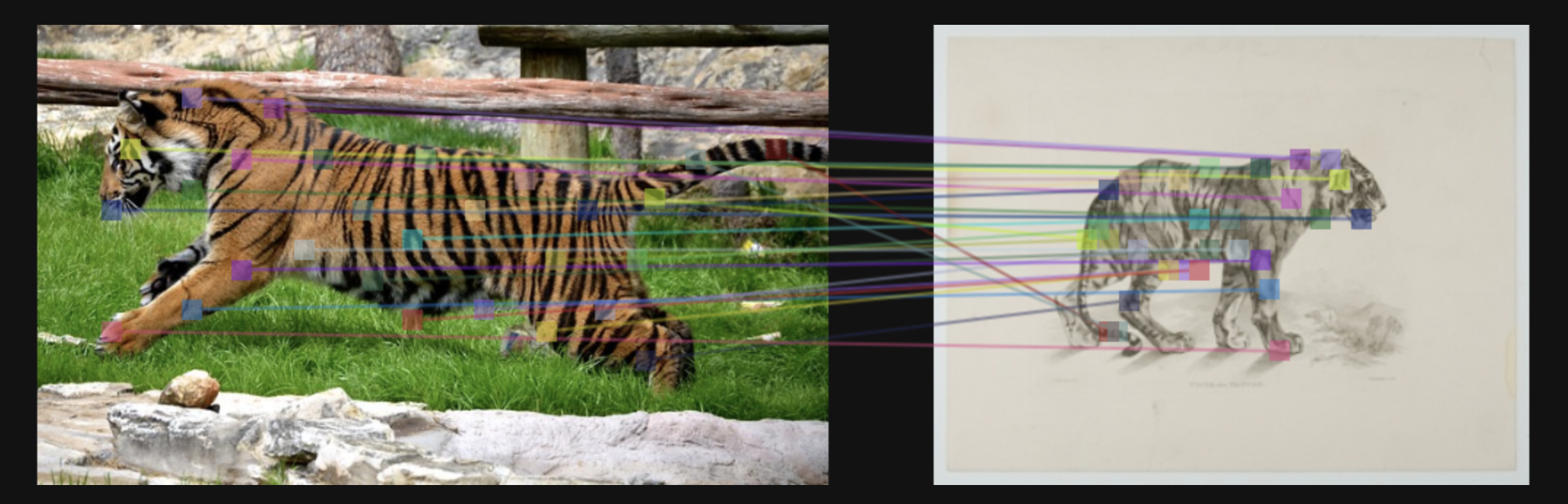

First, the company revealed that DINOv2, an open-sourced computer vision model for generating universal image features, will now be available under the permissive Apache 2.0 license. Accompanying this transition is the release of new DINOv2-based models for semantic segmentation and depth estimation.

According to Meta, this move to Apache 2.0 reflects their desire to encourage innovation and collaboration in computer vision research. With fewer licensing restrictions, DINOv2 can potentially be implemented in a wider range of applications across industries.

However, Meta also acknowledges the risks of rapidly advancing AI systems, such as potential harm to marginalized groups. This concern motivated the second announcement: the introduction of FACET.

FACET (FAirness in Computer Vision EvaluaTion) is a benchmark dataset for evaluating demographic biases in computer vision models. It contains over 30,000 annotated images with labels for physical attributes, demographics, and person-related classes.

While not usable for training, FACET enables standardized assessment of model fairness regarding factors like perceived gender, skin tone, and age. This is a significant move for Meta, underscoring the company's commitment to tackling systemic injustices and inequalities in technological advancements. Preliminary research using FACET already indicates disparities in state-of-the-art computer vision systems.

Meta also evaluated DINOv2 with FACET, analyzing potential biases in the model's performance across demographic groups. The initial findings suggest that while DINOv2 performs similarly to other models in most categories, it does display certain biases. This information is valuable to the development community because it shows where existing models can be made fairer and more equitable.

By open-sourcing FACET as an evaluation tool, Meta aims to promote fairness consciousness when developing new models. Researchers can identify biases in existing systems and gauge the impact of bias mitigation techniques.
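
As a rough illustration of what such a per-group evaluation can look like, the Python sketch below computes recall separately for each demographic group and reports the largest gap between groups. The record format, group names, and helper functions are hypothetical and chosen for illustration only; they are not FACET's actual schema or tooling.

```python
from collections import defaultdict


def recall_by_group(records):
    """Return recall per group for (group, true_label, predicted_label) records.

    Each record is a positive example of its true class, so a group's recall
    is the fraction of its records that the model predicted correctly.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, true_label, predicted_label in records:
        totals[group] += 1
        if predicted_label == true_label:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


def max_recall_gap(recalls):
    """Return the difference between the best and worst per-group recall."""
    return max(recalls.values()) - min(recalls.values())


# Hypothetical toy predictions for a single class ("doctor"); not real data.
records = [
    ("group_a", "doctor", "doctor"),
    ("group_a", "doctor", "doctor"),
    ("group_a", "doctor", "nurse"),
    ("group_a", "doctor", "doctor"),
    ("group_b", "doctor", "doctor"),
    ("group_b", "doctor", "nurse"),
    ("group_b", "doctor", "nurse"),
    ("group_b", "doctor", "doctor"),
]

recalls = recall_by_group(records)
gap = max_recall_gap(recalls)
print(recalls)  # {'group_a': 0.75, 'group_b': 0.5}
print(gap)      # 0.25
```

Running the same calculation before and after applying a mitigation technique gives a simple way to see whether the gap between groups has narrowed.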

It's also worth highlighting the importance of the open-source approach in the development and improvement of DINOv2. Earlier contributions from the community led to substantial improvements, which in turn have prompted Meta to release this newer, improved version. The open-source community's impact is undeniable, and it's an approach that Meta seems keen on nurturing.

In a world that’s becoming increasingly reliant on AI technology, companies have a responsibility to ensure that their products are as unbiased and fair as possible. Meta believes open collaboration is key to ensuring AI progresses responsibly. Releasing DINOv2 under a more permissive license and FACET for fairness benchmarking reflects the company's commitment to this approach. Though risks exist, Meta hopes to pioneer solutions that allow society to benefit equitably from advancing AI technology.