The Technology Innovation Institute (TII) has released Falcon 180B in two variants, a base model and a chat model. The release is a major milestone for openly available large language models (LLMs). With 180 billion parameters trained on 3.5 trillion tokens from TII's RefinedWeb dataset, Falcon 180B achieves state-of-the-art performance among open models and rivals major proprietary models such as Google's PaLM 2.

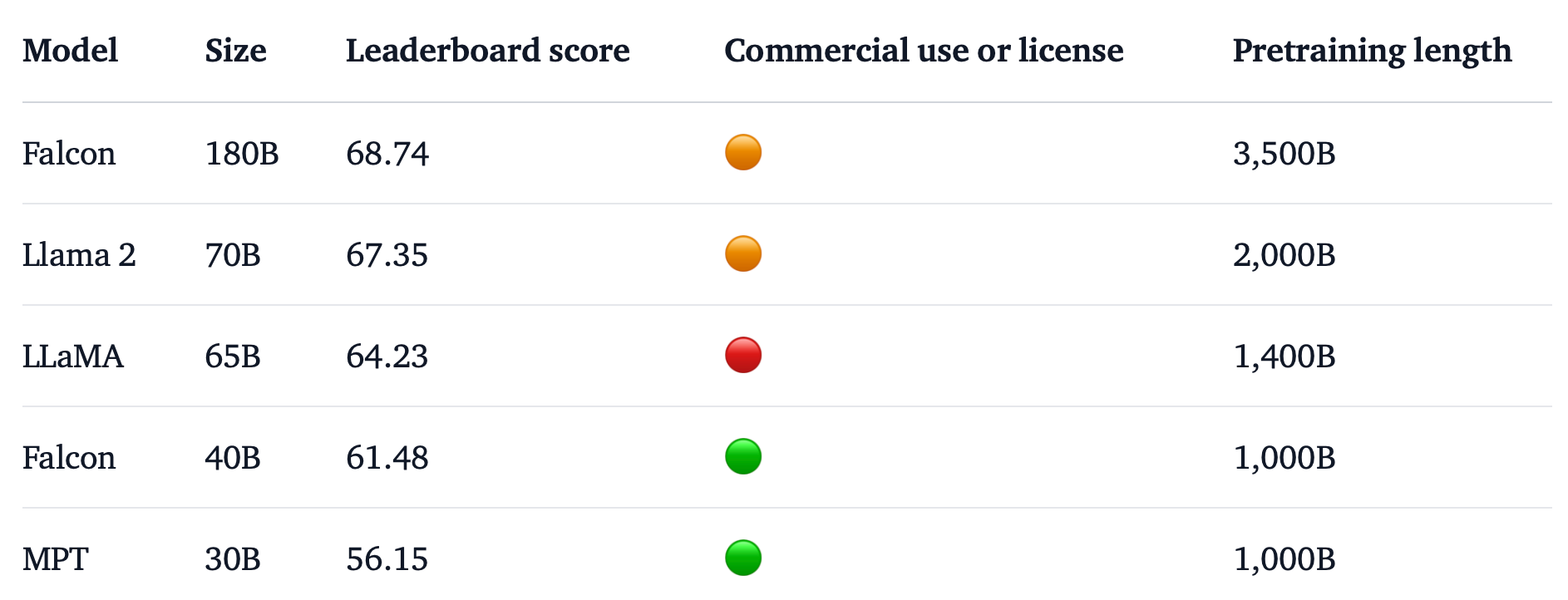

Falcon 180B's training run is the longest single-epoch pretraining of any open-source model. It tops the Hugging Face Open LLM Leaderboard as the highest-scoring open-source pre-trained LLM, surpassing models like Meta's LLaMA 2, and it performs on par with closed models like PaLM 2 Large despite being just half that model's size. While Falcon 180B ranks just behind OpenAI's GPT-4 in terms of performance, its open-access nature may offer a unique advantage for a variety of stakeholders, from AI researchers to professionals looking to harness AI tools.

For those looking for ready-to-use conversational capabilities, TII offers Falcon 180B-Chat, a derivative of Falcon 180B fine-tuned on a mixture of chat datasets. The chat variant features an architecture optimized for inference. However, it is not ideal for those looking to further fine-tune the model for specific instruction-following or conversational tasks. An interactive demo of the chat model is also available as a hosted playground.

Architecturally, Falcon 180B is a scaled-up version of its predecessor, Falcon 40B. It inherits foundational elements such as multi-query attention for improved scalability. The model was trained on up to 4096 GPUs simultaneously using Amazon SageMaker, for a total of approximately seven million GPU hours. Its training data is predominantly web data, which makes up about 85% of the total, with the remainder drawn from curated sources such as conversations and technical papers. This eclectic data mix likely contributes to the model's versatility across tasks.
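To put these figures in perspective, here is a rough back-of-envelope sketch in Python. It uses only the numbers quoted in this article. The helper names are hypothetical, and the assumption of 2 bytes per parameter (16-bit weights) is an illustration, not something TII has stated here. Because the GPU count is given as "up to 4096", the wall-clock figure is a lower bound rather than the actual training duration.

```python
# Figures quoted in the article (all approximate).
PARAMS = 180e9       # 180 billion parameters
TOKENS = 3.5e12      # 3.5 trillion training tokens
GPU_HOURS = 7e6      # "approximately seven million GPU hours"
MAX_GPUS = 4096      # "up to 4096 GPUs"
WEB_SHARE = 0.85     # "about 85%" web data


def min_wall_clock_days(gpu_hours: float, gpus: int) -> float:
    """Lower bound on training time if every GPU ran for the whole job."""
    return gpu_hours / gpus / 24


def tokens_per_parameter(tokens: float, params: float) -> float:
    """Ratio of training tokens to model parameters."""
    return tokens / params


def web_tokens(tokens: float, web_share: float) -> float:
    """Approximate number of training tokens that came from web data."""
    return tokens * web_share


def weight_memory_gb(params: float, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone, in decimal GB.

    bytes_per_param=2 is an assumption (16-bit weights); activations,
    KV cache, and optimizer state are not included.
    """
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    print(f"Min wall-clock time: {min_wall_clock_days(GPU_HOURS, MAX_GPUS):.1f} days")
    print(f"Tokens per parameter: {tokens_per_parameter(TOKENS, PARAMS):.1f}")
    print(f"Web tokens: {web_tokens(TOKENS, WEB_SHARE) / 1e12:.2f} trillion")
    print(f"Weights at 16-bit: {weight_memory_gb(PARAMS):.0f} GB")
```

Under these assumptions, the run would have taken at least about ten weeks of continuous training, and the weights alone would not fit on a single accelerator, which is one reason inference-oriented design choices such as multi-query attention matter at this scale.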

While Falcon 180B offers tantalizing prospects for commercial use, it's crucial to navigate its license carefully. Unlike earlier Falcon models, which were released under the Apache 2.0 license, Falcon 180B can be used commercially only under "very restrictive conditions," which notably rule out any hosting use. This limitation may affect cloud-based businesses, and anyone considering integrating Falcon 180B into their operations may want to seek legal advice first.

Still, Falcon 180B represents a remarkable achievement as both the largest and most capable openly available LLM. The release allows researchers worldwide to build upon Falcon's scaled-up architecture and advanced training. TII has noted that a research paper for the models will be published soon.