Figma is bringing Google’s generative AI deeper into its platform. The company says it will use Gemini 2.5 Flash, Gemini 2.0, and Imagen 4 to make image generation and edits snappier while keeping enterprise governance intact. In early tests, the “Make Image” feature saw latency drop by roughly 50%, according to the companies.

Key Points

- Gemini + Imagen 4 power Figma’s image gen/edit; early tests show ~50% faster output.

- Enterprise admins retain control over AI feature access and can opt out of content training.

- Imagen 4 supports watermarking/verification—useful for provenance in regulated workflows.

The move expands an existing Figma–Google Cloud relationship but puts Google’s latest models on the critical path of everyday design tasks: generate an on-brand hero image, cleanly remove a background, upscale a rough asset—all without leaving the canvas. Figma says the integration will be available to customers “with access to AI,” a nod to org-level controls already in place.

Why Gemini 2.5 Flash? Google positions it as a price-performance workhorse with large context handling, which is useful when prompts need to carry product specs, brand rules, or prior iterations. That matters at scale, where small latency savings across thousands of prompts add up to real productivity.

On image quality, Imagen 4 steps in with improved typography and photorealism and, importantly for enterprises, built-in watermarking/verification hooks. That helps teams track provenance and meet rising disclosure expectations without bolting on extra tools.

Data handling remains the litmus test. Figma’s admin settings allow organizations to enable AI features and choose whether their content contributes to model training, an explicit control many CIOs now ask about in procurement. If you’re running regulated workflows or handling sensitive creative work, those defaults and toggles matter more than any model name.

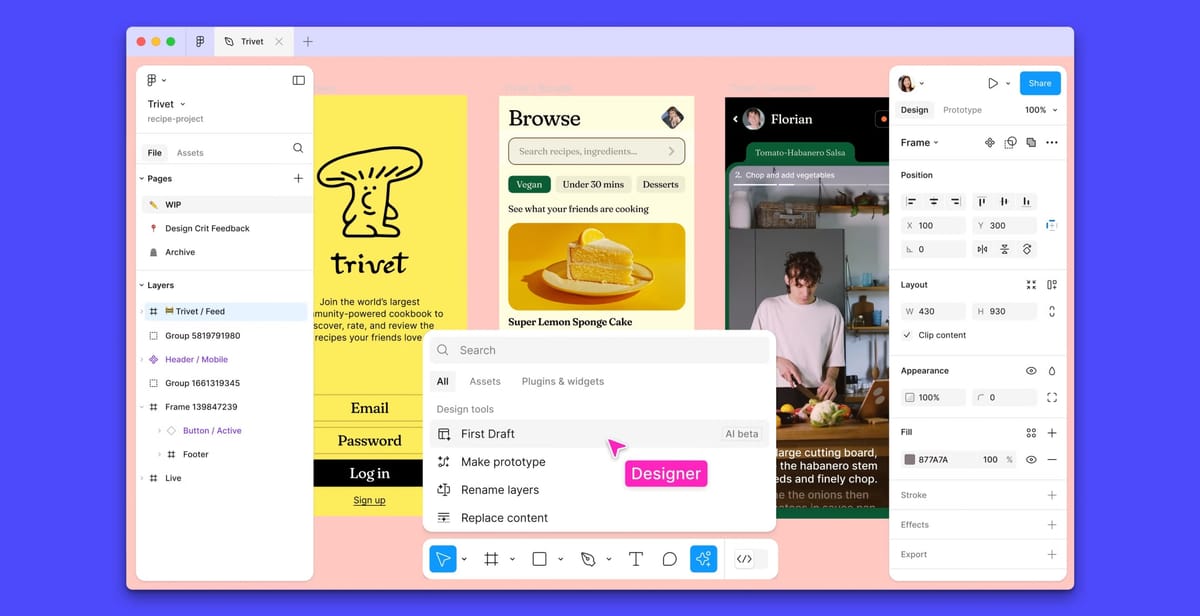

Figma has been steadily threading AI through its stack (rename layers, translate text, search, image tools) while learning from missteps. After pausing its “Make Designs” feature in 2024 over sameness concerns, the company’s recent updates have leaned into agent-safe ergonomics: exposing structured context to AI tools and agents rather than asking models to infer from pixels alone. Today’s Google tie-up fits that pattern, favoring faster, governed assistance over flashy one-click layouts.

For execs making tool decisions, the read is straightforward: this integration reduces time-to-asset inside Figma, aligns with enterprise governance, and slots into a Google Cloud stack many teams already use. Pricing specifics for AI usage still depend on Figma plan settings, but the trajectory is clear: designers and adjacent teams will increasingly expect high-fidelity image operations inside their existing workflow, not as a detour to a separate app.