Google has announced "Grounding with Google Search" for its Gemini models. The new feature, available in Google AI Studio and through the Gemini API starting today, enables AI applications to automatically pull in context from relevant search results when responding to queries.

Not only does it reduce hallucinations (inaccurate or fictitious outputs generated by the model), but developers can also expect richer responses, enhanced transparency with grounding sources, and search suggestions, all of which aim to increase the trustworthiness of AI output.

While AI models from OpenAI and Anthropic can be connected to web search, developers typically need to either use third-party tools or write custom code to enable this functionality. Google's integration marks the first time a frontier model provider has offered native web search grounding through its API. This will simplify development workflows and reduce the technical overhead for building web-aware AI products.

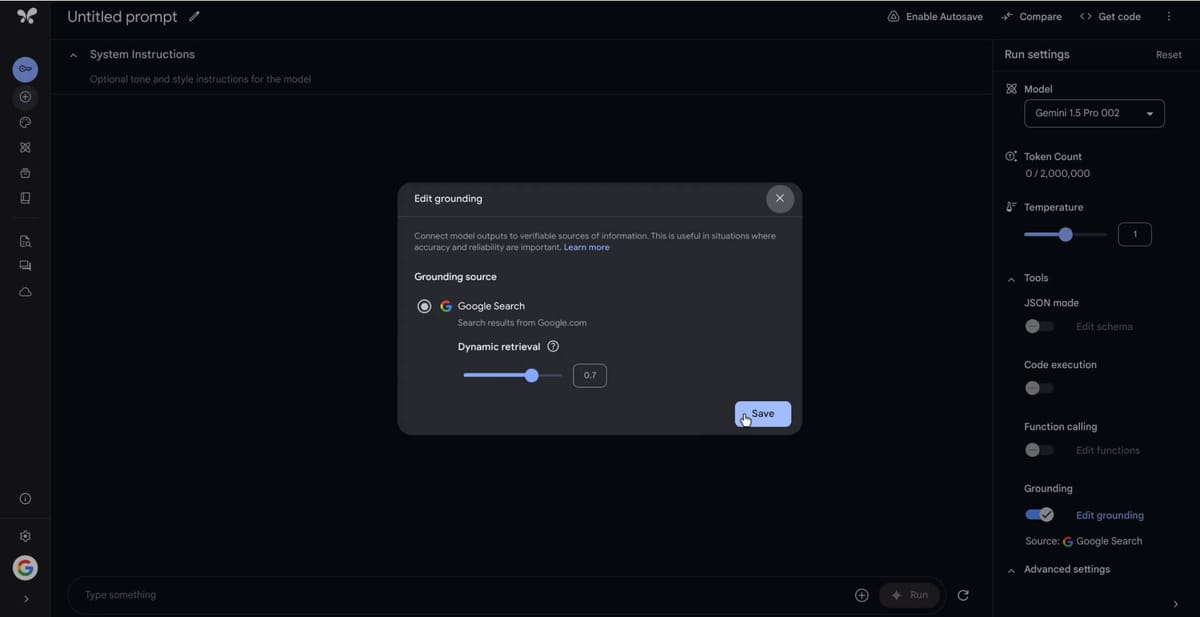

The new grounding capability works with all Gemini 1.5 models and can be enabled with a simple toggle in AI Studio or via an API call. When activated, the system uses Google Search to find relevant, up-to-date information for each query, helping ensure AI responses are current and accurate. Additionally, the system provides source attribution through inline citations and links to original content, making it easier for users to verify information and trace its origins.
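To make the "via an API call" option concrete, here is a minimal sketch of what a grounded request body might look like. The field names (`contents`, `tools`, `google_search_retrieval`) are assumptions based on the public Gemini API documentation as I understand it, not something stated in this article, and should be checked against the current API reference. The helper `build_grounded_request` is hypothetical, and the sketch only builds the JSON payload without sending it.

```python
import json


def build_grounded_request(prompt: str) -> dict:
    """Build a request body that asks for Google Search grounding.

    Assumption: grounding is requested by adding a search-retrieval tool
    to the request; the exact field names may differ in the live API.
    """
    return {
        # The user's query, wrapped in the content/parts structure.
        "contents": [{"parts": [{"text": prompt}]}],
        # Assumed tool entry that switches on search grounding.
        "tools": [{"google_search_retrieval": {}}],
    }


if __name__ == "__main__":
    body = build_grounded_request("Who won the most recent Emmy for best drama?")
    print(json.dumps(body, indent=2))
```

In a real application this payload would be sent to the model's generate-content endpoint with an API key; without the `tools` entry, the same request would be answered from the model's training data alone.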

In conversation with Logan Kilpatrick, Product Lead for Google AI Studio, and Shrestha Basu Mallick, Group Product Manager for Gemini API and AI Studio, it was clear that Google has big plans for this capability. "This is about putting more information into the hands of users," Kilpatrick explained. "We want developers to be able to trust what AI is doing for them and offer users the level of granular detail they need to validate their answers."

A key feature is the dynamic retrieval system, which lets developers control when search grounding is activated. Using a threshold value between 0 (always ground) and 1 (never ground), developers can fine-tune how selective the system should be about grounding a response with Google Search.
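The threshold behavior can be sketched as a simple comparison. The article does not describe how Google decides whether a query benefits from grounding, so this sketch assumes each query receives a score between 0 and 1 and that grounding fires when the score meets or exceeds the threshold. Both the `should_ground` function and the score itself are hypothetical illustrations, not Google's implementation.

```python
def should_ground(prediction_score: float, threshold: float) -> bool:
    """Decide whether to ground a query, under the assumptions above.

    prediction_score: hypothetical estimate in [0, 1] of how much the
        query would benefit from fresh search results.
    threshold: developer-chosen value in [0, 1]; 0 grounds every query,
        values near 1 ground almost none.
    """
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    if not 0.0 <= prediction_score <= 1.0:
        raise ValueError("prediction_score must be between 0 and 1")
    return prediction_score >= threshold


if __name__ == "__main__":
    # A query about recent events might score high; a math question low.
    for score in (0.05, 0.4, 0.9):
        print(score, should_ground(score, threshold=0.3))
```

Under this assumed rule, a threshold of 0 grounds everything, while a threshold of 1 grounds only a query with a perfect score, which in practice means grounding is effectively switched off.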

"Our aim is to allow developers to decide what makes the most sense for their applications. Certain developers might say their use case only requires grounding for recent fact queries," explains Mallick. "Others might want the richness of grounded answers more often, for example with research applications."

During a private demo of the new capabilities, Mallick showed that when asked about the latest Emmy winners, a model without grounding referred to outdated information from 2023, whereas the grounded model sourced and provided the accurate, current answer. She also showed off Google AI Studio's new Compare Mode, which lets developers view responses from different models side by side and evaluate the impact of grounding.

When asked, a Google representative clarified that the Grounding with Google Search integration excludes paid advertising content and only uses organic search results. They further explained that the feature supports the publisher ecosystem through Google's existing Google-Extended program with source attribution linking back to original content.

While the introduction of search grounding brings many benefits, it also comes with increased latency and additional costs. The added latency is largely due to the increased verbosity of grounded responses, which often include citations and richer context. Google is pricing the service at $35 per 1,000 grounded queries, in addition to standard token costs.
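To get a feel for the pricing, here is a rough estimate of the grounding surcharge at the $35 per 1,000 grounded queries rate quoted above (3.5 cents per grounded query). The function name, the monthly volume, and the share of queries that end up grounded are hypothetical inputs chosen for illustration, and token costs are left out because the article does not give them.

```python
GROUNDING_PRICE_USD_PER_1000 = 35  # rate quoted in the article


def grounding_surcharge(total_queries: int, grounded_fraction: float) -> float:
    """Estimate the grounding surcharge in USD, excluding token costs.

    total_queries: number of requests sent in the billing period.
    grounded_fraction: share of requests that actually use grounding,
        for example as a result of the dynamic retrieval threshold.
    """
    if total_queries < 0:
        raise ValueError("total_queries must be non-negative")
    if not 0.0 <= grounded_fraction <= 1.0:
        raise ValueError("grounded_fraction must be between 0 and 1")
    grounded_queries = round(total_queries * grounded_fraction)
    return grounded_queries * GROUNDING_PRICE_USD_PER_1000 / 1000


if __name__ == "__main__":
    # Hypothetical workload: 100,000 queries, a quarter of them grounded.
    print(f"${grounding_surcharge(100_000, 0.25):,.2f}")
```

The calculation also shows why the dynamic retrieval threshold matters for budgeting: lowering the share of grounded queries reduces the surcharge proportionally.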

With this release, Google is differentiating its AI ecosystem by enabling developers to easily tap into its decades-long expertise in search and its world-renowned knowledge graph. And by prioritizing key API enhancements, being responsive to developer needs, and offering unique innovations, the company is positioning its Gemini platform as a compelling choice for developers building the next generation of AI applications.