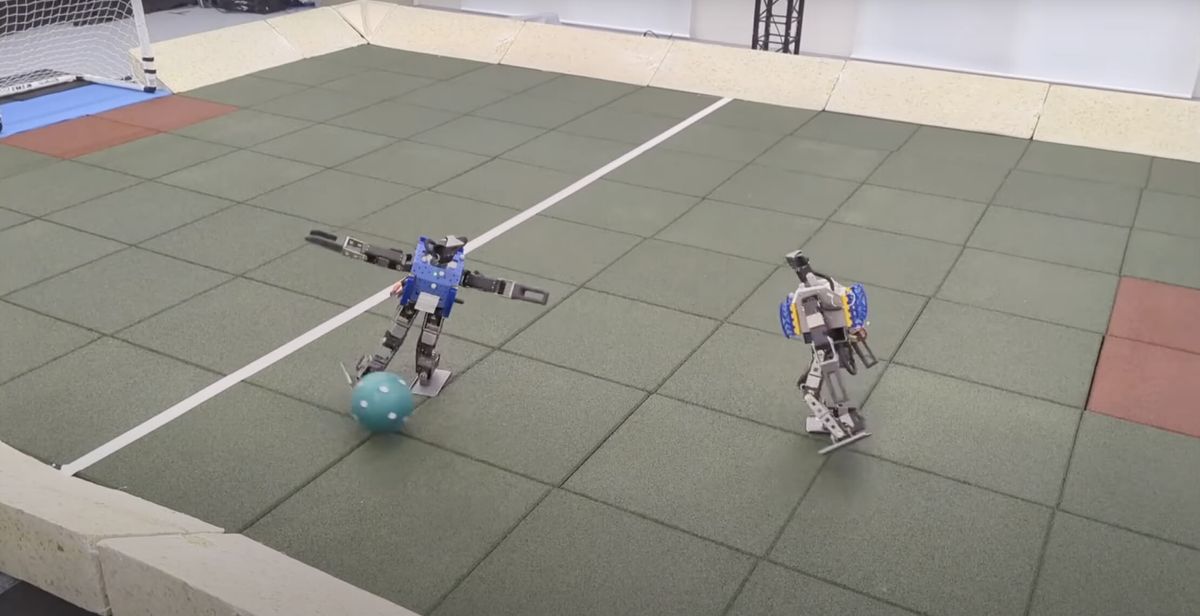

In a fascinating new research paper, DeepMind scientists report training low-cost humanoid robots to play 1v1 soccer. Using deep reinforcement learning, the team taught the robots agile skills and tactics, bringing us one step closer to robots that can perform complex tasks in the real world.

The work, from DeepMind's OP3 Soccer Team, shows how deep reinforcement learning can be used to train robots to play soccer. Training proceeded in two stages. In the first stage, the robots learned separate skills, such as getting up from the ground and scoring goals. In the second stage, these skills were combined into a single soccer-playing agent, which improved its tactics through self-play against previous versions of itself.
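
To make the self-play idea concrete, here is a minimal sketch of training against a pool of earlier versions of the agent. It is an illustration under stated assumptions, not the team's implementation: `SnapshotPool`, `train_with_self_play`, and `update_fn` are hypothetical names, policies are represented as plain dictionaries, and opponents are sampled uniformly, which may differ from the scheme used in the paper.

```python
import random
from dataclasses import dataclass, field


@dataclass
class SnapshotPool:
    """Stores past versions of the agent to use as opponents (hypothetical helper)."""

    snapshots: list = field(default_factory=list)

    def add(self, policy_params: dict) -> None:
        # Store a copy so later training updates cannot alter the snapshot.
        self.snapshots.append(dict(policy_params))

    def sample_opponent(self, rng: random.Random) -> dict:
        # Assumption: uniform sampling over all stored versions.
        return rng.choice(self.snapshots)


def train_with_self_play(initial_params, num_iterations, snapshot_every, update_fn, seed=0):
    """Run a self-play loop.

    update_fn(params, opponent) stands in for one reinforcement learning
    update computed from matches against the sampled opponent.
    """
    rng = random.Random(seed)
    pool = SnapshotPool()
    pool.add(initial_params)
    params = dict(initial_params)
    for step in range(1, num_iterations + 1):
        opponent = pool.sample_opponent(rng)
        params = update_fn(params, opponent)
        # Periodically freeze the current agent and add it to the pool.
        if step % snapshot_every == 0:
            pool.add(params)
    return params, pool
```

Playing against a mix of older versions, rather than only the latest one, is a common way to keep an agent from overfitting to a single opponent's habits.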

One of the most impressive aspects of this research is the successful zero-shot transfer: policies trained in simulation ran on the real robots without further training. This was achieved using a combination of system identification (tuning the simulator to match the physical robot) and light domain randomization, resulting in agile and dynamic behaviors that far exceeded the performance of scripted baseline controllers.
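
As a rough illustration of light domain randomization, the sketch below perturbs a few simulator parameters around nominal values at the start of each training episode. Everything here is an assumption made for the example: the parameter names, the nominal numbers (standing in for the output of system identification), and the 10% spread are made up and are not taken from the paper.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class PhysicsParams:
    """Illustrative simulator parameters (hypothetical, not the paper's list)."""

    floor_friction: float
    joint_damping_scale: float
    control_latency_s: float


# Made-up nominal values, standing in for what system identification would provide.
NOMINAL = PhysicsParams(floor_friction=0.8, joint_damping_scale=1.0, control_latency_s=0.02)


def randomize(nominal: PhysicsParams, rng: random.Random, spread: float = 0.1) -> PhysicsParams:
    """Return a copy of the nominal parameters, each scaled by a random factor.

    Each factor is drawn uniformly from [1 - spread, 1 + spread], so a small
    spread keeps the randomization "light".
    """

    def jitter(value: float) -> float:
        return value * rng.uniform(1.0 - spread, 1.0 + spread)

    return PhysicsParams(
        floor_friction=jitter(nominal.floor_friction),
        joint_damping_scale=jitter(nominal.joint_damping_scale),
        control_latency_s=jitter(nominal.control_latency_s),
    )


# Usage: draw fresh parameters for each simulated episode.
rng = random.Random(0)
episode_params = [randomize(NOMINAL, rng) for _ in range(3)]
```

The intent is that a policy which performs well across many slightly different simulated robots is less likely to depend on quirks of any one simulation, and so is more likely to work on the physical robot.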

This progress could lead to the development of advanced humanoid robots capable of performing complex tasks across various industries, such as assisting in disaster relief efforts or navigating intricate environments for search and rescue missions. Additionally, the study explores the potential of multi-agent settings, where robots could collaborate and coordinate more effectively.

While the research is promising, the team acknowledges some limitations. They did not use real-world data for transfer, and they worked only with a small robot. However, they believe their proposed method could be applied to more complex settings and larger robots.

The research was inspired by RoboCup, but the environment and task are substantially simpler than the full RoboCup problem. The team sees training teams of two or more players as a natural next step. In preliminary experiments with 2v2 soccer, the agents showed promising results, learning division of labor and simple forms of collaboration.

Another exciting direction for future research is playing soccer using raw vision, with robots relying solely on on-board sensors. Though this approach presents additional challenges, the researchers achieved initial success in training vision-based agents using on-board RGB cameras and proprioception.

Overall, the research from DeepMind's OP3 Soccer Team represents a significant and exciting advancement in robotics and deep reinforcement learning. Though there is room for improvement in terms of stability and perception, the results are promising, and similar methods could eventually be applied to larger robots for solving practical real-world tasks. As researchers continue to refine and expand these techniques, we can expect to see even more advanced and versatile humanoid robots in the future.