Rapid advances in AI capabilities are raising important questions about how to ensure these technologies are developed responsibly, safely, and for the benefit of all. A new paper from Google DeepMind, written in collaboration with OpenAI and leading academic institutions, including the University of Oxford, Université de Montréal, University of Toronto, Columbia University, Harvard University, and Stanford University, proposes and explores international institutions to manage the opportunities and risks posed by advanced AI systems.

The paper argues that while domestic regulation will be critical, the need for shared international standards is increasing. It attributes this primarily to the risks posed by unchecked AI, the potential for AI misuse, and the geopolitical implications of AI power.

On the opportunity side, international cooperation could promote equitable access to AI through technology transfer and capacity building, helping underserved communities benefit from the prosperity gains AI enables. It could also harmonize divergent national regulations to reduce friction in AI innovation and deployment.

However, certain AI capabilities like automated chemistry, synthetic media generation, and cybersecurity tools could pose global risks if misused by malicious actors or if deployed unsafely in high-stakes domains. Global AI governance could establish safety protocols and standards, and incentivize their responsible implementation across states.

Four Potential Institutional Models

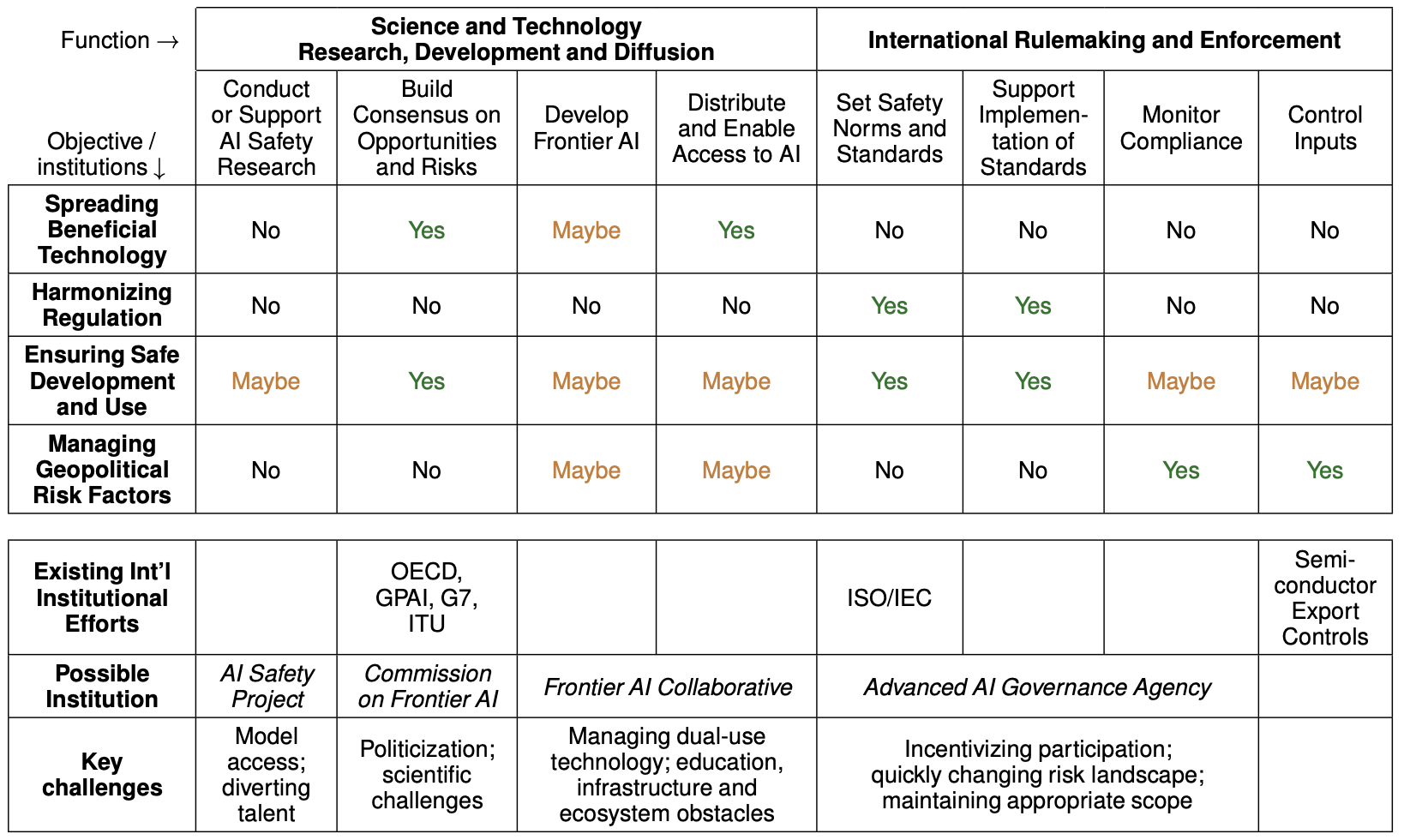

The consortium led by DeepMind proposes four possible institutional models to address these challenges, drawing parallels with existing international organizations to contextualize their approach.

- A Commission on Frontier AI to build consensus on AI's trajectory and associated risks through rigorous assessment. This could inform preparedness and collective action. Avoiding politicization and keeping pace with AI's speed of progress are key hurdles.

- An Advanced AI Governance Organization to develop international risk-based standards for responsible development and use of advanced systems. It could support domestic implementation and compliance monitoring. But effectively scoping governance and gaining broad participation will be challenging as AI systems rapidly advance.

- A Frontier AI Collaborative to increase access to cutting-edge AI, especially for underserved societies. It would develop and distribute beneficial technologies. Deeply entrenched barriers make it unclear whether this is the most impactful model for development. Managing dual-use risks poses a further challenge.

- An AI Safety Project to accelerate technical research into AI safety at scale. It would provide resources and enable collaboration between experts. However, optimally allocating talent and obtaining sensitive model access from developers will require careful balancing.

Challenges and Open Questions

The consortium acknowledges that many important open questions remain about the viability of these institutional models. The proposed models must navigate a range of operational challenges, including funding, jurisdiction, representation, cooperation, and potential conflicts of interest. Effective institutions will need to balance legitimacy, nimbleness, and incentives for broad participation.

Funding: Identifying consistent funding sources for these institutions could prove complex. The organizations would require substantial financial resources to attract top-tier AI experts and to conduct safety research and policy assessments. The question remains: will countries and businesses be willing to contribute enough resources?

Jurisdiction and Cooperation: Cross-border governance routinely runs into issues of jurisdiction. Not all nations share the same views on how AI should be used, and without universal acceptance, enforcement could become a severe problem. This leads to a critical question: how will these institutions ensure cooperation and compliance among nations with differing agendas?

Representation: Ensuring global representation in AI governance is crucial. The disproportionate influence of wealthier countries and big tech companies could result in a skewing of regulations towards their interests. How will these institutions ensure fair representation and prevent dominance by a select few?

Conflicts of Interest: As these institutions will likely include industry stakeholders, conflicts of interest could arise. Will these institutions adequately mitigate potential bias or conflicting interests between industry players and the public good?

Moving the Conversation Forward

This paper makes a valuable contribution in broadly mapping potential structures for global AI governance and grounding them in lessons from existing institutions. The proposed models provide helpful starting points for discussions on what international coordination could look like in this domain.

However, the depth and breadth of the task at hand should be acknowledged. The paper, although comprehensive, was not meant to cover every aspect of operationalizing these models or to delve deeply into the socio-economic implications of AI. Instead, it serves to advance critical conversations around these topics. These areas require further investigation and dialogue beyond what any single paper could provide, which underscores that building effective AI governance is an ongoing, collective effort.

Continuing the conversation around global AI governance requires collaborative, transparent dialogue. Governments, industry stakeholders, academics, and civil society must be engaged in discussions to build upon these foundational models.

Expanding Research: Further research is needed to delve into the specifics of each proposed model. Continued comparative analysis between the proposed institutions and their real-world analogues could help predict potential roadblocks and success factors.

International Dialogue: Dialogue between nations is essential to encourage cooperation and mutual agreement on AI standards. This could begin with multilateral meetings and forums dedicated to discussing AI governance.

Public Engagement: AI is not just a concern for governments and corporations. Public engagement can democratize the discourse and generate more balanced and inclusive solutions. Town halls, public forums, and open consultations could be effective ways to achieve this.

Given advanced AI's potential global impacts, this research provides a valuable starting point for constructing AI governance bodies. However, the conversation cannot end here. With AI development accelerating, establishing global AI governance is more urgent than ever. As we move forward, inclusivity, transparency, and collaboration should guide our steps in navigating the challenging yet exciting journey of AI governance. Striking the right institutional balance will help steer AI toward equitable and stable progress.