Google DeepMind has unveiled an AI system that achieved silver-medal-level performance at this year's International Mathematical Olympiad (IMO). The hybrid system combines two specialized models, AlphaProof and AlphaGeometry 2. It solved four of the six problems in the prestigious competition, a new milestone for AI's mathematical reasoning capabilities.

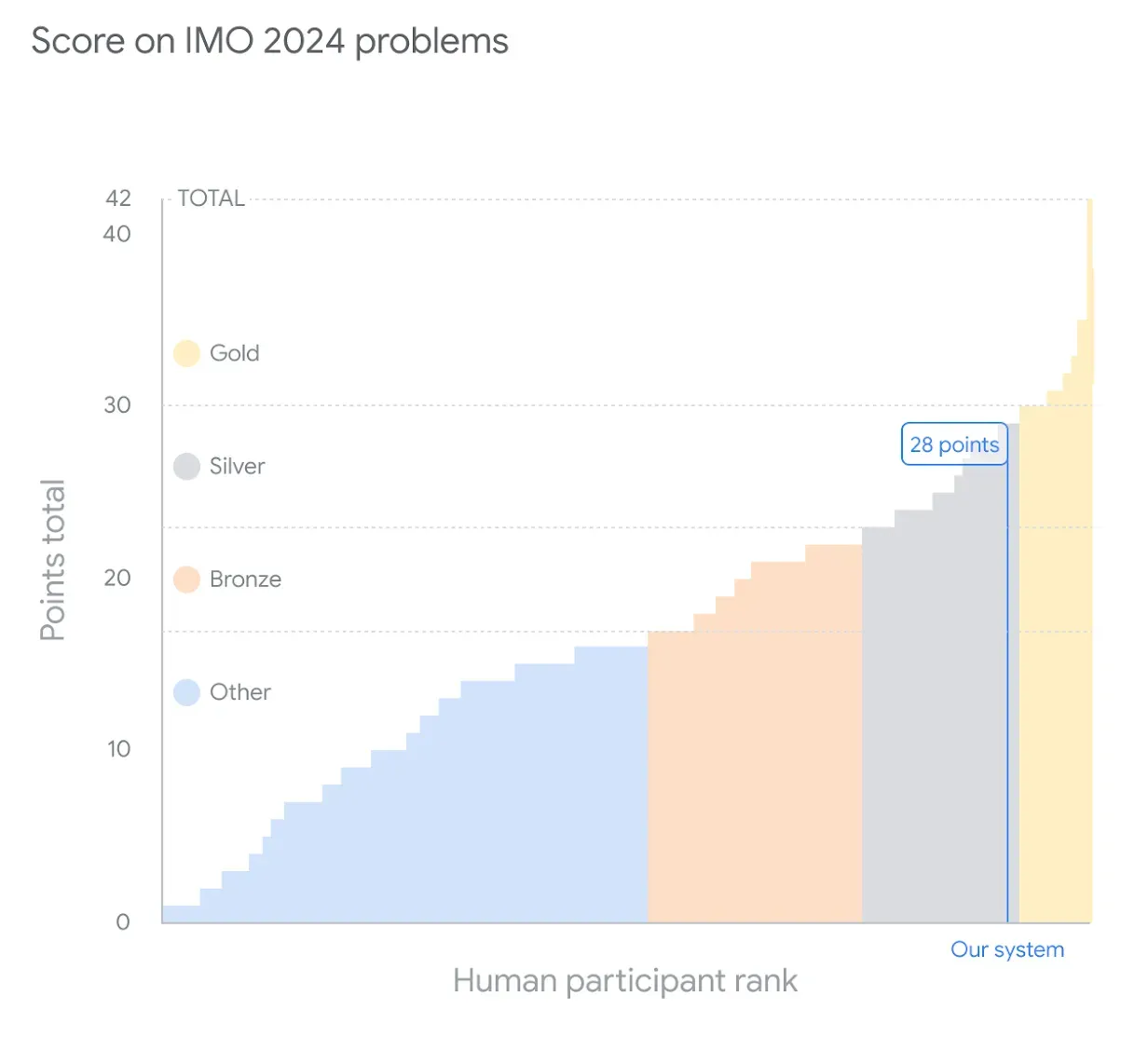

The IMO, widely regarded as the world's most challenging math competition for pre-college students, has become a benchmark for assessing AI's advanced problem-solving skills. Google DeepMind's system earned 28 of a possible 42 points, one point short of the 29-point gold medal threshold, which only 58 of this year's 609 human contestants reached. (DeepMind has published the system's full solutions.)
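Each of the six IMO problems is worth 7 points, and the system earned a perfect score on every problem it solved, so its total follows directly from the count of solved problems:

```latex
\text{score} = 4 \times 7 = 28, \qquad \text{maximum} = 6 \times 7 = 42, \qquad 28 < 29 = \text{gold threshold}
```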

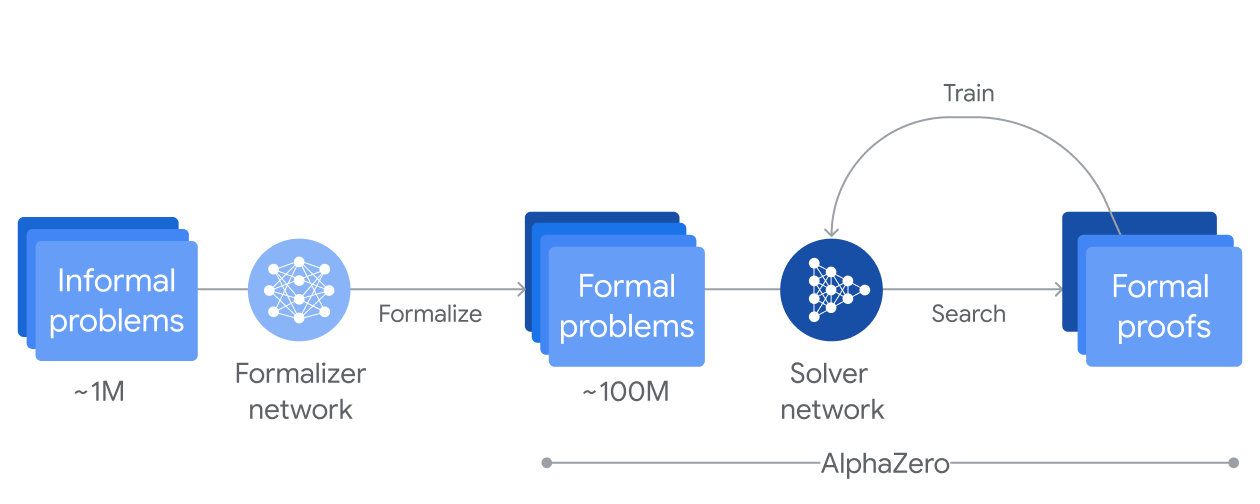

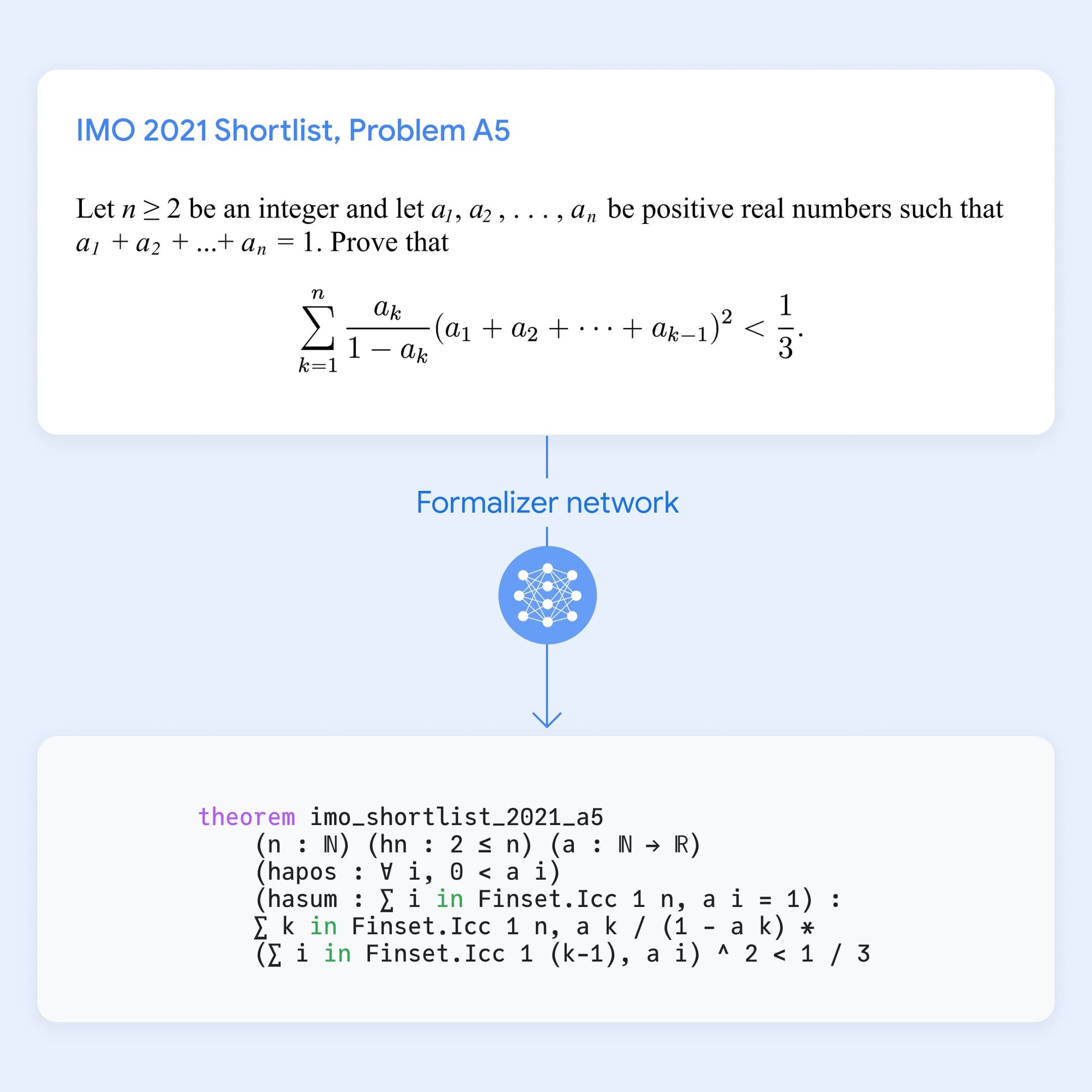

At the heart of this achievement is AlphaProof, a new system that trains itself to prove mathematical statements in the formal language Lean. It couples a pre-trained language model with the AlphaZero reinforcement learning algorithm, the same approach that mastered complex games like chess and Go. This lets AlphaProof treat proving as a game: given a problem, it searches through possible proof steps as if navigating a vast decision tree, and each proof it finds and verifies is used to further train its language model. Previous approaches were limited by the scarcity of human-written formal proofs; AlphaProof works around this by bridging natural and formal languages. A fine-tuned version of Google's Gemini model translates natural-language problems into formal statements, creating a large library of training material.
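To make the idea of "searching through possible proof steps as if navigating a decision tree" more concrete, here is a toy sketch. It is not AlphaProof and makes several loud assumptions: proof states are plain integers, the two "tactics" (`halve`, `decrement`) are hypothetical, a hand-written `score` function stands in for a learned model, and it uses simple best-first search rather than AlphaZero's Monte Carlo tree search. A state of 0 counts as a closed (proved) goal.

```python
import heapq
from typing import Callable, Dict, List, Optional, Tuple

# Toy "proof state" (hypothetical): an integer; 0 means the goal is closed.
State = int

# Hypothetical "tactics": each maps a proof state to a new proof state.
TACTICS: Dict[str, Callable[[State], State]] = {
    "halve": lambda s: s // 2 if s % 2 == 0 else s,  # only applies to even states
    "decrement": lambda s: s - 1,
}


def score(state: State) -> float:
    """Stand-in for a learned value estimate: smaller states look more promising."""
    return float(state)


def search(start: State, max_expansions: int = 1000) -> Optional[List[str]]:
    """Best-first search over tactic sequences until the goal (state 0) is closed.

    Returns the list of tactic names that closes the goal, or None if the
    expansion budget runs out first.
    """
    # Frontier entries: (priority, tie-breaker, state, tactics applied so far).
    frontier: List[Tuple[float, int, State, List[str]]] = [(score(start), 0, start, [])]
    seen = {start}
    counter = 1
    while frontier and max_expansions > 0:
        _, _, state, path = heapq.heappop(frontier)
        max_expansions -= 1
        if state == 0:  # the "verifier" check: goal closed
            return path
        # Expand the node: try every tactic to get its children in the tree.
        for name, tactic in TACTICS.items():
            nxt = tactic(state)
            if nxt < 0 or nxt in seen:
                continue
            seen.add(nxt)
            heapq.heappush(frontier, (score(nxt), counter, nxt, path + [name]))
            counter += 1
    return None
```

For example, `search(10)` returns `['halve', 'decrement', 'halve', 'halve', 'decrement']` (10 → 5 → 4 → 2 → 1 → 0). In a real prover the states would be formal goals, the moves would be proof steps checked by Lean, and the scoring would come from the trained model rather than a fixed rule.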

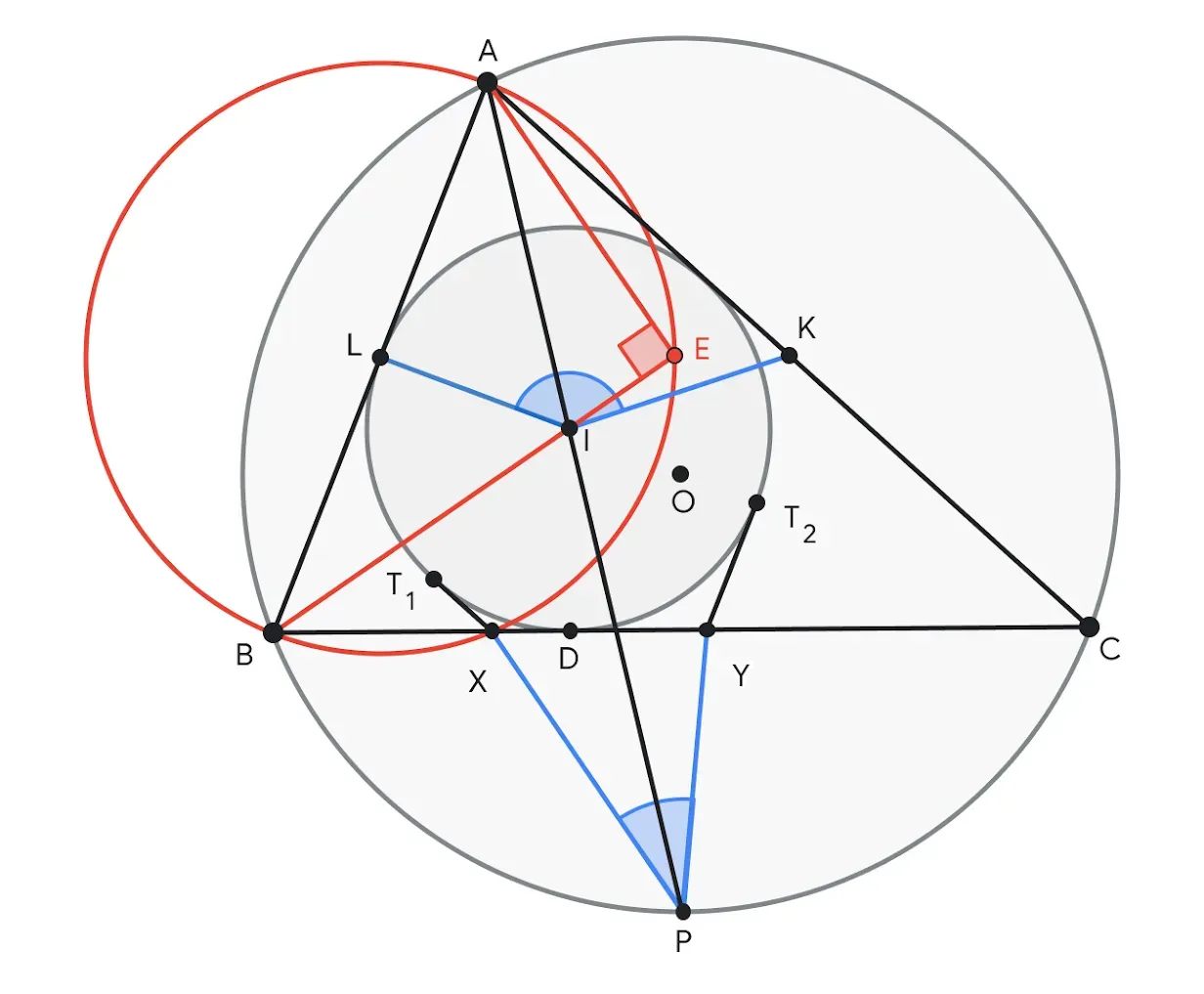

Complementing AlphaProof is AlphaGeometry 2, an upgraded version of DeepMind's geometry solver. It solved the competition's geometry problem in just 19 seconds after receiving its formalization. Its improved performance stems from an order of magnitude more synthetic training data and a new knowledge-sharing mechanism that lets it combine different search trees to tackle more complex problems. Before this year's competition, AlphaGeometry 2 could solve 83% of all historical IMO geometry problems from the past 25 years, up from its predecessor's 53%.

The problems were manually translated into formal mathematical language before being given to the AI systems. Whereas human contestants get two sessions of 4.5 hours each, the AI systems solved one problem within minutes and took up to three days to solve the others.
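For the problems AlphaProof handled, the formal language is Lean. As a toy illustration only (not an IMO problem, and not taken from DeepMind's formalizations), here is how an informal statement lines up with a Lean 4 formal statement and proof; the theorem name `add_comm_example` is hypothetical, and the proof simply reuses the core library lemma `Nat.add_comm`.

```lean
-- Informal statement: "For all natural numbers a and b, a + b = b + a."
-- Formal statement (the part a translation step produces):
theorem add_comm_example (a b : Nat) : a + b = b + a :=
  -- Proof (the part a prover must find); a core lemma closes it here.
  Nat.add_comm a b
```

Once a statement is written this way, Lean can mechanically check any proposed proof, which is what makes automated search over proof steps verifiable.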

Google DeepMind's success at the IMO underscores the rapid progress in AI's ability to tackle advanced reasoning tasks. As these systems continue to evolve, they hold the potential to accelerate scientific discovery and push the boundaries of human knowledge.

Google DeepMind plans to release more technical details on AlphaProof soon and continues to explore multiple AI approaches for advancing mathematical reasoning.