Google has unveiled a trio of AI advancements, expanding its Gemma 2 family with a potent small-scale model and introducing new tools for safety and transparency.

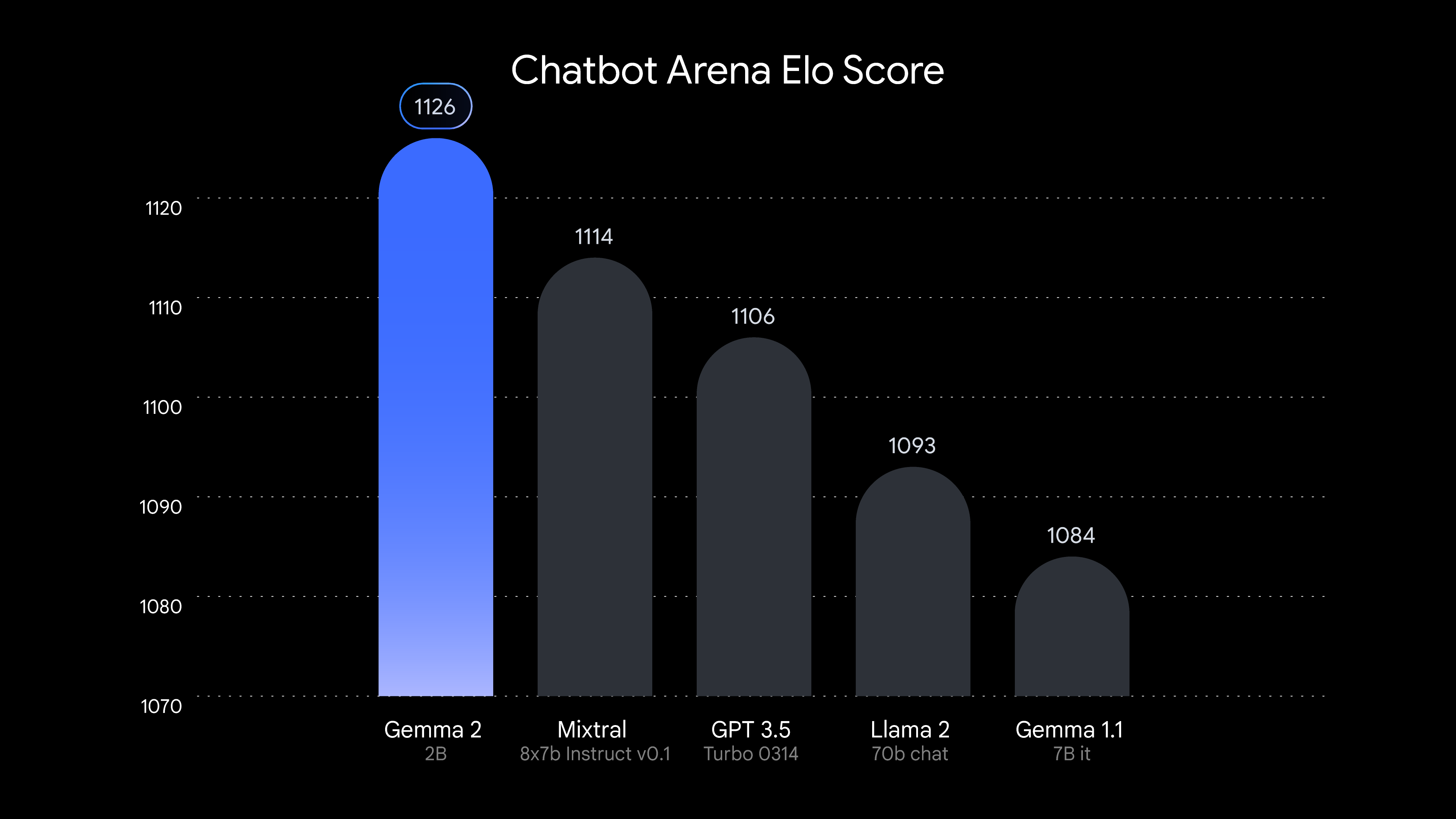

The star of the show is Gemma 2 2B, a new 2-billion-parameter model that punches well above its weight class. Despite its compact size, it outperforms much larger models such as Mixtral, GPT-3.5, and Llama 2 70B on conversational tasks in the LMSYS Chatbot Arena leaderboard.

Gemma 2 2B's efficiency is its calling card. The model runs smoothly across a spectrum of hardware, from edge devices and laptops to cloud deployments. Google has optimized it for NVIDIA's TensorRT-LLM library, targeting everything from beefy data centers to modest PCs.

For developers, Gemma 2 2B integrates seamlessly with popular frameworks like Keras, JAX, and Hugging Face. It's even available on Google Colab's free tier, democratizing access to cutting-edge AI.
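As a rough illustration of the Hugging Face route, here is a minimal sketch that loads the model with the `transformers` library and generates one reply. It assumes the instruction-tuned checkpoint is published under the ID `google/gemma-2-2b-it`, that you have accepted the model's license on Hugging Face and are logged in, and that `torch` and `transformers` are installed. The helper names `build_chat` and `generate_reply` are made up for this example.

```python
def build_chat(user_prompt: str) -> list[dict]:
    """Wrap a single user prompt in the chat-message format used by chat templates."""
    return [{"role": "user", "content": user_prompt}]


def generate_reply(
    user_prompt: str,
    model_id: str = "google/gemma-2-2b-it",  # assumed checkpoint ID
    max_new_tokens: int = 128,
) -> str:
    """Download the model (several GB) and generate one reply on the default device."""
    # Imported lazily so the helper above can be used without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.bfloat16)

    # Apply the model's chat template and tokenize in one step.
    inputs = tokenizer.apply_chat_template(
        build_chat(user_prompt),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    )
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Keep only the newly generated tokens, not the echoed prompt.
    new_tokens = outputs[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


if __name__ == "__main__":
    print(generate_reply("Explain what a sparse autoencoder is in two sentences."))
```

On a machine with a GPU, moving the model and inputs to that device should speed things up considerably; the sketch stays on the default device to keep it short.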

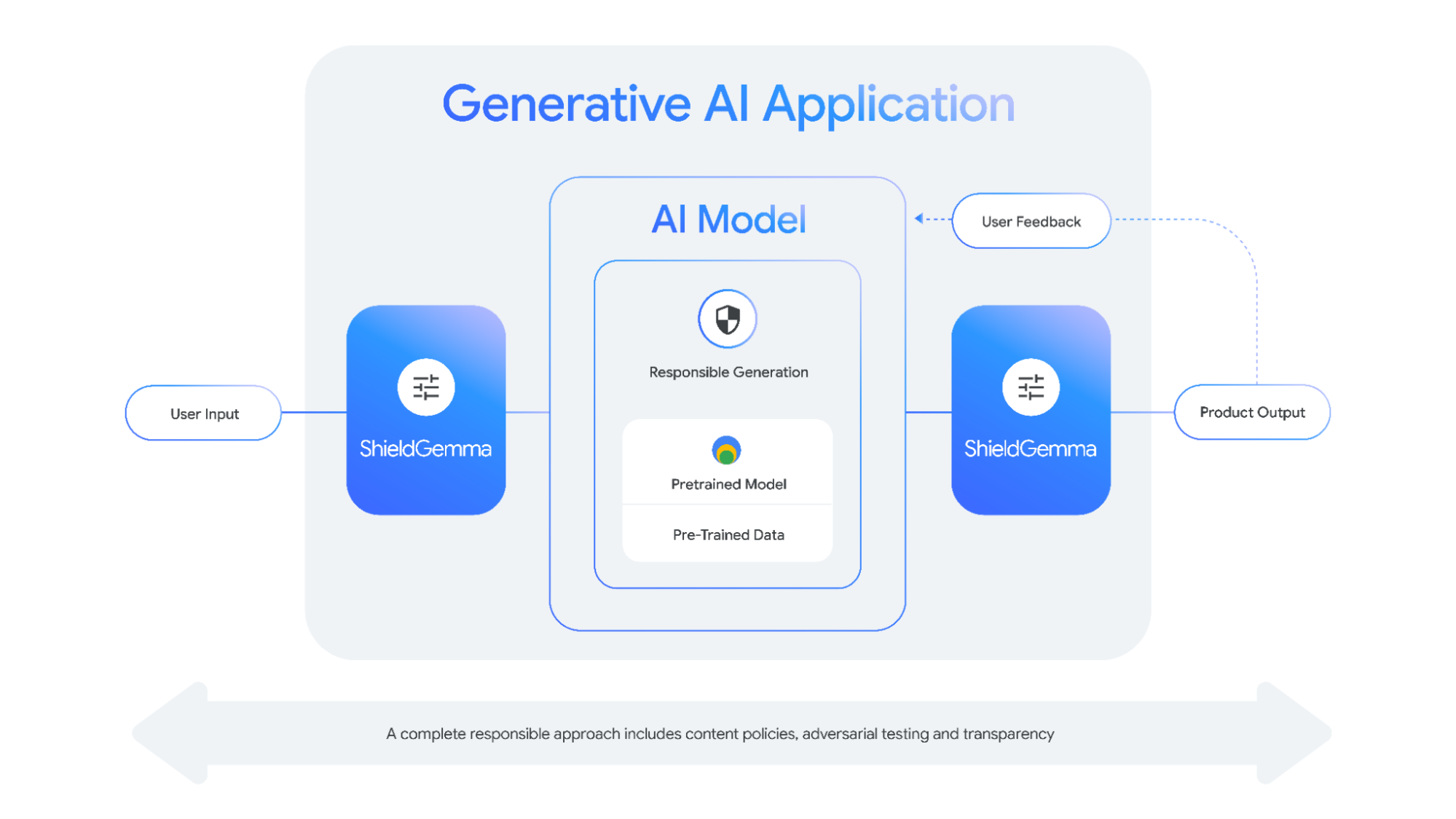

But raw power isn't Google's only focus. The tech giant is doubling down on responsible AI with ShieldGemma, a suite of safety classifiers built on Gemma 2. These tools can detect and filter harmful content in both model inputs and outputs, tackling issues like hate speech, harassment, and explicit material.

ShieldGemma comes in various sizes, offering flexibility for different use cases. The 2B version targets real-time classification, while larger 9B and 27B variants provide higher accuracy for less time-sensitive tasks.
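To make the filtering idea concrete, here is a small sketch of how a safety classifier's output might be turned into a block-or-allow decision. It assumes a setup in which the classifier is asked whether a piece of text violates a policy and its scores (logits) for answering "Yes" and "No" are compared. The function names, the threshold, and the example logits are all hypothetical and are not taken from Google's release.

```python
import math


def violation_probability(yes_logit: float, no_logit: float) -> float:
    """Softmax over the two answer logits; returns P(answer is 'Yes')."""
    # Subtract the max for numerical stability before exponentiating.
    m = max(yes_logit, no_logit)
    e_yes = math.exp(yes_logit - m)
    e_no = math.exp(no_logit - m)
    return e_yes / (e_yes + e_no)


def should_block(yes_logit: float, no_logit: float, threshold: float = 0.5) -> bool:
    """Block the text when the violation probability reaches the threshold."""
    return violation_probability(yes_logit, no_logit) >= threshold


if __name__ == "__main__":
    # Hypothetical logits for a user prompt (input) and a model reply (output).
    checks = {"user prompt": (2.0, -1.0), "model reply": (-3.0, 1.0)}
    for name, (yes, no) in checks.items():
        p = violation_probability(yes, no)
        verdict = "block" if should_block(yes, no) else "allow"
        print(f"{name}: p(violation)={p:.3f} -> {verdict}")
```

The same check can be run twice per exchange, once on the user's input and once on the model's output. A stricter application could lower the threshold at the cost of more false positives.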

Rounding out the announcements is Gemma Scope, a set of tools designed to crack open the AI black box. This suite of over 400 sparse autoencoders allows researchers to examine Gemma 2's inner workings, potentially leading to more interpretable and accountable AI systems.
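For readers unfamiliar with the term, a sparse autoencoder takes a model's internal activation vector, expands it into a much wider set of features of which only a few are active, and then reconstructs the original vector from them. The NumPy sketch below shows that forward pass with a JumpReLU activation, the variant Gemma Scope is built around. The dimensions, random weights, and threshold value are placeholders chosen for illustration, so the output has no interpretive meaning; real autoencoders are trained on actual model activations.

```python
import numpy as np


def jump_relu(pre_acts: np.ndarray, threshold: np.ndarray) -> np.ndarray:
    """Keep a value only if it exceeds its threshold; otherwise output zero."""
    return np.where(pre_acts > threshold, pre_acts, 0.0)


class SparseAutoencoder:
    """Untrained toy SAE: encode activations into wide sparse features, then decode."""

    def __init__(self, d_model: int, d_sae: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        # Random placeholder weights; a real SAE learns these from model activations.
        self.W_enc = rng.normal(0.0, 0.1, size=(d_model, d_sae))
        self.b_enc = np.zeros(d_sae)
        self.W_dec = rng.normal(0.0, 0.1, size=(d_sae, d_model))
        self.b_dec = np.zeros(d_model)
        self.threshold = np.full(d_sae, 0.1)  # per-feature threshold (placeholder)

    def encode(self, x: np.ndarray) -> np.ndarray:
        return jump_relu(x @ self.W_enc + self.b_enc, self.threshold)

    def decode(self, features: np.ndarray) -> np.ndarray:
        return features @ self.W_dec + self.b_dec


if __name__ == "__main__":
    sae = SparseAutoencoder(d_model=16, d_sae=64)
    activations = np.random.default_rng(1).normal(size=(4, 16))  # stand-in for model activations
    features = sae.encode(activations)
    reconstruction = sae.decode(features)
    print("active features per example:", (features != 0).sum(axis=1))
    print("reconstruction shape:", reconstruction.shape)
```

Researchers study which features fire on which inputs; the hope is that individual features line up with human-understandable concepts.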

Developers and researchers can access these new tools starting today, with model weights available through platforms such as Hugging Face.