Google is rolling out Gemini Embedding (text-embedding-large-exp-03-07), a new experimental text embedding model built on its Gemini models and aimed at improving search, retrieval, and classification. The model outperforms its predecessor, text-embedding-004, and leads the Massive Text Embedding Benchmark (MTEB) Multilingual leaderboard.

Key Points:

- Supports 8K token input length and 3K-dimensional output, allowing larger text chunks and richer embeddings.

- Now works in over 100 languages, doubling the previous coverage.

- Available now through the Gemini API with limited capacity; a full release is planned for later.

A More Capable Embedding Model

Text embeddings are used in AI applications to represent words, phrases, and sentences as numerical vectors, allowing models to understand and compare textual data effectively. Embedding models power semantic search, recommendation engines, retrieval-augmented generation (RAG), and classification tasks by enabling AI systems to find related concepts rather than relying on exact keyword matches.
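To make the idea of "comparing" vectors concrete, here is a minimal sketch of embedding-based semantic search using cosine similarity, a common choice for this kind of ranking. The 4-dimensional vectors and document IDs are hypothetical toy data standing in for real model outputs, and no Gemini API call is made here.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vec: list[float], doc_vecs: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scores = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Hypothetical toy vectors; a real embedding model would return
# vectors with hundreds or thousands of dimensions.
docs = {
    "refund-policy": [0.9, 0.1, 0.0, 0.2],
    "shipping-times": [0.1, 0.8, 0.3, 0.0],
    "api-rate-limits": [0.0, 0.2, 0.9, 0.4],
}
query = [0.8, 0.2, 0.1, 0.1]  # imagine the embedding of "How do I get my money back?"

for doc_id, score in rank_documents(query, docs):
    print(f"{doc_id}: {score:.3f}")
```

Because the query vector points in nearly the same direction as "refund-policy", that document ranks first even though the two texts need not share any keywords; that is the property search and RAG systems rely on.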

Google says Gemini Embedding offers:

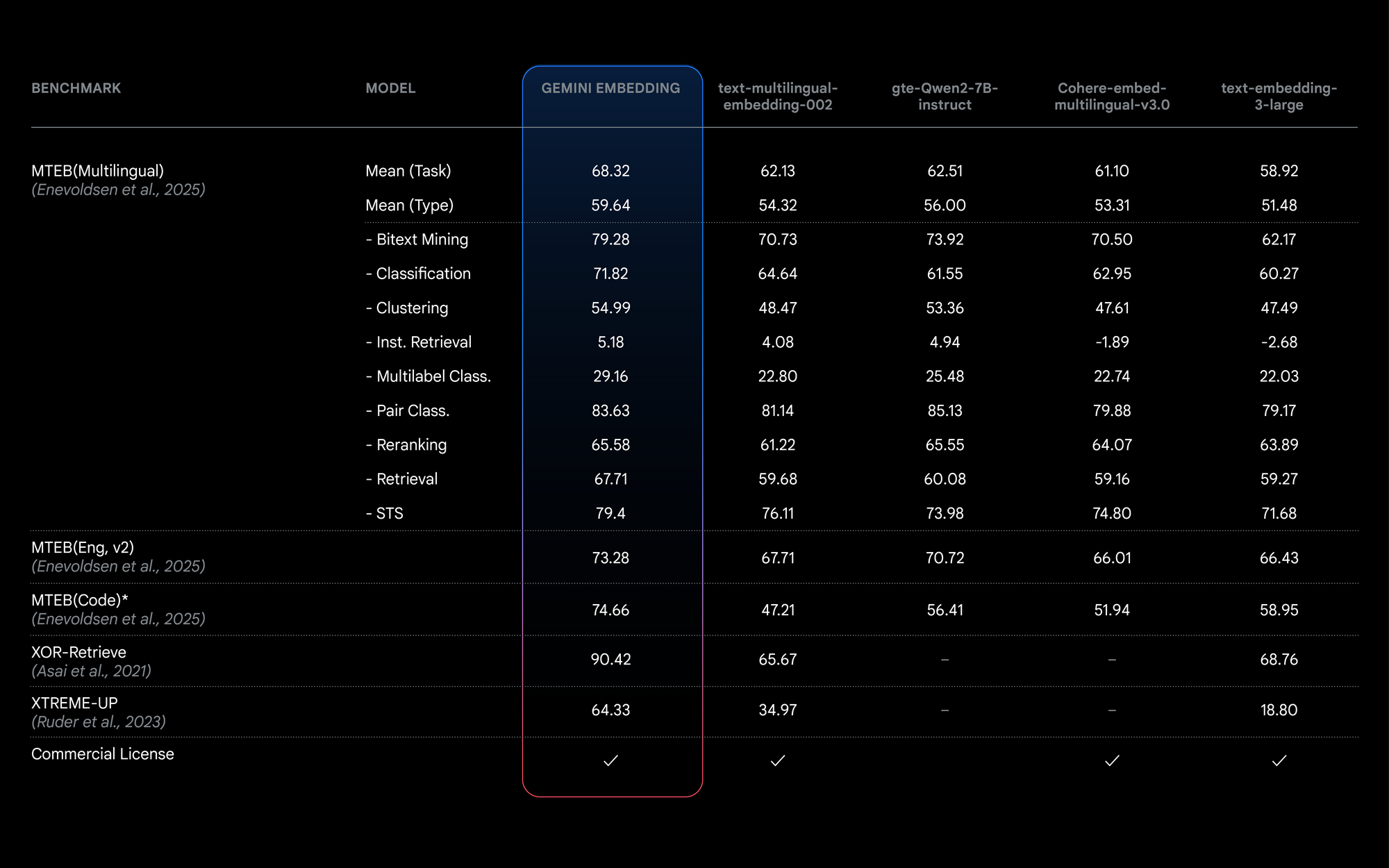

- A mean score of 68.32 on the MTEB Multilingual leaderboard, a margin of 5.81 points over the next competing model.

- An expanded input limit of 8K tokens, more than double the previous capacity, allowing larger chunks of text and code to be embedded at once.

- Higher-dimensional outputs of 3K dimensions, up from previous models, for richer text representations.

- Matryoshka Representation Learning (MRL), a training technique that lets users truncate embeddings to smaller sizes to meet storage constraints while retaining most of their accuracy.
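The client-side half of MRL is simple: keep a prefix of the vector and rescale it to unit length. The sketch below shows that mechanic plus the raw float32 storage savings. It assumes the "3K" output means 3,072 dimensions, and `truncate_embedding` and `storage_bytes` are hypothetical helpers, not part of any Google SDK. The random vector only demonstrates the mechanics; the quality-preserving behavior comes from MRL training, which a random vector does not have.

```python
import math
import random

FULL_DIM = 3072  # assumption: the article's "3K dimensions" taken as 3,072
BYTES_PER_FLOAT32 = 4


def truncate_embedding(vec: list[float], dim: int) -> list[float]:
    """Keep the first `dim` coordinates and rescale them to unit length.

    MRL-trained models concentrate information in the leading dimensions,
    so a prefix remains a usable, if somewhat less precise, embedding.
    """
    if not 0 < dim <= len(vec):
        raise ValueError(f"dim must be in 1..{len(vec)}, got {dim}")
    prefix = vec[:dim]
    norm = math.sqrt(sum(x * x for x in prefix))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in prefix]


def storage_bytes(num_vectors: int, dim: int) -> int:
    """Raw float32 storage for `num_vectors` embeddings of size `dim`."""
    return num_vectors * dim * BYTES_PER_FLOAT32


# Hypothetical stand-in for a real model output.
rng = random.Random(0)
full = [rng.gauss(0.0, 1.0) for _ in range(FULL_DIM)]

for dim in (3072, 1536, 768):
    small = truncate_embedding(full, dim)
    size_mb = storage_bytes(1_000_000, dim) / 1e6
    print(f"{dim} dims -> {len(small)} values, {size_mb:,.0f} MB per million vectors")
```

Renormalizing after truncation keeps cosine and dot-product scores on the same scale, so an index built on truncated vectors can use the same similarity function as one built on full-size vectors.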

Doubling Down on Multilingual Support

Gemini Embedding is designed for broad applicability, handling domains like finance, science, legal, and enterprise search without requiring task-specific fine-tuning. It also supports over 100 languages, doubling the previous reach of Google’s text embedding models. This puts it on par with OpenAI’s multilingual offerings and gives developers more flexibility for global applications.

An Experimental Release With Future Updates

While Gemini Embedding is available today via the Gemini API, Google has made it clear that the model is in an experimental phase, meaning it comes with limited capacity and may undergo changes before its full release. Developers can start integrating it now, but Google plans to refine and optimize it before making it generally available in the coming months.

Google’s move underscores the growing importance of embedding models in AI workflows, where reducing latency, improving efficiency, and expanding language coverage are key priorities for developers. With strong early performance, Gemini Embedding could be a significant step forward in making AI-powered retrieval and classification systems even more effective.