Google announced today that Gemini 2.0 Flash is now generally available, along with the launch of Gemini 2.0 Pro Experimental and Gemini 2.0 Flash-Lite, a cost-effective AI model designed for high-efficiency tasks.

Key Takeaways

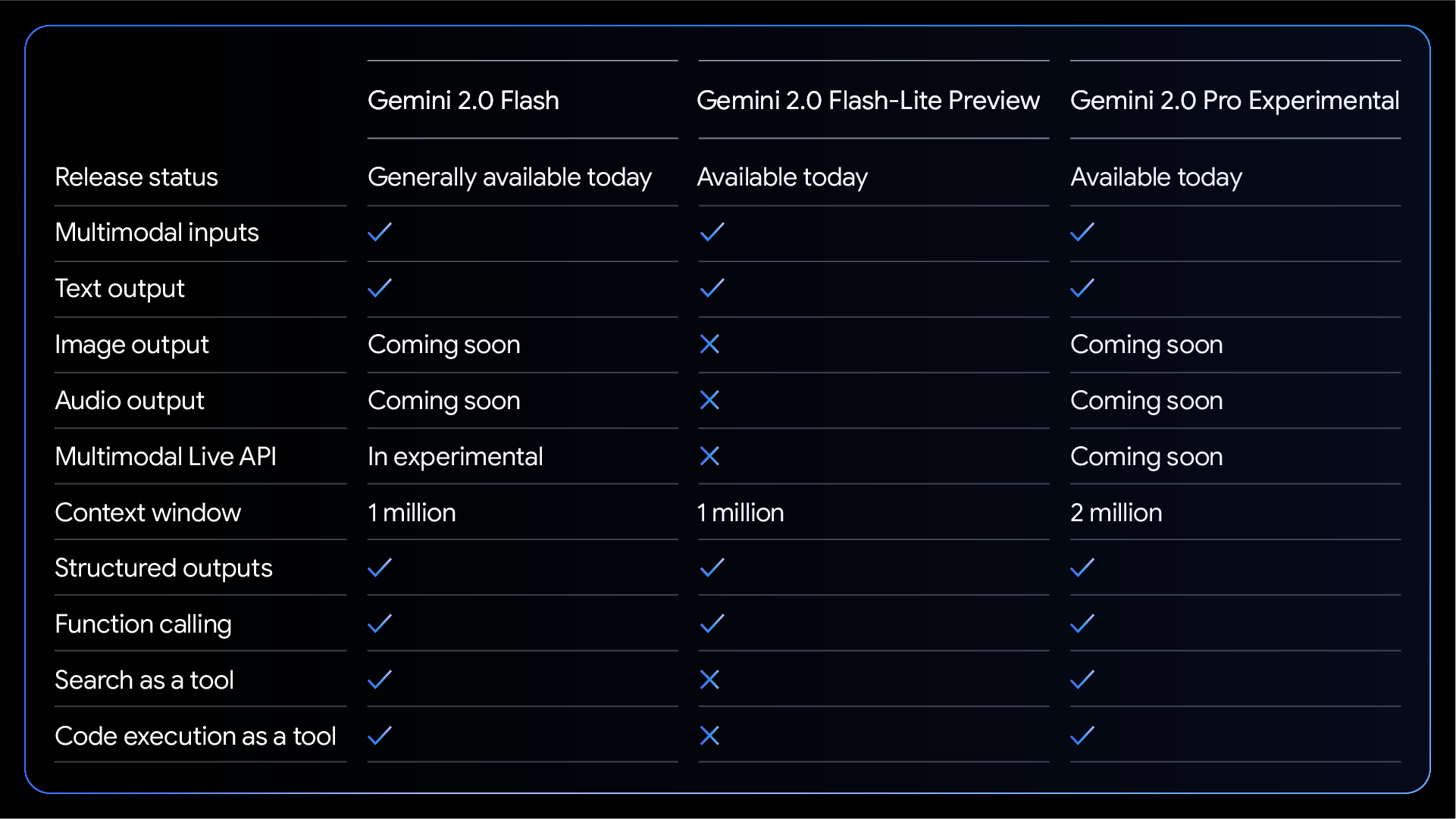

- Gemini 2.0 Flash is now generally available via Google AI Studio and Vertex AI.

- Gemini 2.0 Pro Experimental, Google’s most capable model yet, is now accessible to developers and Gemini Advanced users.

- Flash-Lite, a new low-cost model, offers improved performance over Gemini 1.5 Flash at the same price.

- These releases are part of Google’s broader push to advance AI agent capabilities.

Gemini 2.0 Pro, the flagship model in this release, comes with a 2-million-token context window, enough capacity to process approximately 1.5 million words at once. That is the largest context window among the models announced today, letting Pro take in far more information in a single prompt than its Flash siblings.
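The word estimate follows from a common rule of thumb of roughly 0.75 English words per token. This ratio is an assumption, not a Google figure, and it varies with language and tokenizer:

$$
2{,}000{,}000\ \text{tokens} \times 0.75\ \tfrac{\text{words}}{\text{token}} \approx 1{,}500{,}000\ \text{words}
$$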

According to Logan Kilpatrick, Senior Product Manager for the Gemini API and Google AI Studio, the Pro variant particularly excels at coding tasks and managing complex prompts. It also integrates with Google Search and supports external tool use, reinforcing Google’s focus on AI-driven agents that automate multi-step tasks.

The company is also making Gemini 2.0 Flash widely available after initially launching it for Gemini app users last week. This model is optimized for high-volume, high-frequency tasks, making it an ideal workhorse for businesses integrating AI at scale.

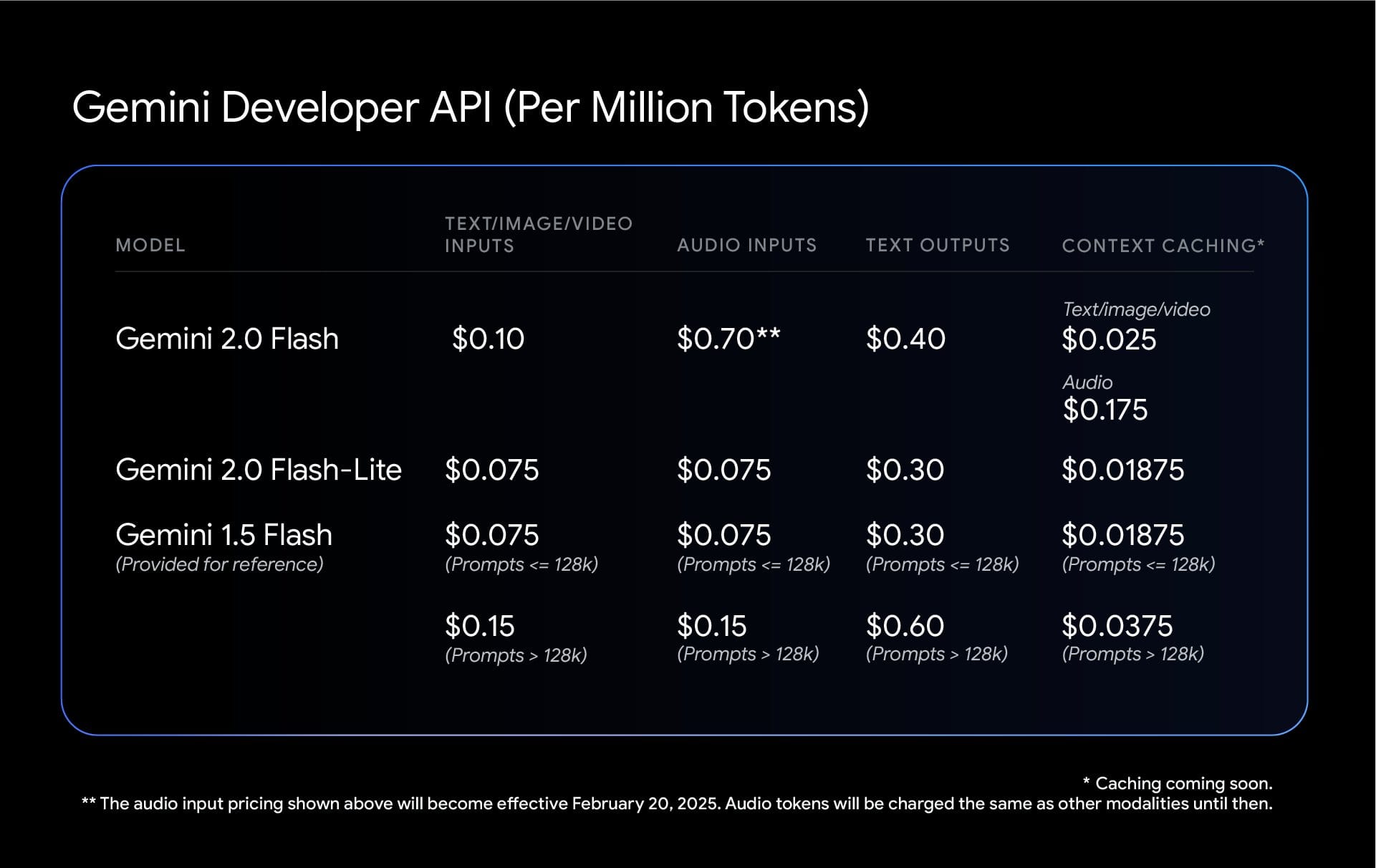

Meanwhile, Gemini 2.0 Flash-Lite is the budget option in the lineup. It keeps the performance gains of the 2.0 series while matching the pricing of its 1.5 predecessor. For developers running high-volume applications, Flash-Lite costs 7.5 cents ($0.075) per million input tokens for text, image, and video, 25% less than the standard 2.0 Flash model's 10 cents ($0.10) per million tokens.
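To make the price gap concrete, here is a minimal Python sketch comparing input-token costs for a hypothetical workload. It assumes the launch list prices of $0.075 (Flash-Lite) and $0.10 (Flash) per million text, image, and video input tokens, and it ignores output-token pricing. The `input_cost_usd` helper and the 500-million-token monthly volume are illustrative assumptions, not part of any Google API.

```python
# Assumed launch list prices, USD per 1M input tokens (text/image/video).
FLASH_LITE_USD_PER_M = 0.075  # Gemini 2.0 Flash-Lite
FLASH_USD_PER_M = 0.10        # Gemini 2.0 Flash


def input_cost_usd(tokens: int, usd_per_million: float) -> float:
    """Hypothetical helper: input cost in USD for `tokens` at a per-million rate."""
    return tokens / 1_000_000 * usd_per_million


# Hypothetical workload: 500 million input tokens per month.
monthly_tokens = 500_000_000

lite = input_cost_usd(monthly_tokens, FLASH_LITE_USD_PER_M)
flash = input_cost_usd(monthly_tokens, FLASH_USD_PER_M)

# Relative saving of Flash-Lite versus Flash, as a percentage.
savings_pct = (flash - lite) / flash * 100

print(f"Flash-Lite: ${lite:.2f}  Flash: ${flash:.2f}  savings: {savings_pct:.0f}%")
```

Because both prices are flat per-token rates, the 25% saving holds at any volume. Only the absolute dollar difference grows with the workload.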

To round out today's releases, Google has made the experimental Gemini 2.0 Flash Thinking model available to all Gemini app users. In a series of posts on X, CEO Sundar Pichai highlighted the model's ability to integrate with Google's ecosystem of services, including YouTube, Search, and Maps, enabling more contextual and informed interactions.

3/ One more thing - our latest experimental 2.0 Flash Thinking model with advanced reasoning is available to all @Geminiapp users. Look for it in the drop down, including a version that connects to apps like YouTube, Search + Maps.

— Sundar Pichai (@sundarpichai) February 5, 2025


The competitive landscape is shifting rapidly. Google’s move follows increasing pressure from rivals such as DeepSeek, whose models have gained traction for their reasoning abilities at lower costs. OpenAI, Microsoft, and Meta are also accelerating their efforts to develop AI agents capable of handling sophisticated user tasks without step-by-step guidance.

With these releases, Google is positioning Gemini as a core AI platform for developers, emphasizing flexibility, affordability, and cutting-edge performance. By pairing cost-efficient models like Flash-Lite with high-performance options like Pro, Google is tailoring its lineup to both consumer and enterprise demands, setting the stage for an even more competitive AI market in 2025.

Businesses and developers looking to implement the new models can access them through Google AI Studio and Vertex AI.