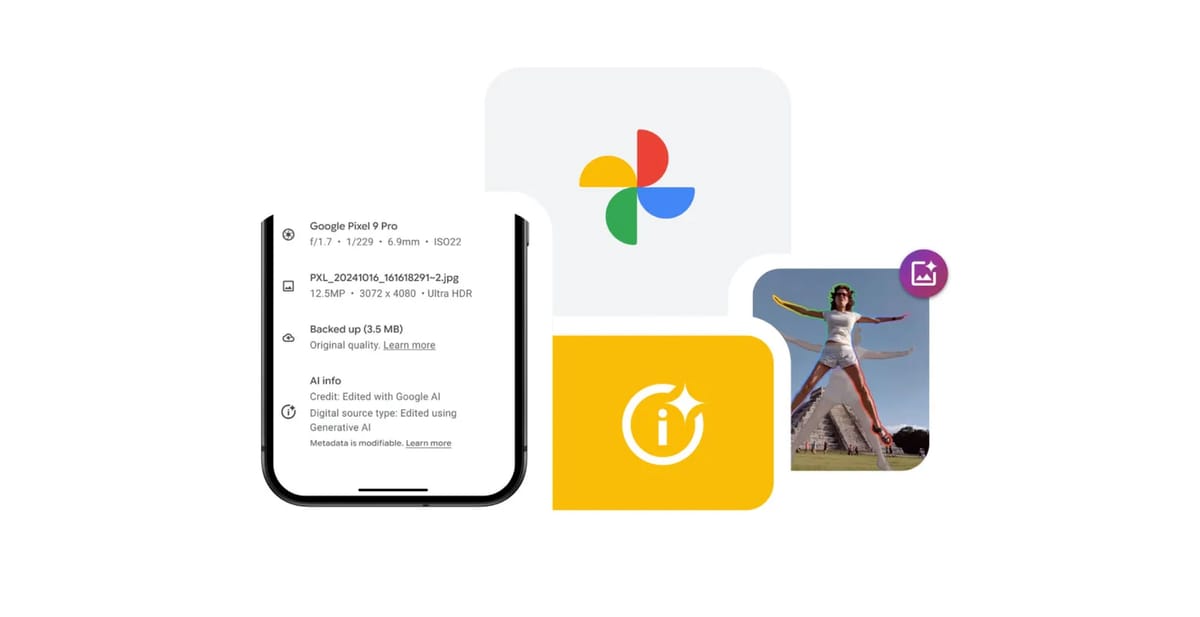

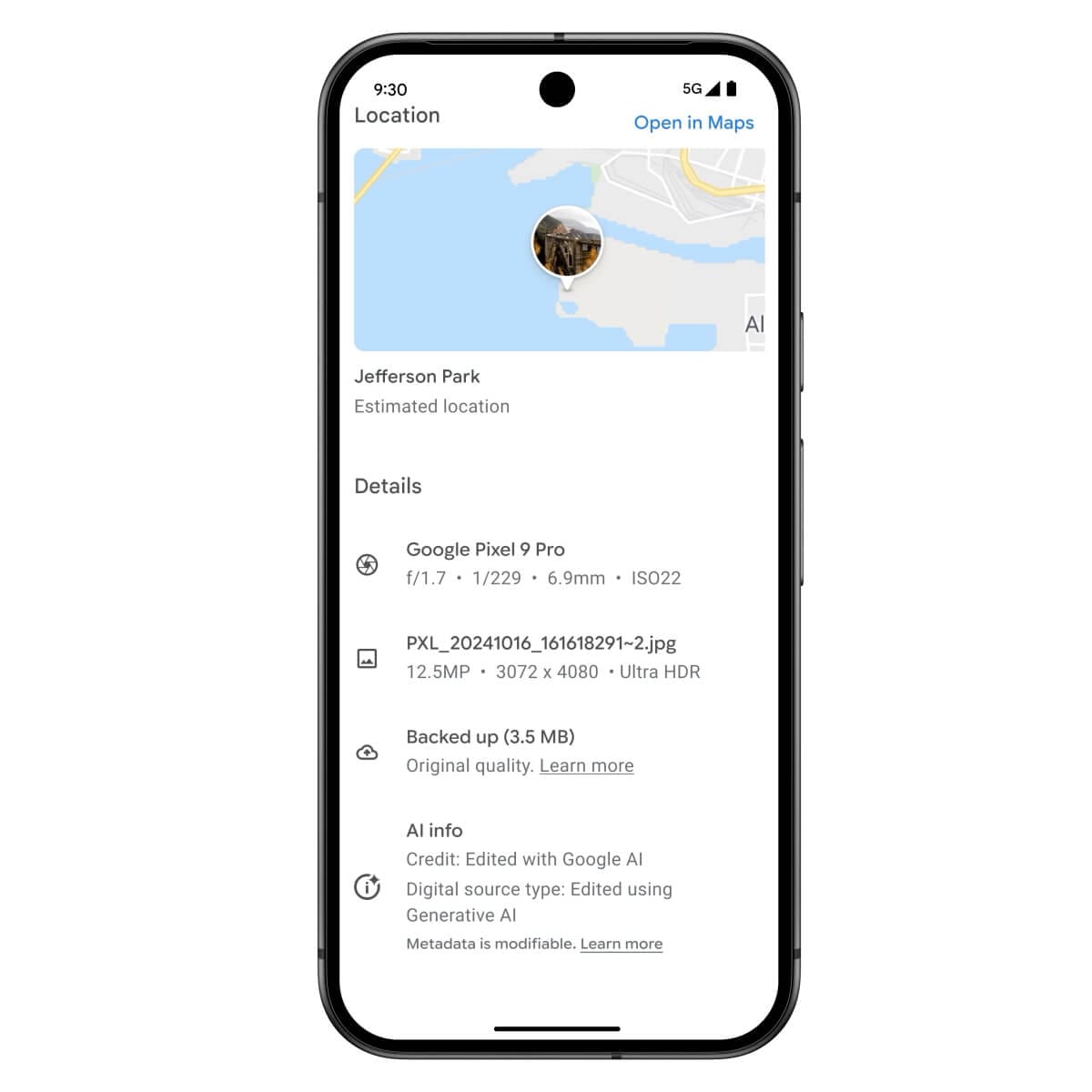

Starting next week, Google Photos will add a new disclosure for images edited with its AI features, such as Magic Editor, Magic Eraser, and Zoom Enhance. Users viewing a photo in the app will see a note in the "Details" section at the bottom indicating whether the image has been "Edited with Google AI." Google says the step is intended to "further improve transparency" as more of its AI-powered tools become available.

With AI becoming a larger part of photo editing, it's increasingly hard for users to tell which moments are real and which have been enhanced. However, the new labeling approach may still leave some users wanting more.

Unlike a watermark directly on the image, or the Content Credentials Pin, the AI disclosure is only visible in the details view. This means if you're seeing the photo on social media, in a message, or even in your own library, you might have no immediate clue that the image was edited by AI. The information is tucked away, and unless viewers make a deliberate effort to dig into the metadata, the AI edits remain largely invisible.

Google's announcement acknowledges that transparency is a work in progress, noting that the new label builds on metadata that AI-edited photos already carry, based on technical standards from the International Press Telecommunications Council (IPTC). Google is expanding on this by surfacing that metadata more visibly in the app. The change will also apply to non-generative AI features, like "Best Take" and "Add Me," which blend elements from multiple photos for a better group picture.
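For readers curious what that metadata looks like in practice, here is a minimal Python sketch that scans a file's raw bytes for an IPTC Digital Source Type URI, the kind of marker typically embedded in an image's XMP metadata. The function names are hypothetical, the two "AI" terms come from the IPTC vocabulary, and which exact term Google Photos writes for a given tool is an assumption here. A crude byte scan like this is no substitute for a real XMP parser.

```python
import re

# IPTC Digital Source Type terms that signal AI involvement.
# Assumption: which of these Google Photos writes for each tool is not confirmed here.
AI_SOURCE_TYPES = {
    "trainedAlgorithmicMedia",               # created entirely by a trained model
    "compositeWithTrainedAlgorithmicMedia",  # a capture edited with model-generated elements
}

# Matches the vocabulary URI wherever it appears in the file's bytes.
_PATTERN = re.compile(rb"cv\.iptc\.org/newscodes/digitalsourcetype/([A-Za-z]+)")


def digital_source_types(data: bytes) -> set[str]:
    """Return every Digital Source Type term found in the raw bytes."""
    return {match.decode("ascii") for match in _PATTERN.findall(data)}


def looks_ai_edited(data: bytes) -> bool:
    """True if any term found is one of the AI-related source types."""
    return bool(digital_source_types(data) & AI_SOURCE_TYPES)
```

To try it on a real file, read the image with `open(path, "rb").read()` and pass the bytes to `looks_ai_edited`. The catch is the same one this article describes: if an app or platform strips metadata on upload, there is nothing left to find.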

Yet, critics argue that while these measures are a positive step, they do little to address the broader challenge: without an obvious visual watermark, AI-edited photos may blend seamlessly into everyday online life, blurring the line between real and synthetic content. The lack of clear in-frame labels means people encountering these images may be none the wiser unless they actively investigate—a step most users don't take.

Google appears to be responding to ongoing concerns about the trustworthiness of visual media in the era of generative AI. The company has faced criticism for the potential deception these AI tools could enable—criticism intensified by the fact that many users might not even know such tools are available. By adding this new disclosure in Google Photos, the tech giant is at least attempting to shine a light on AI's behind-the-scenes role in creating what we see.

Google has not ruled out adding visual watermarks to its AI-edited images, a step that some suggest would make AI modifications more immediately obvious. Still, the tech industry is far from a consensus on how best to inform viewers about AI edits. For now, Google's strategy leans on metadata, in the hope that platforms such as social networks and messaging apps will pick up the baton and alert users when they're seeing synthetic content.