Researchers at Google AI have introduced a method that allows robots to acquire new skills from natural language instructions. The method bridges the gap between high-level natural language and low-level robotic control by utilizing reward functions as an interpretable intermediate representation.

Large language models (LLMs) like OpenAI's GPT-4 and Google's PaLM have shown the ability to acquire new capabilities through few-shot in-context learning. However, directly applying LLMs to robot control has been challenging. Low-level robotic actions are heavily dependent on hardware specifics and are underrepresented in the training data for general purpose LLMs.

The potential for robots to assist humans in various tasks, such as arranging lunch boxes or executing intricate maneuvers, is considerable. Yet, despite the advancements in LLM technology, existing systems have struggled to move beyond the limitations of pre-programmed behaviors or "primitives." These pre-defined actions, although extensive, restrict the robot's ability to learn new skills, as they require specialized coding expertise to expand or modify.

The researcher team proposes using reward functions as this interface. Reward functions map the robot's state and actions to a scalar value representing the desired objective. They are rich in semantics while remaining interpretable. Most importantly, they can be optimized through reinforcement learning or model predictive control to derive low-level policies.

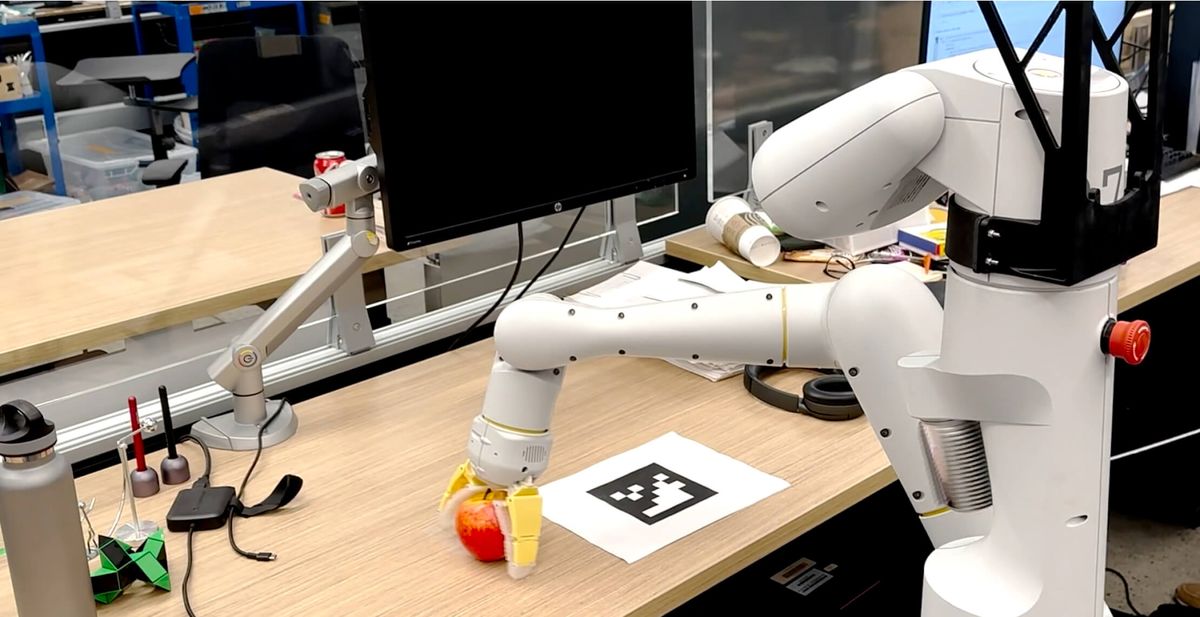

The system consists of two main components - a Reward Translator and a Motion Controller. The Reward Translator utilizes the LLM to convert a natural language instruction into a reward function represented as Python code. The Motion Controller then optimizes this reward function in real-time to determine the optimal torque commands for the robot's motors. This two-step approach allows for a more stable and reliable conversion of user instructions into actions that the robot can understand and execute.

To determine the optimal actions, the Motion Controller uses an open-source implementation based on MuJoCo that has proven effective in dealing with uncertainties and allows real-time re-planning. This enables users to immediately see the results of their instructions and provide feedback, thereby creating a truly interactive behavior creation experience.

The researchers evaluated their approach on a diverse set of 17 tasks using simulated legged and arm robots. It achieved 90% task completion, compared to only 50% using existing Code-as-Policies methods based on action primitives. The team also validated the system on a physical robot manipulator, where it learned complex behaviors like non-prehensile object pushing from scratch.

Examples

The following videos showcase examples of the emergent locomotion and dexterous manipulation skills acquired by the simulated robots through natural language instructions provided to the system.

Robot Dog

Dexterous Manipulator

This study could herald a new era in robotics, enabling anyone to easily teach robots an array of new skills simply by explaining it to them. Additionally, as LLMs continue to advance, intelligent assistants may one day teach robots directly through natural conversation. This could greatly expand the flexibility and ease of use of robotic systems across diverse real-world settings and offer a more seamless human-robot collaboration.