One year after unveiling its Gemini model family, Google has announced Gemini 2.0 Flash, the first release in the Gemini 2.0 lineup. The new model brings stronger benchmark performance, expanded multimodal capabilities, and new developer-focused features.

Key Points:

- Outperforms Gemini 1.5 Pro on key benchmarks while running at twice its speed

- Natively generates images and multilingual audio alongside text

- Multimodal Live API allows developers to build dynamic applications with real-time audio and video streaming

- Built-in tool integration with Google Search and code execution

With Gemini 2.0 Flash, Google is doubling down on its AI ambitions, delivering a significant upgrade to a foundation model family that has seen explosive growth in developer adoption.

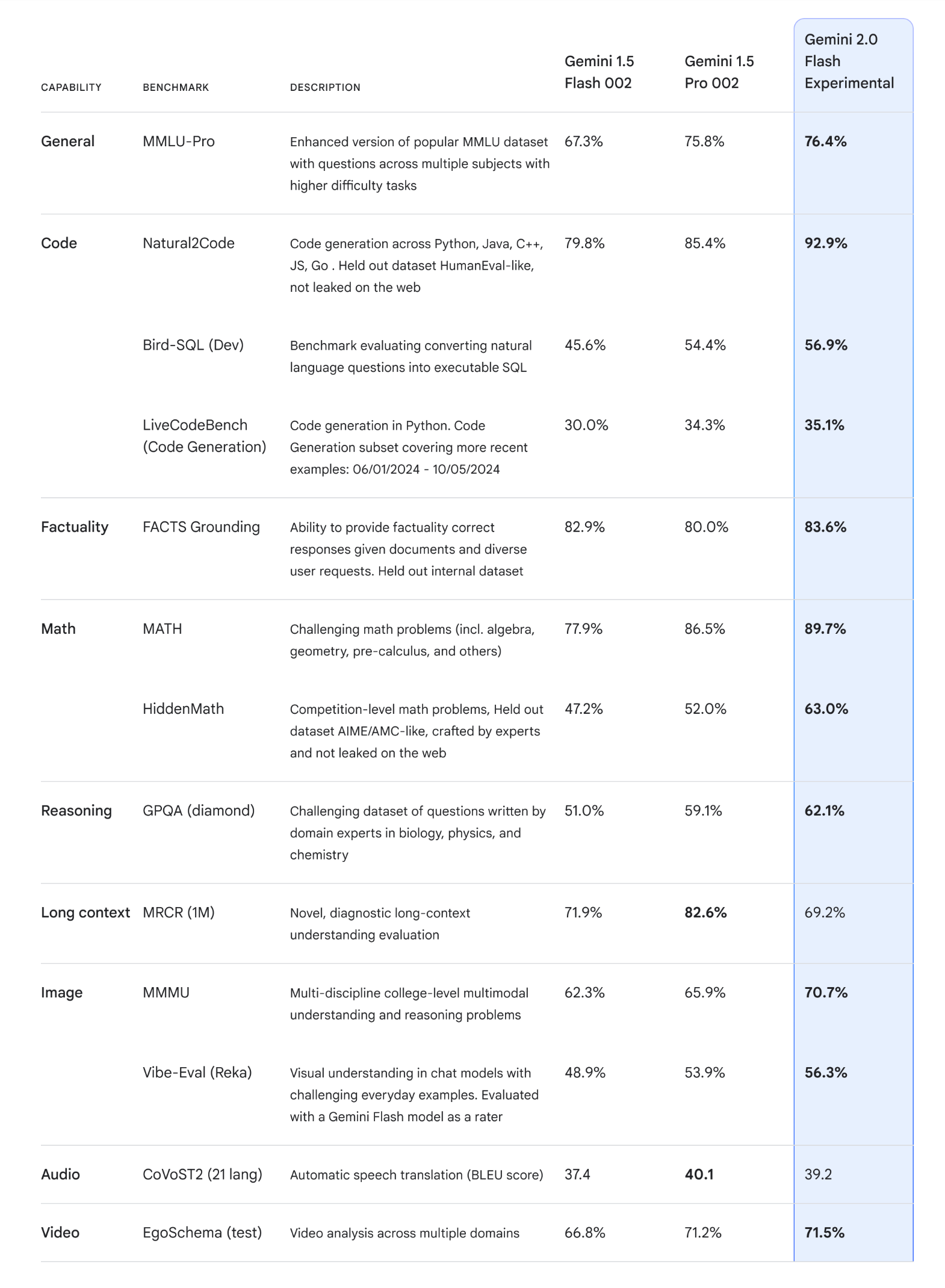

The new model delivers substantial capability gains while keeping the fast response times that made its predecessor, Gemini 1.5 Flash, popular among developers. Google shared benchmarks highlighting the model's strengths in general knowledge, coding, advanced reasoning, and multimodal tasks.

"Gemini 2.0 Flash builds on the success of 1.5 Flash, our most popular model yet for developers, with enhanced performance at similarly fast response times," said Demis Hassabis, CEO of Google DeepMind.

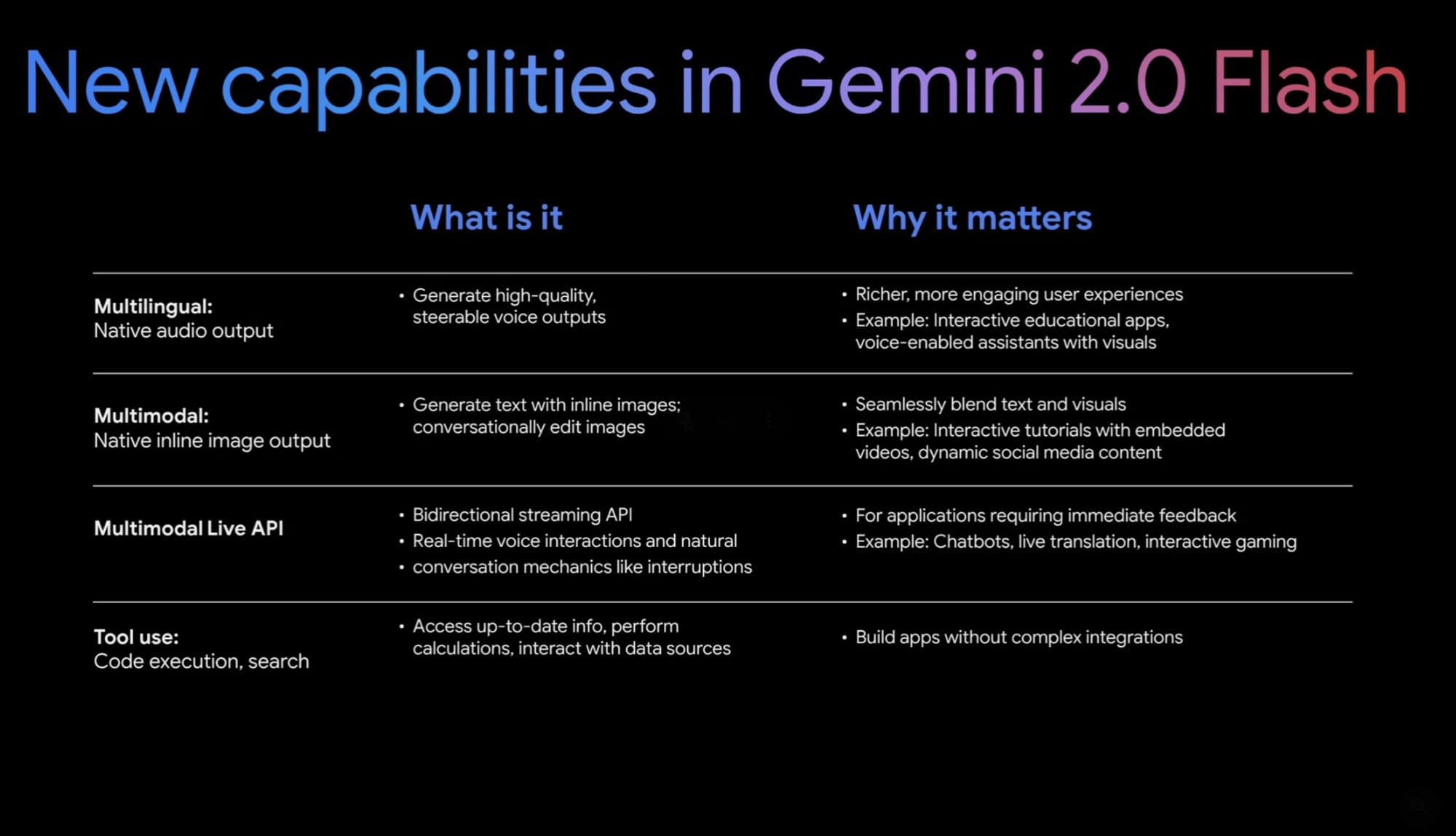

The model introduces several new features aimed at developers building AI-powered applications:

- Multilingual native audio output: Generate high-quality, steerable voice outputs in multiple languages, with accents tailored to user preferences.

- Native inline image output: Seamlessly combine text with images for applications like tutorials or social media content.

- Multimodal Live API: A bidirectional streaming API that supports real-time conversations with natural interaction patterns, similar to the experience offered by Google's Project Astra and OpenAI's ChatGPT Advanced Voice Mode.

- Tool integrations: Natively call tools such as code execution, Google Search, and custom user-defined functions.
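To make the tool integrations more concrete, here is a minimal sketch that builds (but does not send) a request to the Gemini API's REST `generateContent` endpoint with the built-in code execution tool enabled. It assumes the experimental model id `gemini-2.0-flash-exp` and the `v1beta` endpoint used around launch; both, along with the helper name `build_request`, are assumptions for illustration, so check Google's current Gemini API documentation before relying on them. Only the Python standard library is used, and the actual network call is left commented out because it needs a valid API key.

```python
import json
import urllib.request

# Assumed values: experimental model id and v1beta REST endpoint from the launch period.
# Verify against the current Gemini API docs before use.
MODEL = "gemini-2.0-flash-exp"
ENDPOINT = (
    "https://generativelanguage.googleapis.com/v1beta/models/"
    f"{MODEL}:generateContent"
)


def build_request(prompt: str, api_key: str, tools: list[str]) -> urllib.request.Request:
    """Hypothetical helper: build (without sending) a generateContent request.

    Each name in `tools` (e.g. "code_execution" or "google_search") becomes an
    empty tool-config object in the JSON request body.
    """
    body = {
        "contents": [{"parts": [{"text": prompt}]}],
        "tools": [{name: {}} for name in tools],
    }
    return urllib.request.Request(
        f"{ENDPOINT}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_request(
    "What is the sum of the first 50 prime numbers? Generate and run code for the calculation.",
    api_key="YOUR_API_KEY",  # placeholder; a real key would come from Google AI Studio
    tools=["code_execution"],
)
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))

# Sending the request requires a valid key and network access:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```

Passing `tools=["google_search"]` instead would request Search grounding rather than code execution. Keeping the sketch to a plain HTTP request avoids depending on any particular SDK version while the model is still experimental.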

Gemini 2.0 Flash is currently available as an experimental model through the Gemini API in Google AI Studio and Vertex AI. Developers can access multimodal input and text output immediately, while text-to-speech and native image generation are available to early-access partners. General availability is expected in January 2025, alongside additional model sizes.

The launch comes as Google reports significant traction in its AI developer ecosystem, with millions of developers now building applications across 109 languages using its AI tools. This growth underscores the increasing demand for powerful, efficient AI models that can be readily integrated into real-world applications.

Early access partners are already putting the new capabilities to use. Companies like tldraw, Viggle, and Toonsutra are developing applications ranging from visual playgrounds to multilingual translation services, showcasing the model's versatility.

Google's emphasis on developer feedback and iterative improvement appears to be paying off, with usage of its Flash model growing by over 900% since August. This rapid adoption suggests strong market validation for Google's models and their approach to AI development and deployment.