Google today announced the launch of Gemma, a new family of lightweight open-weight language models. Available in two sizes - Gemma 2B and Gemma 7B - the new models demonstrate "best-in-class performance" compared to other open models according to Google. Instruction-tuned variants of both models are also being released to better support safe and helpful conversational AI applications.

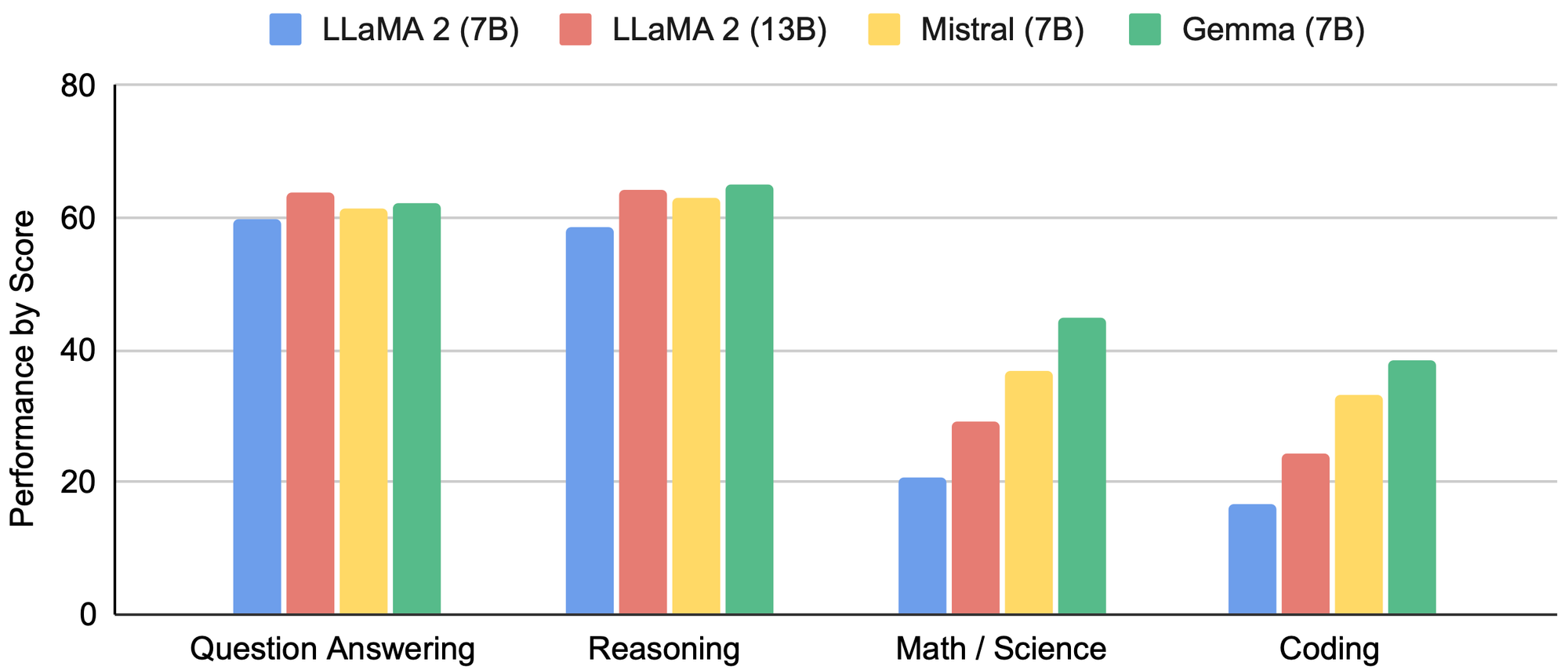

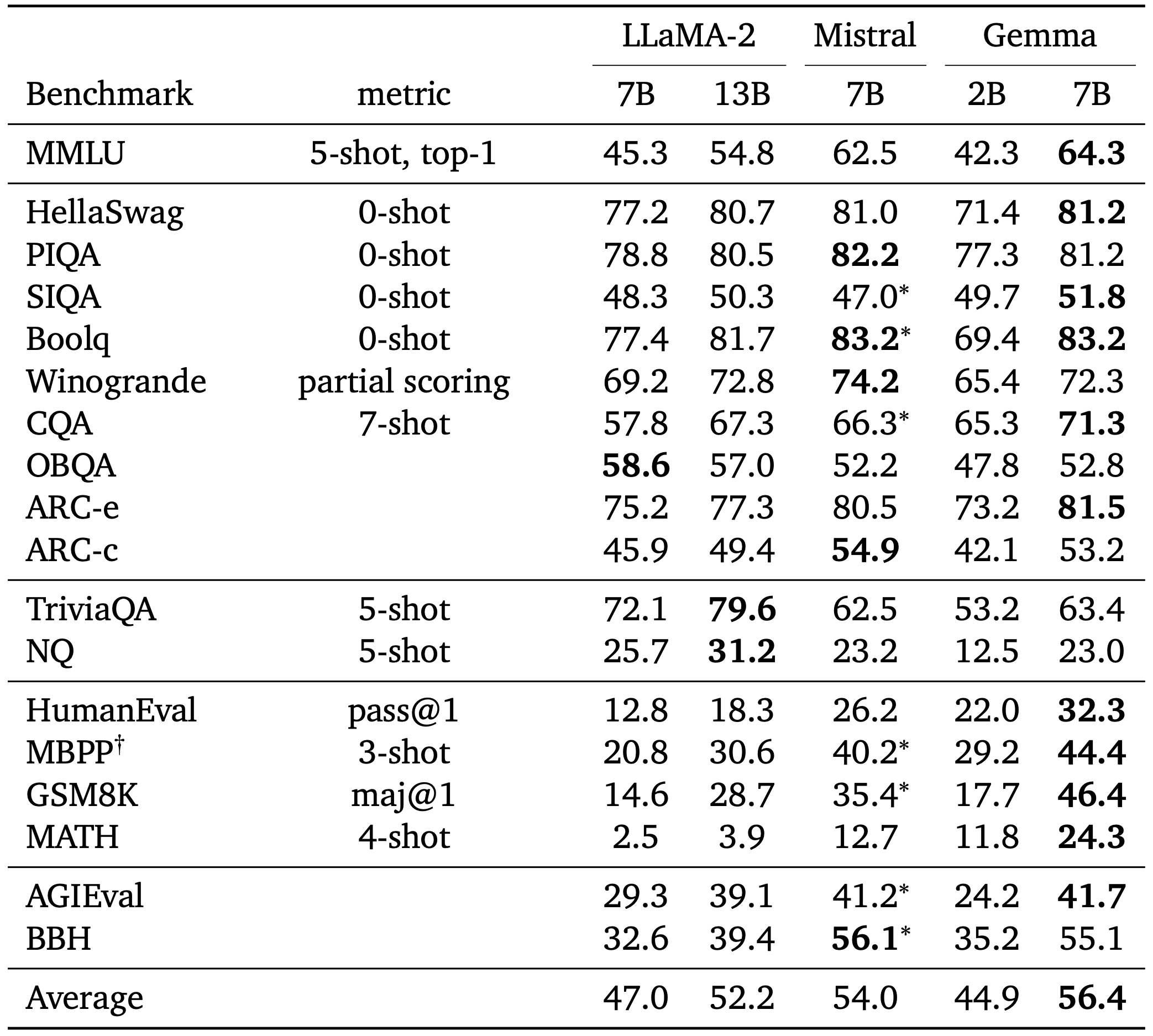

Gemma achieved state-of-the-art results across several key benchmarks, outscoring popular open models like Llama-2 and Mistral.

“Gemma 7B outperforms all open source alternatives on 11 out of 18 language tasks,” said Jeff Dean, Google’s Chief AI Scientist, in a blog post. “It shows particular strength on mathematical reasoning and coding capabilities.”

For example, on the challenging Massive Multitask Language Understanding (MMLU) benchmark, Gemma 7B scored 64.3% - exceeding the 60.8% scored by Llama-2 13B, a much larger model. Gemma 2B also bested Llama-2 7B on 8 out of 8 tests of general language mastery and reasoning ability.

Google researchers noted that Gemma still trails the company’s much larger Gemini models in areas like mathematical reasoning. But its strong overall performance, combined with open availability, makes it a compelling new option for developers.

Google also emphasized Gemma’s focus on responsible AI development. The company has released a Responsible Generative AI Toolkit alongside Gemma covering areas like safety classification, debugging tools, and best practices guidance.

“Gemma is designed with our AI Principles at the forefront,” Google stated. “As part of making Gemma pre-trained models safe and reliable, we used automated techniques to filter out certain personal information and other sensitive data from training sets.”

Extensive testing was done to align the instruction-tuned Gemma models with helpfulness and safety standards. Google researchers acknowledged risks remain in open-sourcing language models but believe Gemma will provide overall benefit to AI innovation.

Google is making Gemma easy to access, providing free, ready-to-use Colab and Kaggle notebooks alongside integration with popular tools such as Hugging Face, MaxText, NVIDIA NeMo, and TensorRT-LLM. Pre-trained and instruction-tuned Gemma models can run on a laptop, workstation, or Google Cloud, with easy deployment on Vertex AI and Google Kubernetes Engine (GKE).
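
For developers curious what the Hugging Face route might look like in practice, here is a minimal Python sketch. It is not taken from Google's announcement: the model id `google/gemma-2b-it`, the helper names `build_chat` and `generate_reply`, and the generation settings are all assumptions for illustration. It also assumes the `transformers` and `torch` packages are installed and that the model license has been accepted on the Hugging Face Hub.

```python
# Sketch only: the model id and generation settings are assumptions,
# not details taken from the announcement.
MODEL_ID = "google/gemma-2b-it"  # assumed Hub id for the instruction-tuned 2B model


def build_chat(user_message: str) -> list[dict[str, str]]:
    """Wrap one user message in the role/content format used by chat templates."""
    return [{"role": "user", "content": user_message}]


def generate_reply(user_message: str, max_new_tokens: int = 64) -> str:
    """Load the model and generate a reply (downloads weights on first use)."""
    # Imported lazily so build_chat can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # The chat template adds the turn markers the instruction-tuned model expects.
    inputs = tokenizer.apply_chat_template(
        build_chat(user_message),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    )
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, skipping the prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0, prompt_length:], skip_special_tokens=True)
```

Calling `generate_reply("Explain what an open-weight model is in one sentence.")` would then download the weights and return the model's answer as a string. Hardware requirements and the exact output will vary by setup.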