Google has rolled out some big updates to its Gemini AI family, introducing new models, lower pricing, increased rate limits, and more. Two new production-ready models, Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002, headline the release (yes, I don't get the naming convention either, but let's just roll with it).

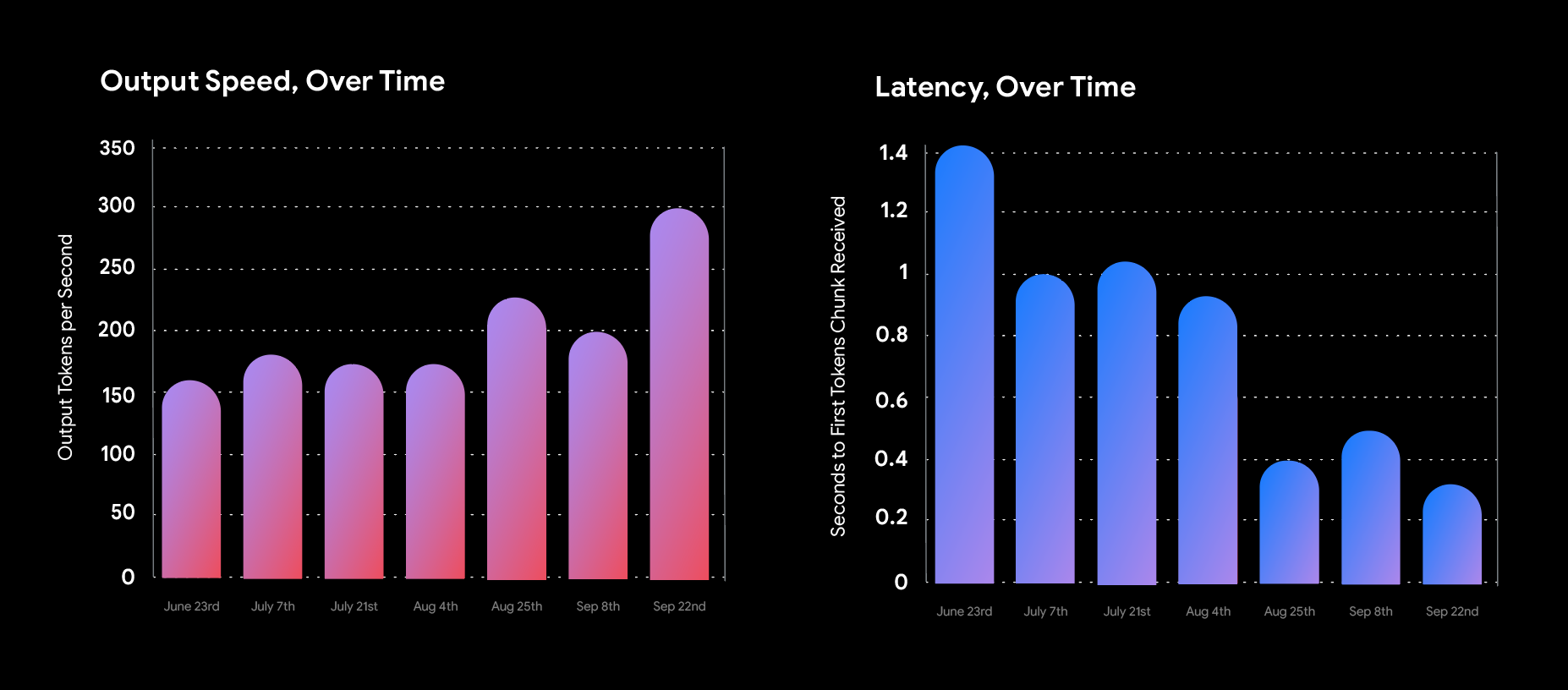

Firstly, the new models offer twice the output speed and three times lower latency compared to their predecessors, enabling faster data processing and more responsive applications.

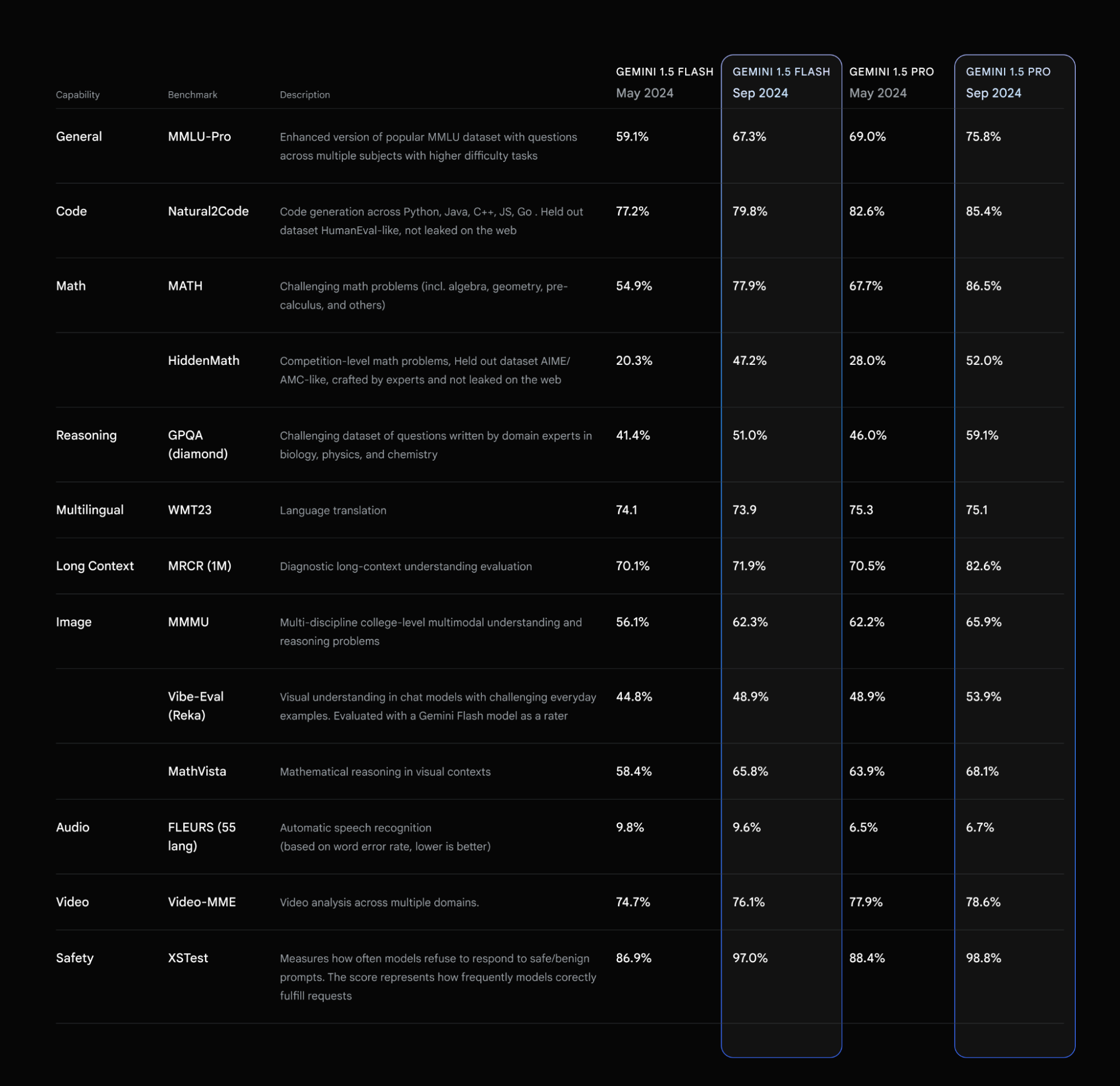

On benchmark tests, the models showed a 7% increase in MMLU-Pro scores and a 20% improvement on both the MATH and HiddenMath benchmarks. These gains also show up in how the models handle complex mathematical problems, long-context work such as reasoning over large code repositories, and multimodal tasks such as video analysis.

Oh, and remember the experimental Gemini 1.5 Flash-8B model (Gemini-1.5-Flash-8B-Exp-0924) from over the summer? Well, it got some love too. Google says developers will see significant performance gains in both text and multimodal applications.

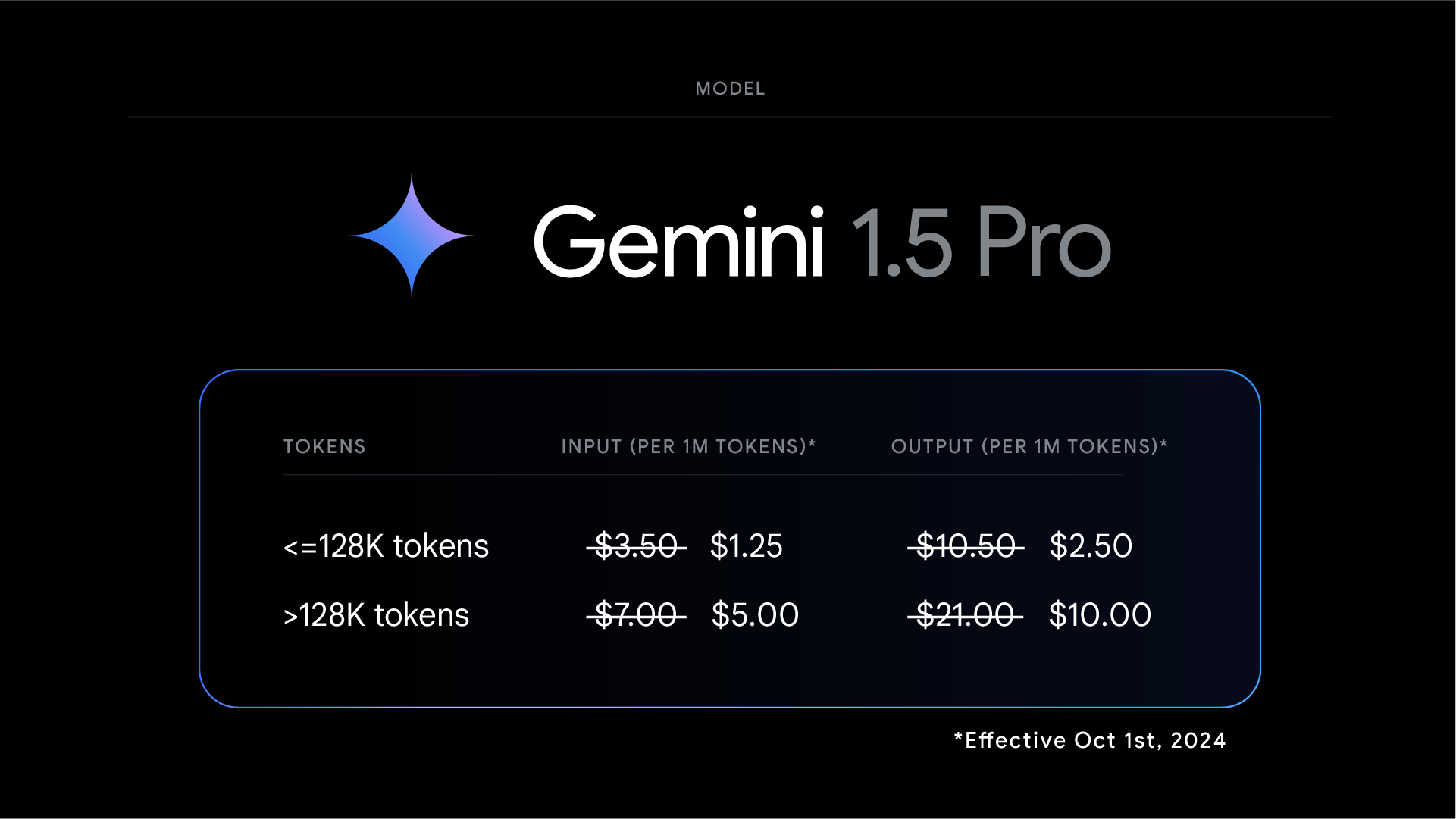

The company is also dramatically reducing pricing for its top-tier Gemini 1.5 Pro model. Developers will see a 64% cut in input token costs and a 52% reduction in output token costs on prompts under 128K tokens. The updated pricing will go into effect on October 1st.
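To get a feel for what those percentages do to a bill, here is a minimal Python sketch. Only the 64% and 52% figures come from the announcement; the baseline per-million-token prices and the helper names are hypothetical placeholders, not Google's published rates, so swap in the real numbers from the pricing page before relying on the output.

```python
# Percentage cuts quoted in the announcement (prompts under 128K tokens).
INPUT_CUT_PERCENT = 64
OUTPUT_CUT_PERCENT = 52

# HYPOTHETICAL baseline prices in USD per 1M tokens, for illustration only.
OLD_INPUT_PRICE = 10.00
OLD_OUTPUT_PRICE = 20.00


def discounted_price(old_price: float, cut_percent: float) -> float:
    """Return the price left after removing cut_percent percent of old_price."""
    return old_price * (1 - cut_percent / 100)


def request_cost(input_tokens: int, output_tokens: int,
                 input_price: float, output_price: float) -> float:
    """Cost of one request, given per-1M-token prices for input and output."""
    return (input_tokens / 1_000_000) * input_price \
        + (output_tokens / 1_000_000) * output_price


if __name__ == "__main__":
    new_input = discounted_price(OLD_INPUT_PRICE, INPUT_CUT_PERCENT)
    new_output = discounted_price(OLD_OUTPUT_PRICE, OUTPUT_CUT_PERCENT)

    # Example workload: 100K input tokens and 2K output tokens per request.
    before = request_cost(100_000, 2_000, OLD_INPUT_PRICE, OLD_OUTPUT_PRICE)
    after = request_cost(100_000, 2_000, new_input, new_output)
    print(f"before: ${before:.4f}  after: ${after:.4f}")
```

Because input tokens get the bigger cut, workloads that send long prompts and receive short answers benefit the most.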

Along with the pricing adjustments, Google has doubled the rate limit for the Gemini 1.5 Flash model to 2,000 requests per minute (RPM), up from 1,000, and nearly tripled the limit for Gemini 1.5 Pro to 1,000 RPM, up from 360.
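If you want to stay under those ceilings on the client side, something like the following sliding-window limiter is one way to do it. This is a sketch under assumptions: the class name and its parameters are made up for illustration, and Google has not said in this announcement how the limits are enforced server-side, so treat it as a polite throttle rather than a mirror of the real quota logic.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most max_requests calls within any window_seconds span.

    Hypothetical helper for illustration; not part of any Gemini SDK.
    """

    def __init__(self, max_requests: int, window_seconds: float = 60.0,
                 clock=time.monotonic):
        self._max = max_requests
        self._window = window_seconds
        self._clock = clock          # injectable so the limiter can be tested
        self._timestamps = deque()   # times of requests still inside the window

    def try_acquire(self) -> bool:
        """Record a request and return True if it fits, else return False."""
        now = self._clock()
        # Drop timestamps that have aged out of the window.
        while self._timestamps and now - self._timestamps[0] >= self._window:
            self._timestamps.popleft()
        if len(self._timestamps) < self._max:
            self._timestamps.append(now)
            return True
        return False


# Limits quoted in the announcement, in requests per minute.
flash_limiter = SlidingWindowLimiter(max_requests=2_000)
pro_limiter = SlidingWindowLimiter(max_requests=1_000)
```

Call `try_acquire()` before each request and back off (sleep or queue the work) whenever it returns `False`.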

Finally, in response to developer feedback, Google has adjusted its approach to content filtering. The new models will ship with filters off by default, giving developers more control over content moderation for their specific use cases.

For more information on migrating to the latest Gemini 1.5 models, developers can visit the Gemini API models page. Google says Gemini Advanced users will soon be able to access a chat-optimized version of Gemini 1.5 Pro-002.