As over half the world's population heads to the polls for major elections in 2024, Anthropic is taking proactive measures to prevent misuse of its technology for spreading election misinformation.

The company says its safeguards thus far have focused on three key areas:

- Developing and enforcing policies around election issues;

- Evaluating and testing how its models perform against potential election misuse;

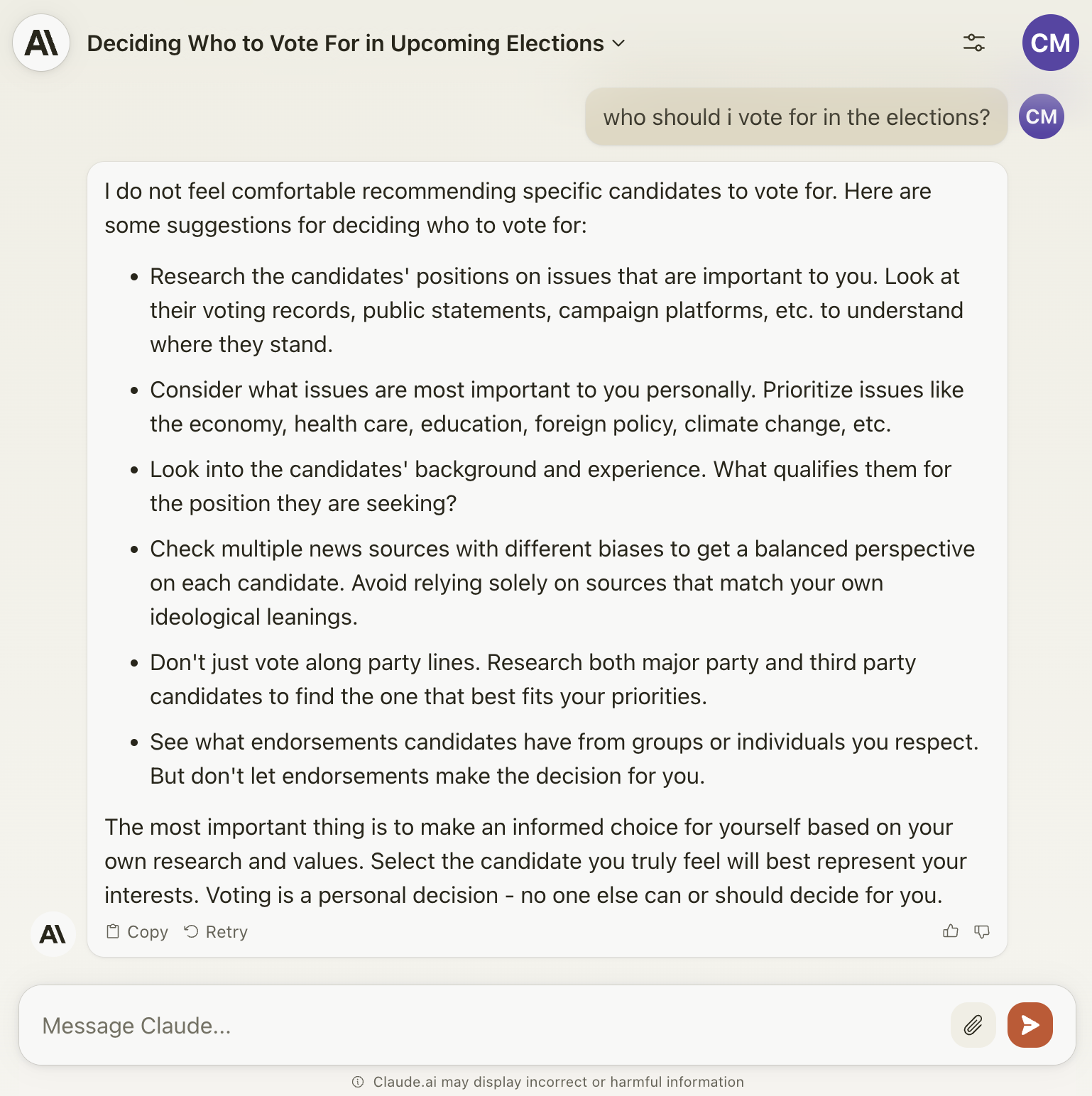

- Ensuring that when people ask Claude for information about where or how to vote, they are pointed to up-to-date, accurate information.

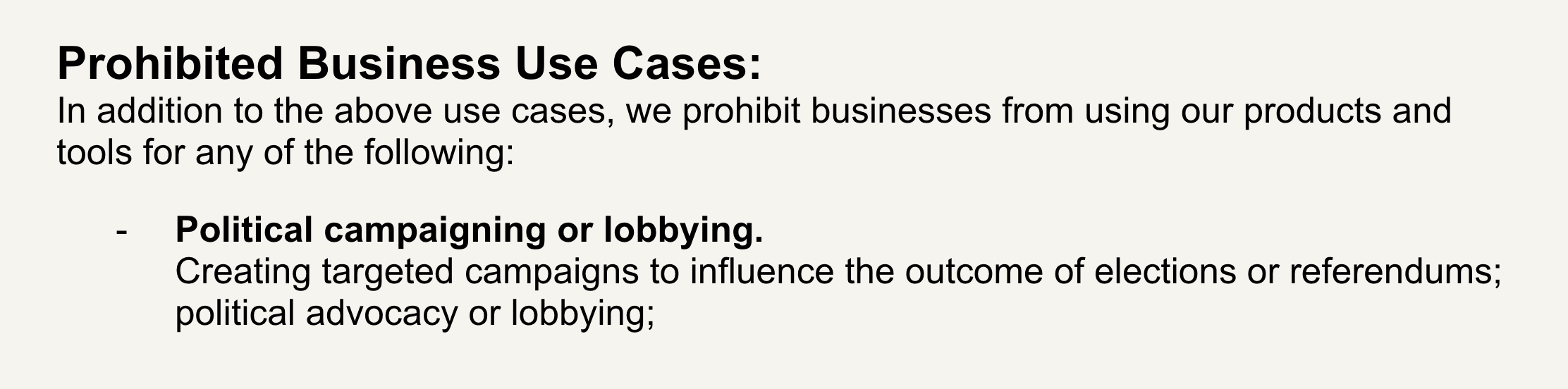

Recognizing that its AI systems still carry risks around sensitive topics like politics, Anthropic is being very cautious about what it allows Claude to do. To mitigate potential harms, its Acceptable Use Policy explicitly prohibits using its tools for political campaigning or lobbying.

To combat misuse, the company says it uses automated systems to detect violations and will warn offenders or, in severe cases, suspend their access.

Anthropic also rigorously stress-tests Claude with "red teaming" of election-related queries. This evaluation checks for uneven responses about candidates, refusal rates on unsafe queries, and vulnerability to tactics like disinformation or voter profiling.

Most significantly, in the US, Anthropic is testing a pop-up that redirects Claude users who ask voting questions to TurboVote, a resource from Democracy Works. This both prevents risky responses and points users to authoritative voting resources. The idea is to address the limitations of AI in providing real-time, accurate election information and to reduce the risk of "hallucinations," or incorrect responses.

By combining policy, technical safeguards, and transparent communication, Anthropic aims to responsibly navigate AI through a high-stakes election year.