Meta today open sourced Code Llama 70B, the largest version of its popular coding model. In this article, we'll cover how you can easily get up and running with the new codellama-70b.

Like its smaller siblings, there are three variations of the codellama-70b model:

- instruct - This is fine-tuned to generate helpful and safe answers in natural language
- python - A specialized variation of Code Llama further fine-tuned on 100B tokens of Python code
- code - The base model for code completion

Download from Meta

If you are an experienced researcher or developer, you can submit a request to download the models directly from Meta. N.B. The 70B model is 131GB and requires a very powerful computer 😅. You can also find sample code to load Code Llama models and run inference on GitHub.
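As a rough sanity check on that download size, the sketch below estimates how much space the weights alone take. It assumes 70 billion parameters stored at 2 bytes each (16-bit weights); both numbers are assumptions for illustration, and the function name is hypothetical.

```python
def estimate_weight_size(num_params: float, bytes_per_param: float) -> tuple[float, float]:
    """Return (decimal GB, binary GiB) needed just to hold the model weights."""
    total_bytes = num_params * bytes_per_param
    return total_bytes / 1e9, total_bytes / 2**30


# Assumed values: 70e9 parameters, 2 bytes per parameter (16-bit weights).
gb, gib = estimate_weight_size(70e9, 2)
print(f"Weights alone: about {gb:.0f} GB ({gib:.0f} GiB)")
```

The estimate lands in the same range as the 131GB figure. Running inference needs additional memory on top of the weights, which is why quantized builds are the practical choice on most machines.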

Download from Hugging Face

The models are also available in the Hugging Face Transformers format.
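If you have the hardware, loading a variant with Transformers might look like the sketch below. The repo IDs are assumed to follow the naming used for the smaller Code Llama models on the Hub, so verify them before use. The helper names `repo_id` and `complete` are hypothetical, and `device_map="auto"` assumes the `accelerate` package is installed.

```python
# Assumed Hugging Face repo IDs for the three 70B variants; verify on the Hub.
REPO_IDS = {
    "code": "codellama/CodeLlama-70b-hf",
    "python": "codellama/CodeLlama-70b-Python-hf",
    "instruct": "codellama/CodeLlama-70b-Instruct-hf",
}


def repo_id(variant: str) -> str:
    """Map a variant name ("code", "python", "instruct") to its repo ID."""
    try:
        return REPO_IDS[variant]
    except KeyError:
        raise ValueError(f"unknown variant {variant!r}; expected one of {sorted(REPO_IDS)}") from None


def complete(prompt: str, variant: str = "code", max_new_tokens: int = 64) -> str:
    """Download the chosen variant (very large) and generate a completion."""
    # Imported lazily so repo_id() works without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    name = repo_id(variant)
    tokenizer = AutoTokenizer.from_pretrained(name)
    model = AutoModelForCausalLM.from_pretrained(
        name,
        torch_dtype=torch.bfloat16,  # 16-bit weights to halve memory versus float32
        device_map="auto",           # spread layers across the available devices
    )
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Example call (triggers the full download):
# print(complete("def fibonacci(n):"))
```

The base `code` variant is used as the default here because plain completion needs no chat formatting; the instruct variant expects its own prompt template.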

Run Locally with Ollama

If you are on Mac or Linux, download and install Ollama and then simply run the appropriate command for the model you want:

- Instruct Model -

ollama run codellama:70b

- Python Model -

ollama run codellama:70b-python

- Code/Base Model -

ollama run codellama:70b-code

Check the Ollama docs for more info and example prompts.
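Once a model has been pulled, you can also call it from a script through Ollama's local REST API instead of the interactive prompt. The following is a minimal sketch that assumes the Ollama server is running on its default address, `http://localhost:11434`; the helper names are hypothetical, and the tags are the ones from the commands above.

```python
import json
import urllib.request

# Tags taken from the `ollama run` commands above.
OLLAMA_TAGS = {
    "instruct": "codellama:70b",
    "python": "codellama:70b-python",
    "code": "codellama:70b-code",
}


def build_request(prompt: str, variant: str = "instruct",
                  host: str = "http://localhost:11434") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": OLLAMA_TAGS[variant], "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, variant: str = "instruct") -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, variant)) as resp:
        return json.loads(resp.read())["response"]


# Example call (requires a running Ollama server with the model pulled):
# print(generate("Write a function that reverses a string.", "instruct"))
```

Setting `"stream": False` returns one JSON object rather than a stream of partial responses, which keeps the parsing to a single `json.loads` call.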

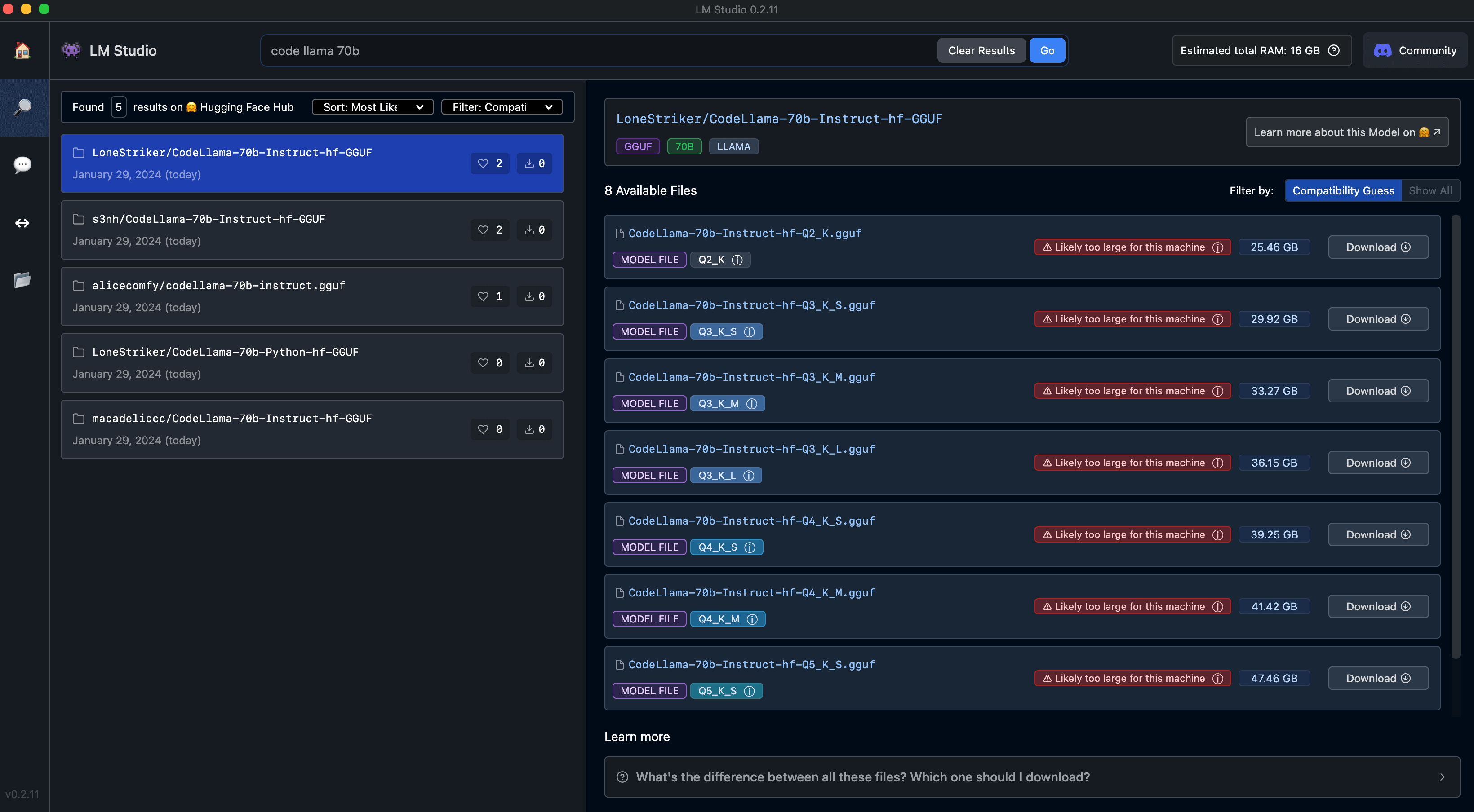

Run Locally with LM Studio

Alternatively, you can use LM Studio, which is available for Mac, Windows, or Linux. Search for "Code Llama 70B" in the app and you will be presented with the available download options.

Use Perplexity

This is one of the easiest ways to try codellama-70b. Simply navigate to the Perplexity Labs Playground (labs.pplx.ai) and select the model from the provided options. Check out the video below, and no, it wasn't sped up 🤩

Directly in Your IDE with Continue

Continue is an open-source autopilot for VS Code and JetBrains. It supports Code Llama via Ollama or LM Studio, as covered above. Alternatively, you can access codellama-70b via API using TogetherAI.
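For the API route, a call might look like the sketch below. It rests on several assumptions to check against TogetherAI's documentation: that the service exposes an OpenAI-style chat completions endpoint at `https://api.together.xyz/v1/chat/completions`, that the model is listed as `codellama/CodeLlama-70b-Instruct-hf`, and that your key is stored in an environment variable named `TOGETHER_API_KEY`. The helper names are hypothetical.

```python
import json
import os
import urllib.request

# Assumed endpoint and model ID; confirm both in the TogetherAI docs.
TOGETHER_URL = "https://api.together.xyz/v1/chat/completions"
TOGETHER_MODEL = "codellama/CodeLlama-70b-Instruct-hf"


def build_chat_request(prompt: str, api_key: str,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request carrying one user message."""
    payload = {
        "model": TOGETHER_MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        TOGETHER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the text of the first choice."""
    # TOGETHER_API_KEY is an assumed variable name, not an official requirement.
    request = build_chat_request(prompt, os.environ["TOGETHER_API_KEY"])
    with urllib.request.urlopen(request) as resp:
        return json.loads(resp.read())["choices"][0]["message"]["content"]


# Example call (requires a valid API key and network access):
# print(ask("Explain what a Python decorator does in two sentences."))
```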

We'll continue to update this article as more resources become available.