A new paper from Berkeley researchers presents a state-of-the-art approach for reconstructing detailed 3D models of humans and tracking them over time in video. The research was enabled by Stability AI, which provided critical compute resources for developing and training the models.

The paper details an approach that takes any monocular video and jointly reconstructs the people in it in 3D while tracking their identities over time. The system reliably tracks multiple people even through challenges such as occlusions and unusual poses.

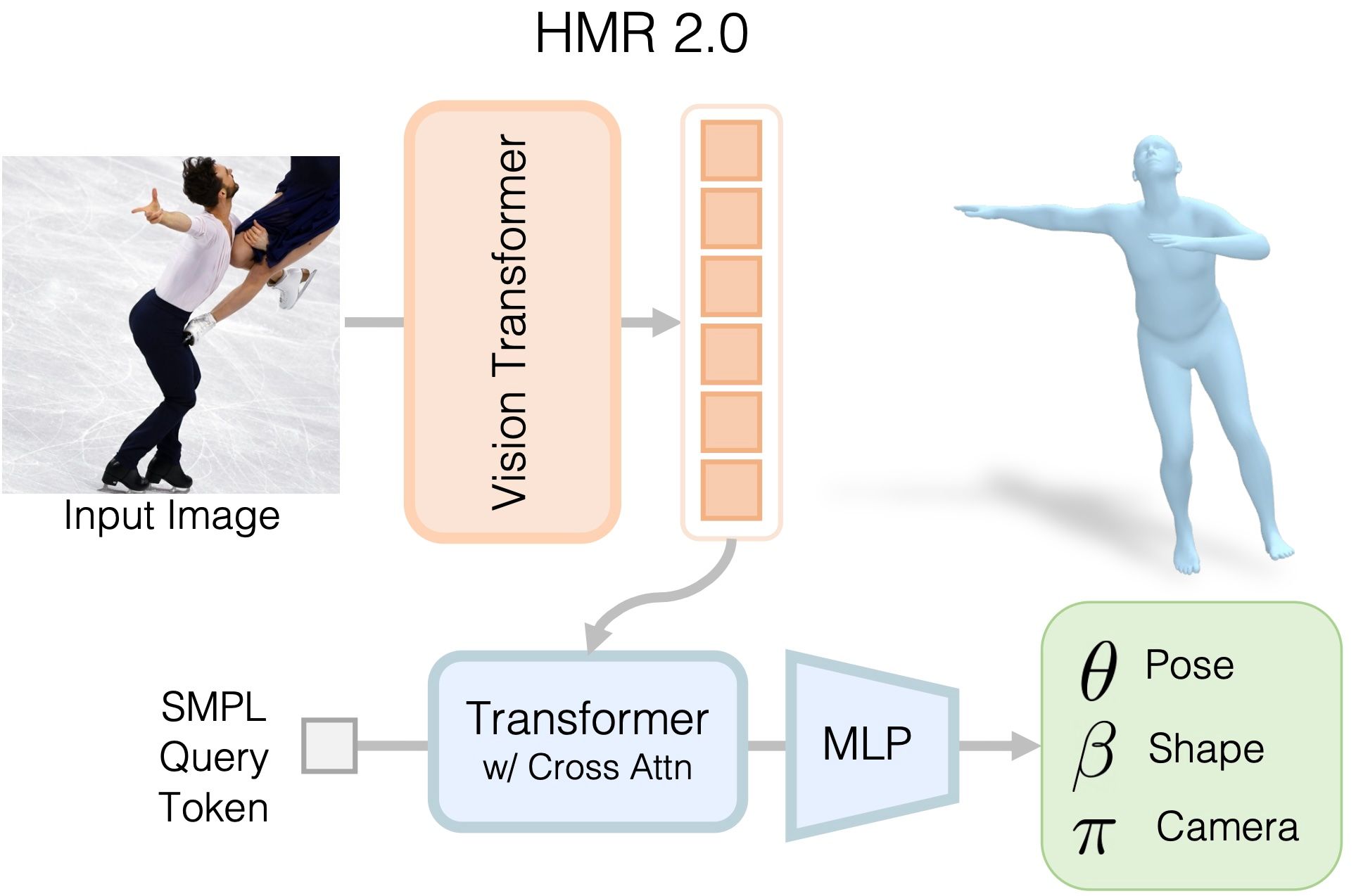

At the core of the system is Human Mesh Recovery (HMR) 2.0, a fully "transformerized" neural network for predicting 3D human meshes from single images. It uses the self-attention mechanism of transformers to capture spatial context in the image and estimate the person's 3D body shape and pose, expressed as parameters of the SMPL body model. The researchers show that HMR 2.0 achieves state-of-the-art results for 3D pose estimation, significantly improving on earlier convolutional architectures such as the original HMR model.
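As a rough illustration of how attention can turn image features into body-model parameters, here is a single attention head in plain Python. One query vector attends over a set of image patch tokens, and a linear readout maps the resulting context vector to a parameter vector. It is loosely inspired by the idea of a transformer head that regresses body-model parameters (HMR 2.0 outputs SMPL body-model parameters). Everything here is a simplifying assumption: there is no ViT backbone, no learned key/value projections, only a single head, and the inputs are random toy data. The function names (`cross_attend`, `regress_params`) and sizes are hypothetical. It is not the 4DHumans implementation.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(query, tokens):
    """Scaled dot-product attention of a single query over image tokens."""
    d = len(query)
    weights = softmax([dot(query, t) / math.sqrt(d) for t in tokens])
    # Attention-weighted sum of tokens: one context vector summarising the image.
    context = [sum(w * t[i] for w, t in zip(weights, tokens)) for i in range(d)]
    return context, weights


def regress_params(query, tokens, w_out):
    """Hypothetical readout: linear map from the attended context to body parameters."""
    context, weights = cross_attend(query, tokens)
    params = [dot(row, context) for row in w_out]
    return params, weights


if __name__ == "__main__":
    random.seed(0)
    DIM, N_TOKENS, N_PARAMS = 8, 16, 10  # arbitrary toy sizes, not HMR 2.0's
    tokens = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(N_TOKENS)]
    query = [random.gauss(0, 1) for _ in range(DIM)]
    w_out = [[random.gauss(0, 0.1) for _ in range(DIM)] for _ in range(N_PARAMS)]
    params, weights = regress_params(query, tokens, w_out)
    print(len(params), round(sum(weights), 6))
```

Cross-attention is used here because it is the simplest way to pool many image tokens into one fixed-size output. A full model would stack several such layers, each typically also containing self-attention and feed-forward sublayers.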

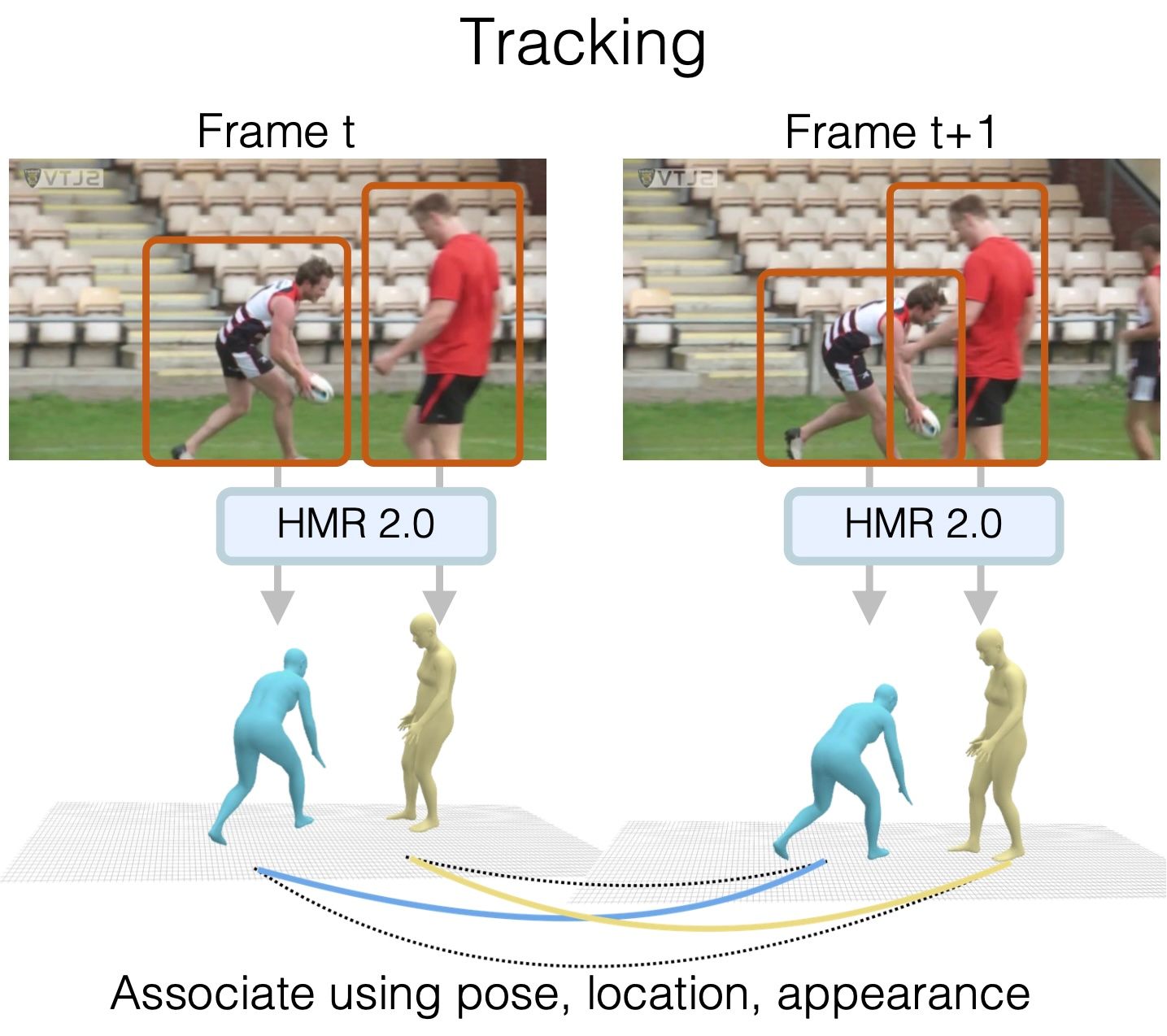

By feeding HMR 2.0 pose predictions into a 3D tracking framework, the researchers built 4DHumans, a system that jointly reconstructs and tracks people over time. It links identities across frames by establishing correspondences between the predicted 3D poses. 4DHumans achieves state-of-the-art performance on multi-person 3D tracking.
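The core idea of linking identities through 3D pose can be sketched as a bipartite matching problem. First, score every existing track against every new detection by the mean distance between corresponding 3D joints. Then pick the lowest-cost one-to-one assignment and start new tracks for detections that no track claims. This is a simplified illustration under stated assumptions, not the 4DHumans tracker, which builds on the PHALP tracking framework and also uses cues such as appearance and location. The function names and the `max_cost` gate are hypothetical. The exhaustive matcher stands in for the Hungarian algorithm and is practical only for a handful of people.

```python
import math
from itertools import permutations


def pose_distance(a, b):
    """Mean Euclidean distance between corresponding 3D joints of two poses."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def best_assignment(cost):
    """Exhaustive minimum-cost one-to-one matching (stand-in for the Hungarian algorithm)."""
    n_rows = len(cost)
    n_cols = len(cost[0]) if n_rows else 0
    if n_rows > n_cols:
        # Enumerate over the smaller side: solve the transpose, then swap indices back.
        flipped = best_assignment([list(col) for col in zip(*cost)])
        return [(r, c) for c, r in flipped]
    best_total, best_pairs = math.inf, []
    for cols in permutations(range(n_cols), n_rows):
        pairs = list(zip(range(n_rows), cols))
        total = sum(cost[r][c] for r, c in pairs)
        if total < best_total:
            best_total, best_pairs = total, pairs
    return best_pairs


def update_tracks(tracks, detections, max_cost, next_id):
    """Link new 3D pose detections to existing tracks.

    tracks: dict mapping track id -> last 3D pose (list of (x, y, z) joints).
    Returns the updated track dict and the next unused id.
    """
    track_ids = list(tracks)
    cost = [[pose_distance(tracks[t], d) for d in detections] for t in track_ids]
    updated, matched = {}, set()
    for r, c in best_assignment(cost):
        if cost[r][c] <= max_cost:  # reject implausibly large jumps between frames
            updated[track_ids[r]] = detections[c]
            matched.add(c)
    # Detections that no track claimed start new identities.
    for c, det in enumerate(detections):
        if c not in matched:
            updated[next_id] = det
            next_id += 1
    return updated, next_id


if __name__ == "__main__":
    person_a = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0)]  # toy two-joint "skeletons"
    person_b = [(5.0, 0.0, 0.0), (5.0, 1.0, 0.0)]
    tracks, next_id = update_tracks({}, [person_a, person_b], max_cost=1.0, next_id=0)
    # Next frame: both people move slightly and the detector lists them in swapped order.
    moved_b = [(5.1, 0.0, 0.0), (5.1, 1.0, 0.0)]
    moved_a = [(0.1, 0.0, 0.0), (0.1, 1.0, 0.0)]
    tracks, next_id = update_tracks(tracks, [moved_b, moved_a], max_cost=1.0, next_id=next_id)
    print(tracks)  # ids 0 and 1 still follow person A and person B
```

In this sketch, a track that finds no match is simply dropped. Trackers designed to handle occlusion typically keep such tracks alive for several frames, so a person who reappears keeps the same identity.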

Beyond tracking, the team shows that HMR 2.0's accurate 3D poses also benefit action recognition: using pose features from HMR 2.0, they achieve state-of-the-art results on AVA, a challenging benchmark for detecting human actions in video.

The work was enabled by Stability AI's generous compute grant, which provided access to high-performance cloud infrastructure. With continued advances in transformer architectures and growing compute, techniques like 4DHumans could unlock new applications in robotics, graphics, biomechanics, and other fields that require a fine-grained 4D understanding of humans in motion.

The team has shared the code for 4DHumans, as well as demos on Hugging Face and Google Colab.