IBM, NASA, the European Space Agency (ESA) and allied institutions have open-sourced dramatically downsized versions of their leading Earth-observation models. These “tiny” and “small” variants of TerraMind and Prithvi retain much of their original power yet are light enough to run on devices from smartphones to orbiting satellites.

Key Points

- The new models drop hardware barriers, enabling on-device inference even in remote or disconnected environments.

- Performance loss is under 10% relative to full models, while model size shrinks by orders of magnitude.

- Satellites could upload tiny decoder heads mid-orbit, transforming them into software-defined sensing platforms.

When IBM and its partners first launched TerraMind and Prithvi, their strength was undeniable — but so were their computational demands. Running inference required powerful hardware, effectively limiting use cases to labs, cloud clusters, or data centers.

That constraint often forced field users — conservationists, local governments, disaster responders — to offload raw data to centralized servers for inference. Latency, connectivity, and cost all became gating factors.

The newly released tiny and small versions change this calculus. IBM says Prithvi.tiny is 120× smaller than a 600M-parameter version, yet delivers a performance drop of less than 10%. In tests on Unibap’s iX10 satellite computing platform, TerraMind.tiny processed 224×224 images at 325 fps (12 spectral bands, batch size 32), while Prithvi.tiny hit 329 fps on six bands — roughly 2 Gbit/s of inferred data throughput, exceeding Sentinel-2’s raw data rate.

Crucially, the “frozen encoder” architecture means that the heavy upstream model (encoder) is fixed, while small task-specific decoder heads (just ~1–2 MB) can be uploaded later — even when a satellite is already in orbit.

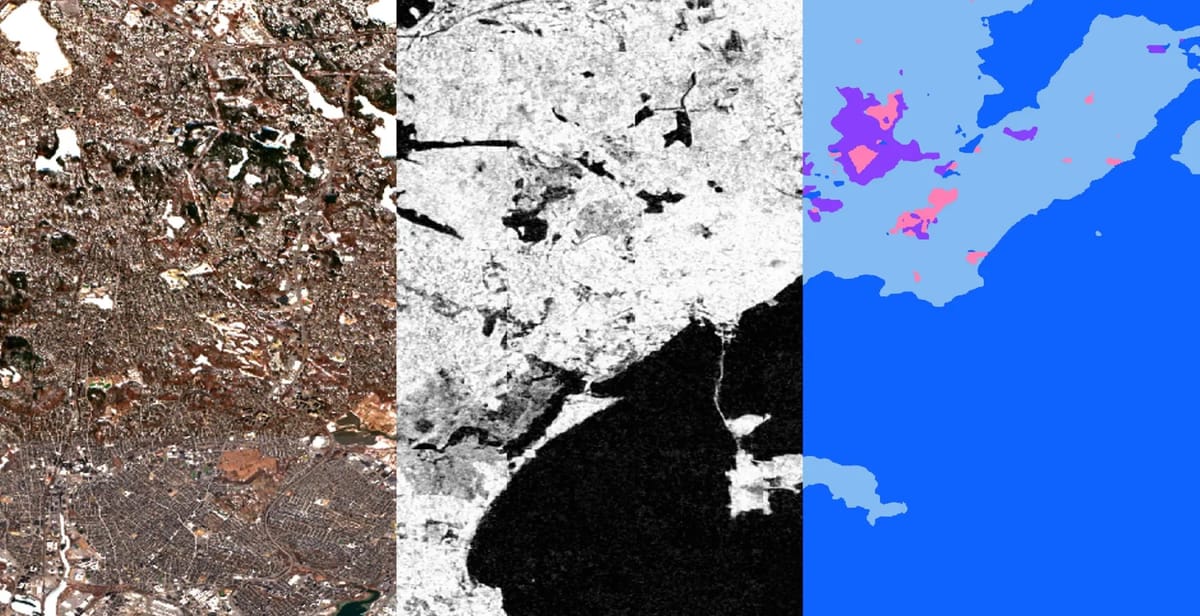

TerraMind is built to handle multiple modalities — radar, optical, topography, elevation, vegetation indices, metadata, and more — and can even generate missing modalities from available ones via a technique the authors call Thinking-in-Modalities (TiM). Its architecture uses a dual-scale transformer (pixel + token) approach, trained on the TerraMesh dataset of ~9 million globally aligned multimodal samples. On the PANGAEA benchmark, it beats many existing EO models.

The Prithvi line already delivered strong performance. With version 2.0, IBM and NASA scaled the model to 300M and 600M parameters and introduced temporal and locational embeddings, a masked autoencoder (MAE) pretraining strategy, and 3D patch embeddings to capture space–time relationships. In benchmarks (GEO-bench), Prithvi-EO-2.0 outperforms its predecessor by ~8 % and surpasses six peer geospatial foundation models.

The open release includes various scales, including a “tiny” variant. The Hugging Face space lists versions such as Prithvi-EO-2.0-tiny, 100M, 300M, and 600M.

Because the models can run on consumer hardware, even a fundraising nonprofit or regional agency with limited infrastructure can fine-tune or deploy these tools. Imagine a ranger station analyzing deforestation patches, or a drone detecting elephants, all offline. The IBM team already showed TerraMind.tiny running on an iPhone 16 Pro from live drone video.

The decoder-upload model means satellites need not carry full task-specific AI onboard. One can upload decoder models later over limited bandwidth, converting even legacy satellites into dynamic sensing platforms. This unlocks new mission flexibility, reduces risk, and accelerates iteration in space.

Satellites generate vast volumes of imagery. With onboard inference, they can pre-filter and send only interesting segments (e.g. disaster zones, deforestation hotspots). That saves latency, downlink cost, and ensures faster response in emergencies.

These models are released under Apache 2.0 on Hugging Face, with code and tooling via IBM’s TerraTorch framework. That means any researcher, startup, or NGO can build EO capabilities without buying tens of thousands in compute upfront.