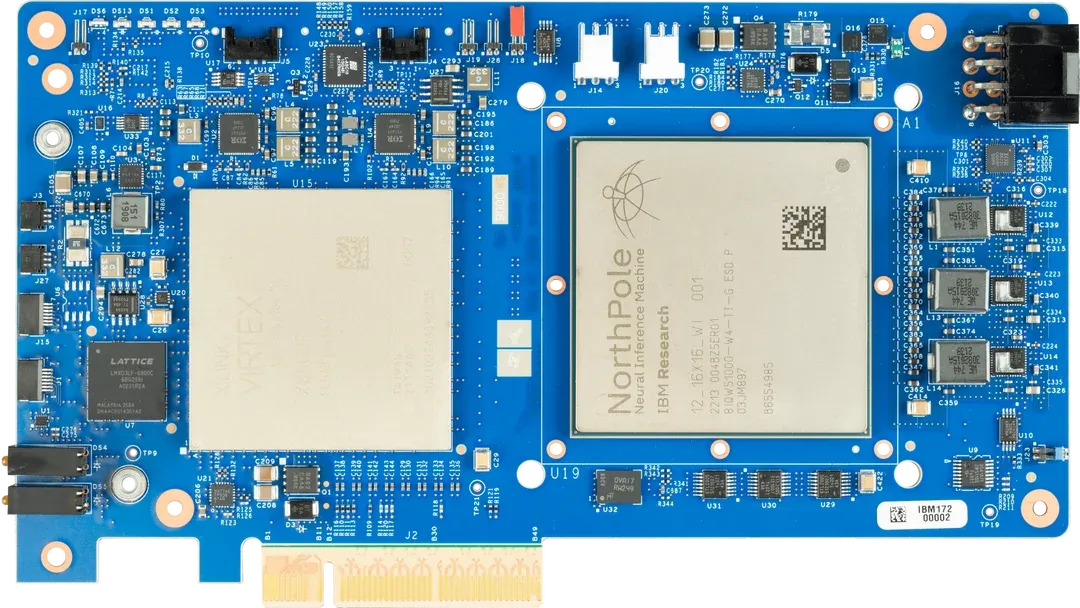

IBM has revealed a new AI chip architecture called NorthPole that could enable significant leaps forward in energy efficiency, speed, and scalability for AI systems. Developed over the past eight years by IBM Research scientist Dharmendra Modha and colleagues at IBM's Almaden lab in San Jose, California, NorthPole represents a radical rethinking of conventional computer chip design.

Traditionally, computer chips have been designed with the processing units and memory kept separate, which leads to the "von Neumann bottleneck": data must constantly be shuttled between memory and the processing units, and those transfers cause delays.

To address this challenge, Modha and his team took a page from neuroscience. Their NorthPole chip tightly integrates processing and memory, so data moves far more efficiently. As a result, AI inferencing on NorthPole is considerably faster than on other chips available today.
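To make the bottleneck concrete, here is a minimal sketch using the roofline model, a standard back-of-the-envelope performance model that is not taken from IBM's work: a chip's attainable throughput is the lesser of its peak compute rate and the rate at which memory can feed it data. All numbers below are hypothetical and chosen only for illustration; they are not NorthPole, GPU, or CPU specifications.

```python
def attainable_ops_per_s(peak_ops_per_s: float, bandwidth_bytes_per_s: float,
                         ops_per_byte: float) -> float:
    """Roofline model: throughput is capped either by compute or by memory traffic."""
    return min(peak_ops_per_s, ops_per_byte * bandwidth_bytes_per_s)


# Hypothetical values for illustration only (not real chip specifications).
PEAK = 100e12        # 100 tera-ops/s of raw compute
INTENSITY = 10.0     # operations performed per byte fetched from memory
OFF_CHIP_BW = 1e12   # 1 TB/s to memory located away from the compute
ON_CHIP_BW = 20e12   # 20 TB/s to memory placed next to the compute

off_chip = attainable_ops_per_s(PEAK, OFF_CHIP_BW, INTENSITY)
on_chip = attainable_ops_per_s(PEAK, ON_CHIP_BW, INTENSITY)
print(f"separate memory:   {off_chip / 1e12:.0f} Tops/s")  # 10 Tops/s (memory-bound)
print(f"integrated memory: {on_chip / 1e12:.0f} Tops/s")   # 100 Tops/s (compute-bound)
```

With identical compute, raising the bandwidth between memory and the processing units lifts the cap from 10 to 100 tera-ops per second in this toy example, which is the intuition behind placing memory right next to compute.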

IBM says NorthPole is more energy-efficient and space-efficient, and has lower latency, than any other chip on the market, and that it runs roughly 4,000 times faster than its predecessor, TrueNorth. On the ResNet-50 benchmark, NorthPole achieved up to 25 times better energy efficiency, along with far lower latency, than leading 12-nm GPUs and 14-nm CPUs.

Modha attributes NorthPole's efficiency to its brain-inspired architecture. The chip packs 22 billion transistors into a space roughly the size of a postage stamp, organized as a dense array of 256 cores, each of which can perform 2,048 operations per cycle at 8-bit precision. At 4-bit and 2-bit precision, that figure doubles and quadruples, respectively. As Modha puts it, the chip is an "entire network on a chip".
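The chip-wide figures follow from simple multiplication. A minimal sketch using only the core count and per-core rates quoted above; converting these to operations per second would also require the chip's clock frequency, which is not given here.

```python
CORES = 256
OPS_PER_CORE_PER_CYCLE_8BIT = 2048

# Lower precision multiplies per-core throughput: 8-bit x1, 4-bit x2, 2-bit x4.
PRECISION_SCALE = {8: 1, 4: 2, 2: 4}


def ops_per_cycle(bits: int) -> int:
    """Total operations per clock cycle across all cores at a given precision."""
    return CORES * OPS_PER_CORE_PER_CYCLE_8BIT * PRECISION_SCALE[bits]


for bits in (8, 4, 2):
    print(f"{bits}-bit: {ops_per_cycle(bits):,} ops per cycle")
```

Running it prints 524,288, 1,048,576, and 2,097,152 operations per cycle for 8-, 4-, and 2-bit precision, respectively.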

However, NorthPole's greatest strength, its onboard memory, is also its main limitation: the chip stays efficient only as long as a model fits in that memory, and its efficiency would suffer if it had to fetch data from elsewhere. To work around this, NorthPole uses a "scale-out" approach, handling larger neural networks by splitting them into smaller sub-networks that each fit in a chip's memory and spreading those sub-networks across multiple NorthPole chips.
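A minimal sketch of the scale-out idea, assuming a network can be split between consecutive layers and that each sub-network only needs to fit within a fixed per-chip memory budget. This greedy partitioning is a generic illustration, not IBM's actual mapping method, and the function name, layer sizes, and budget are all hypothetical.

```python
from typing import List


def partition_layers(layer_sizes_mb: List[float], budget_mb: float) -> List[List[int]]:
    """Greedily group consecutive layers so each group's total size fits the budget.

    Hypothetical helper for illustration; each returned group stands for one chip.
    """
    groups: List[List[int]] = []
    current: List[int] = []
    used = 0.0
    for i, size in enumerate(layer_sizes_mb):
        if size > budget_mb:
            raise ValueError(f"layer {i} ({size} MB) exceeds the per-chip budget")
        if used + size > budget_mb:
            # Current chip is full: close this sub-network and start a new one.
            groups.append(current)
            current, used = [], 0.0
        current.append(i)
        used += size
    if current:
        groups.append(current)
    return groups


# Hypothetical layer weight sizes (MB) and a hypothetical per-chip budget.
layers = [40.0, 80.0, 60.0, 90.0, 30.0, 70.0]
print(partition_layers(layers, budget_mb=150.0))  # [[0, 1], [2, 3], [4, 5]]
```

Each group would be placed on its own chip, with intermediate activations passed from one chip to the next. A real compiler would also weigh activation sizes and inter-chip bandwidth, which this sketch ignores.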

“We can’t run GPT-4 on this, but we could serve many of the models enterprises need,” Modha said. “And, of course, NorthPole is only for inferencing.”

Possible applications for NorthPole center on AI inferencing, especially real-time sensory processing. In its trial phase, the focus was predominantly on computer vision tasks, owing in part to funding from the U.S. Department of Defense. However, its versatility extends to fields like natural language processing and speech recognition. Autonomous systems, robotics, surveillance, and other edge use cases requiring instant analysis of high-bandwidth data are prime candidates. For example, NorthPole could enable self-driving cars to handle complex real-world scenarios.

While still at an early stage, NorthPole shows the dramatic gains possible from reimagining computer architecture for the AI era. It also has room to improve: NorthPole is fabricated on a 12-nm process, while the state of the art in chip manufacturing currently stands at 3 nm and IBM is already researching 2-nm nodes, so moving the design to a newer process could bring further gains.

With Moore's Law slowing, breakthroughs like NorthPole that unlock orders-of-magnitude performance leaps will be instrumental in AI's continued scaling. IBM's research exemplifies how fundamental engineering can overcome constraints and open new frontiers.