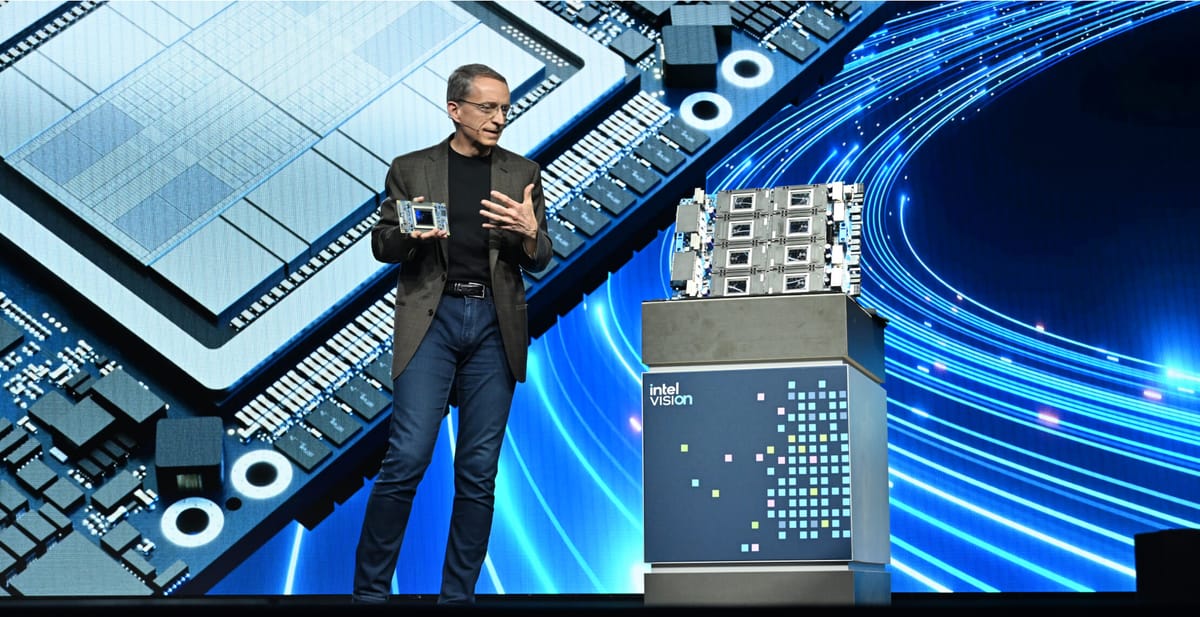

At the Intel Vision 2024 conference in Phoenix, Arizona, Intel unveiled its latest artificial intelligence chip, the Gaudi 3 AI accelerator, taking direct aim at NVIDIA's dominant position in the AI chip market. Intel says the new chip is designed to bring performance, openness, and choice to enterprise generative AI while addressing the challenges businesses face in scaling AI initiatives.

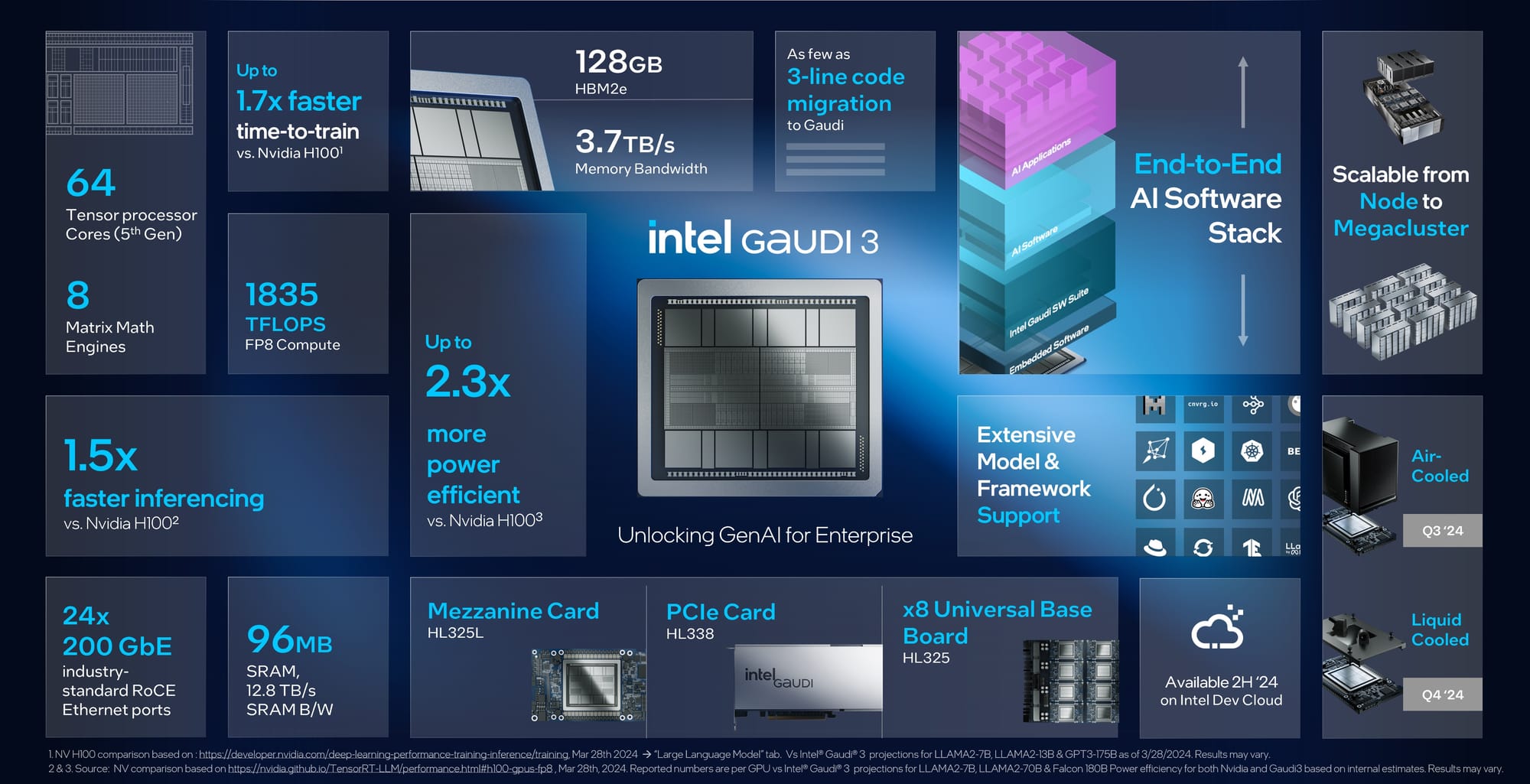

According to Intel, the Gaudi 3 delivers 50% better inference performance on average and 40% better power efficiency compared to NVIDIA's H100 GPU, at a fraction of the cost. In terms of training performance, Intel projects that Gaudi 3 will deliver up to 1.7x faster time-to-train than the H100.

The Gaudi 3 chip is built on a 5nm process, a step up from the 7nm process used in its predecessor, the Gaudi 2. The denser process leaves room for more transistors and more advanced features, resulting in increased AI computing power, networking bandwidth, and memory bandwidth.

"Innovation is advancing at an unprecedented pace, all enabled by silicon – and every company is quickly becoming an AI company," said Intel CEO Pat Gelsinger. "Intel is bringing AI everywhere across the enterprise, from the PC to the data center to the edge."

The Gaudi 3 will be available to original equipment manufacturers (OEMs), including Dell Technologies, HPE, Lenovo, and Supermicro, broadening the AI data center market offerings for enterprises. Intel also announced new Gaudi accelerator customers and partners, such as Bharti Airtel, Bosch, CtrlS, IBM, IFF, Landing AI, Ola, NAVER, NielsenIQ, Roboflow, and Seekr.

In addition to the Gaudi 3 accelerator, Intel provided updates on its next-generation products and services across all segments of enterprise AI. The company introduced a new brand for its next-generation data center, cloud, and edge processors: Intel Xeon 6. Intel says these processors will deliver greater efficiency and increased AI performance.

Intel's announcement comes as NVIDIA continues to dominate the AI chip market, with an estimated 80% share. However, Intel believes its open approach and industry-standard Ethernet networking will make it a strong option for enterprises looking for alternatives to NVIDIA's proprietary solutions.

Intel is also focusing on its software stack, touting its end-to-end support and open-source nature. The company is working with Hugging Face, PyTorch, DeepSpeed, and Mosaic to ease the software porting process and enable faster deployment of Gaudi 3 systems.

"We are working with the software ecosystem to build open reference software, as well as building blocks that allow you to stitch together a solution that you need, rather than be forced into buying a solution," said Sachin Katti, senior vice president of Intel's networking group.

The data center AI market is expected to grow as cloud providers and businesses build infrastructure to deploy AI software, suggesting there is room for competitors even if NVIDIA continues to make the vast majority of AI chips. Running generative AI and buying NVIDIA GPUs can be expensive, and companies are looking for additional suppliers to help bring costs down.

Intel's push into the AI chip market comes at a critical time for the company as it works to reinvent itself as a leader in advanced chip technology while building out its chip manufacturing capabilities in the US and abroad. The company's stock has underperformed rivals NVIDIA and AMD, but Intel is betting that its open approach and competitive pricing will appeal to enterprises wary of relying on a single supplier for high-value needs like AI hardware.