The Linux Foundation's LF AI & Data Foundation has unveiled the Open Platform for Enterprise AI (OPEA), an initiative to accelerate the development of open, multi-provider, composable generative AI systems. The effort brings together companies such as Intel, Cloudera, Hugging Face, Red Hat, SAS, and VMware (acquired by Broadcom) to foster innovation and standardization in generative AI.

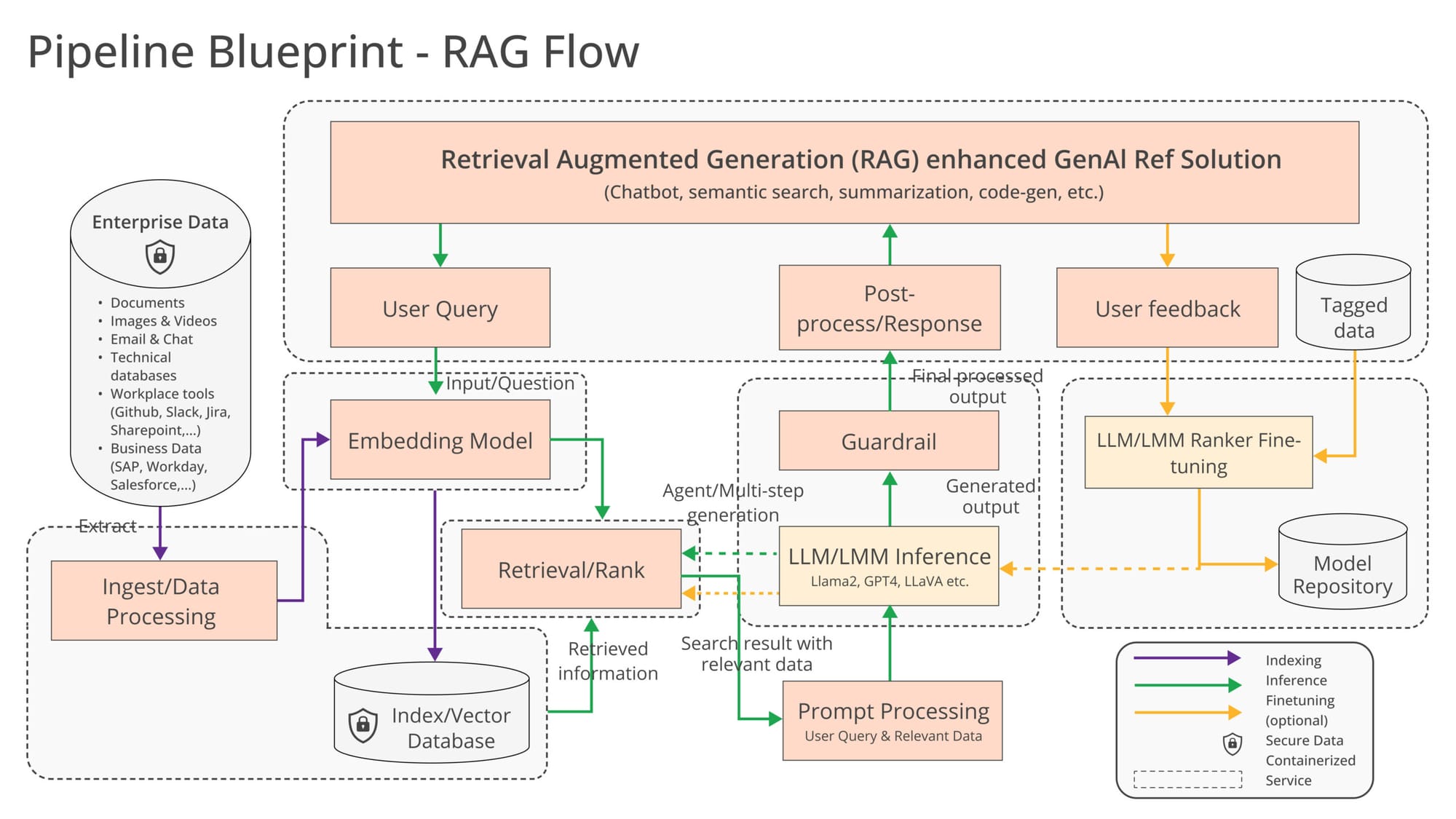

The launch of OPEA comes as generative AI projects, particularly those using Retrieval-Augmented Generation (RAG), gain momentum for their ability to unlock value from existing data repositories. The rapid pace of development, however, has fragmented tools, techniques, and solutions, which complicates enterprise adoption.

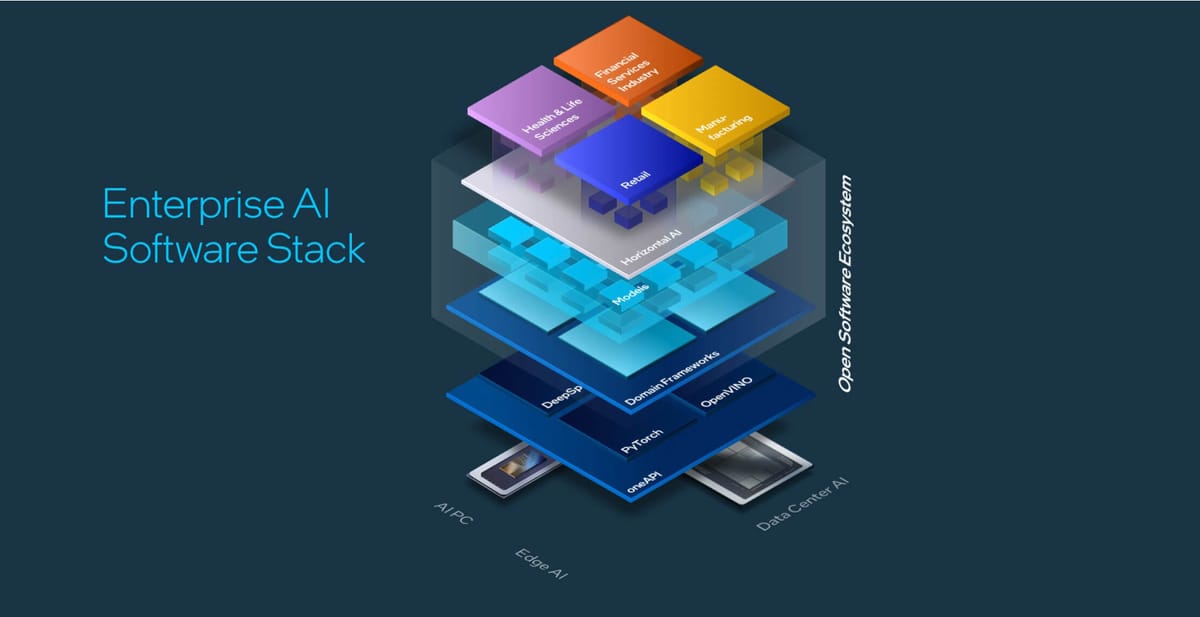

OPEA aims to address this fragmentation by working with the industry to standardize components, including frameworks, architecture blueprints, and reference solutions that demonstrate performance, interoperability, trustworthiness, and enterprise-grade readiness. Its detailed, composable framework is intended to help enterprises accelerate containerized AI integration and delivery and to develop their own vertical use cases.

"OPEA, with the support of the broader community, will address critical pain points of RAG adoption and scale today. It will also define a platform for the next phases of developer innovation that harnesses the potential value generative AI can bring to enterprises and all our lives," said Melissa Evers, Vice President of Software Engineering Group and General Manager of Strategy to Execution at Intel.

Intel, a key contributor to OPEA, plans to publish a technical conceptual framework; release reference implementations for generative AI pipelines on secure solutions based on Intel Xeon processors and Intel Gaudi AI accelerators; and continue adding infrastructure capacity in the Intel Tiber Developer Cloud for ecosystem development, AI acceleration, and validation of RAG and future pipelines.

OPEA offers a holistic view of a generative AI workflow that includes RAG and other functionalities, with building blocks such as generative AI models, data processing, embedding models/services, indexing/vector/graph data stores, retrieval/ranking, prompt engines, guardrails, and memory systems. The open source community and enterprise partners will collaborate to evolve this framework to meet developers' needs.
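To make the idea of composable building blocks concrete, here is a minimal sketch of how an embedding function, a vector store, a prompt engine, a guardrail, and a model might be wired into one RAG flow. Every name in it (`toy_embed`, `InMemoryVectorStore`, `build_prompt`, `redact_guardrail`, `echo_model`, `RagPipeline`) is hypothetical and chosen for illustration; none of it is OPEA's API, and the letter-frequency "embedding" and echo "model" are stand-ins for real services.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Illustrative sketch only: all names are hypothetical, not part of OPEA.
Vector = List[float]


def toy_embed(text: str) -> Vector:
    """Stand-in for an embedding model: normalized letter-frequency vector."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1.0
    norm = math.sqrt(sum(c * c for c in counts)) or 1.0
    return [c / norm for c in counts]


@dataclass
class InMemoryVectorStore:
    """Stand-in for an indexing/vector data store with cosine-similarity retrieval."""
    embed: Callable[[str], Vector]
    items: List[Tuple[Vector, str]] = field(default_factory=list)

    def index(self, docs: List[str]) -> None:
        for doc in docs:
            self.items.append((self.embed(doc), doc))

    def retrieve(self, query: str, k: int = 2) -> List[str]:
        q = self.embed(query)
        # Vectors are unit length, so the dot product is the cosine similarity.
        ranked = sorted(
            self.items,
            key=lambda item: sum(a * b for a, b in zip(q, item[0])),
            reverse=True,
        )
        return [doc for _, doc in ranked[:k]]


def build_prompt(question: str, context: List[str]) -> str:
    """Stand-in for a prompt engine: joins retrieved context with the question."""
    return "Context:\n" + "\n".join(context) + "\nQuestion: " + question


def redact_guardrail(text: str, blocked: Tuple[str, ...] = ("password",)) -> str:
    """Stand-in for a guardrail: redacts blocked words from the output."""
    for word in blocked:
        text = text.replace(word, "[REDACTED]")
    return text


def echo_model(prompt: str) -> str:
    """Stand-in for a generative model: echoes the prompt it received."""
    return "Answer derived from -> " + prompt


@dataclass
class RagPipeline:
    """Composes the blocks; any one can be swapped without touching the others."""
    store: InMemoryVectorStore
    prompt_engine: Callable[[str, List[str]], str]
    guardrail: Callable[[str], str]
    model: Callable[[str], str]

    def run(self, question: str) -> str:
        context = self.store.retrieve(question)
        prompt = self.prompt_engine(question, context)
        return self.guardrail(self.model(prompt))


if __name__ == "__main__":
    store = InMemoryVectorStore(embed=toy_embed)
    store.index(["OPEA targets composable generative AI.", "RAG retrieves context."])
    pipeline = RagPipeline(store, build_prompt, redact_guardrail, echo_model)
    print(pipeline.run("What does RAG do?"))
```

Because each stage is passed in as a plain callable or small object, replacing the toy embedding with a real embedding service, or the echo model with a hosted LLM, would not require changes to `RagPipeline` itself. That is the property "composable" refers to here.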

Alongside the composable framework, OPEA provides an assessment framework for grading and evaluating generative AI flows to help ensure they are enterprise-ready. Flows are evaluated against vectors such as performance, trustworthiness, scalability, and resilience, and the assessment is organized into four categories: performance, features, trustworthiness, and enterprise readiness.
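As a rough illustration of grading a flow across the four assessment categories, the sketch below records one score per category and reports the weakest one. The 0-to-1 scale, the 0.7 threshold, and the names `Assessment`, `weakest`, and `is_enterprise_ready` are assumptions made for this example; the article does not describe OPEA's actual scoring method.

```python
from dataclasses import dataclass
from typing import Dict

# The four category names follow the article; everything else is hypothetical.
CATEGORIES = ("performance", "features", "trustworthiness", "enterprise_readiness")


@dataclass(frozen=True)
class Assessment:
    """Scores for one generative AI flow, each assumed to lie in [0, 1]."""
    scores: Dict[str, float]

    def __post_init__(self) -> None:
        missing = [c for c in CATEGORIES if c not in self.scores]
        if missing:
            raise ValueError(f"missing categories: {missing}")
        for category in CATEGORIES:
            if not 0.0 <= self.scores[category] <= 1.0:
                raise ValueError(f"{category} score must be in [0, 1]")

    def weakest(self) -> str:
        """Return the category with the lowest score."""
        return min(CATEGORIES, key=lambda c: self.scores[c])

    def is_enterprise_ready(self, threshold: float = 0.7) -> bool:
        """Assumed rule: every category must meet the threshold."""
        return all(self.scores[c] >= threshold for c in CATEGORIES)


if __name__ == "__main__":
    result = Assessment({
        "performance": 0.9,
        "features": 0.8,
        "trustworthiness": 0.6,
        "enterprise_readiness": 0.75,
    })
    print(result.weakest(), result.is_enterprise_ready())
```

Requiring every category to clear the threshold, rather than averaging, reflects one plausible reading of "enterprise-ready": a strong performance score should not mask a weak trustworthiness score.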