Google DeepMind has unveiled SIMA, a versatile AI agent capable of following natural language instructions across a wide range of 3D virtual environments, from bespoke research settings to complex commercial video games. This research marks the first time an AI system has demonstrated the ability to ground language in perception and action at such a broad scale.

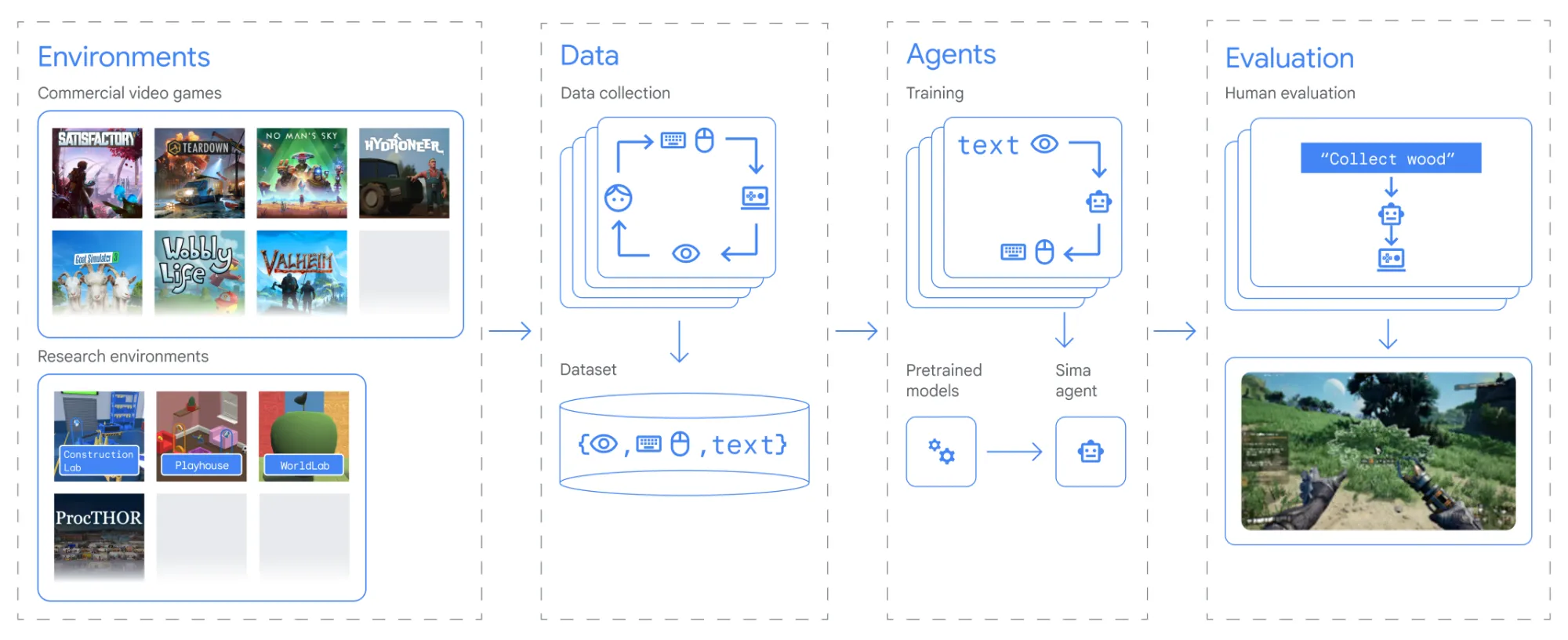

SIMA, short for Scalable, Instructable, Multiworld Agent, builds on advances in large language models and connects them to the embodied world, a key challenge in developing artificial general intelligence. Google DeepMind partnered with eight game studios to train and test SIMA on nine different video games. By training on a diverse set of human gameplay data, including videos, language instructions, and recorded actions, SIMA learns to map visual observations and language commands to keyboard and mouse controls.

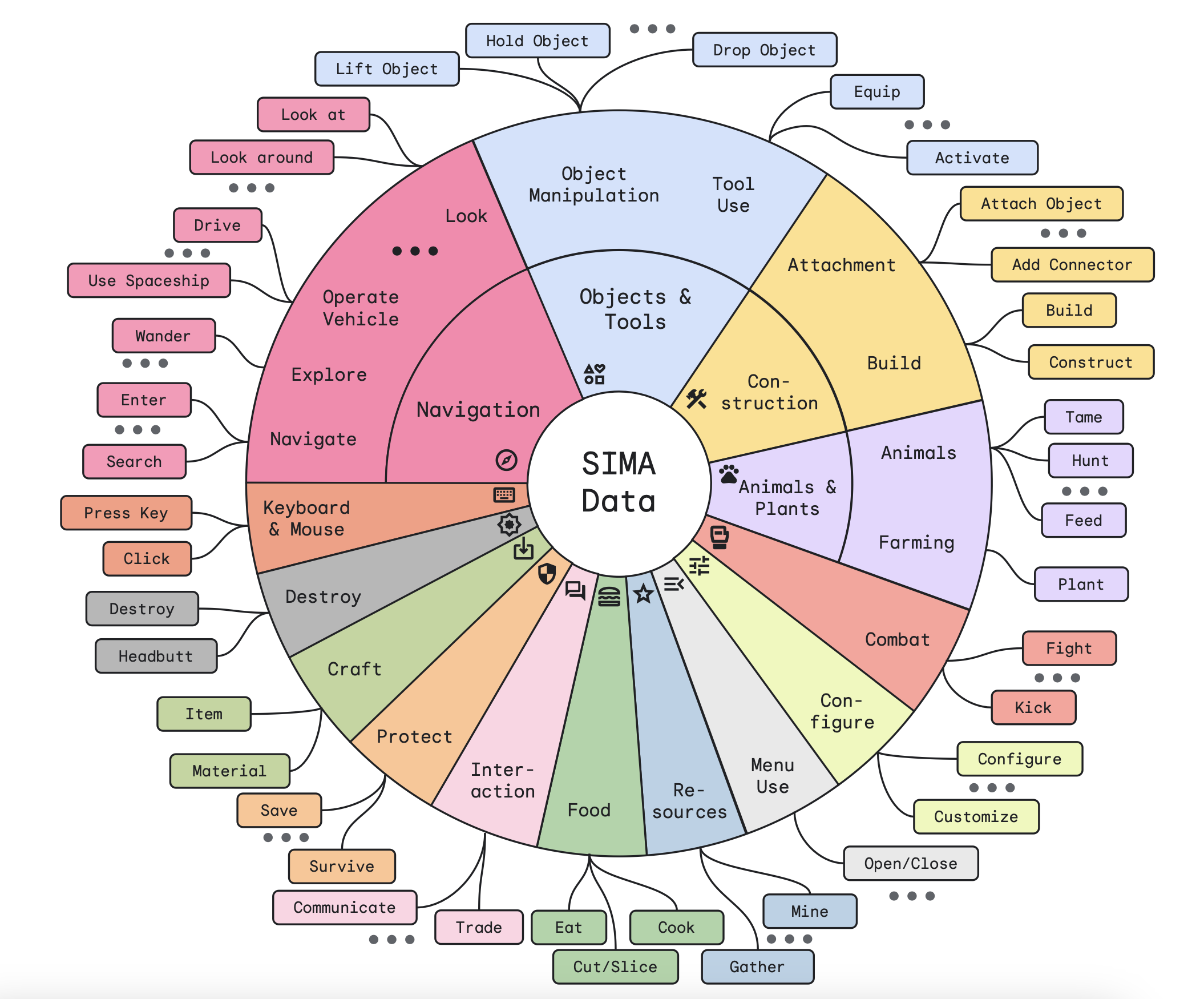

Each of the 3D environments presents its own challenges in perception, navigation, object manipulation, and other complex interactions. The tasks range from navigating the procedurally generated planets of No Man's Sky to building factories in Satisfactory and causing mayhem as a mischievous goat in Goat Simulator 3, testing both SIMA's adaptability and its capacity for grounded language understanding.

The team also used four research environments, including the Construction Lab, a new environment built with Unity in which agents must assemble sculptures from building blocks. These tasks test the agents' object manipulation and intuitive understanding of the physical world.

Importantly, SIMA operates without access to privileged information or bespoke APIs, relying solely on visual input and language instructions, much like a human player would. This approach allows the agent to potentially interact with any virtual environment using the same human-compatible interface.

SIMA was evaluated on 600 basic skills spanning navigation, object interaction, and menu use. The results are promising: the agent trained across many environments significantly outperforms specialized agents trained on individual environments, which suggests that it can transfer knowledge and skills from one setting to another. However, there remains substantial room for improvement, particularly in more complex commercial games and in research environments designed to test advanced physical reasoning and object manipulation.

Despite the challenges ahead, the SIMA project represents a significant milestone in the pursuit of language-driven, embodied AI agents. By grounding language in rich, interactive virtual worlds, DeepMind researchers aim to develop more helpful, general-purpose AI systems capable of safely carrying out a wide range of tasks in both online and real-world environments.

As the project expands its portfolio of games, refines its models, and develops more comprehensive evaluations, SIMA could yield valuable insights into the fundamental challenge of linking language to perception and action. The work is a step toward AI agents that can understand and interact with the world as humans do.