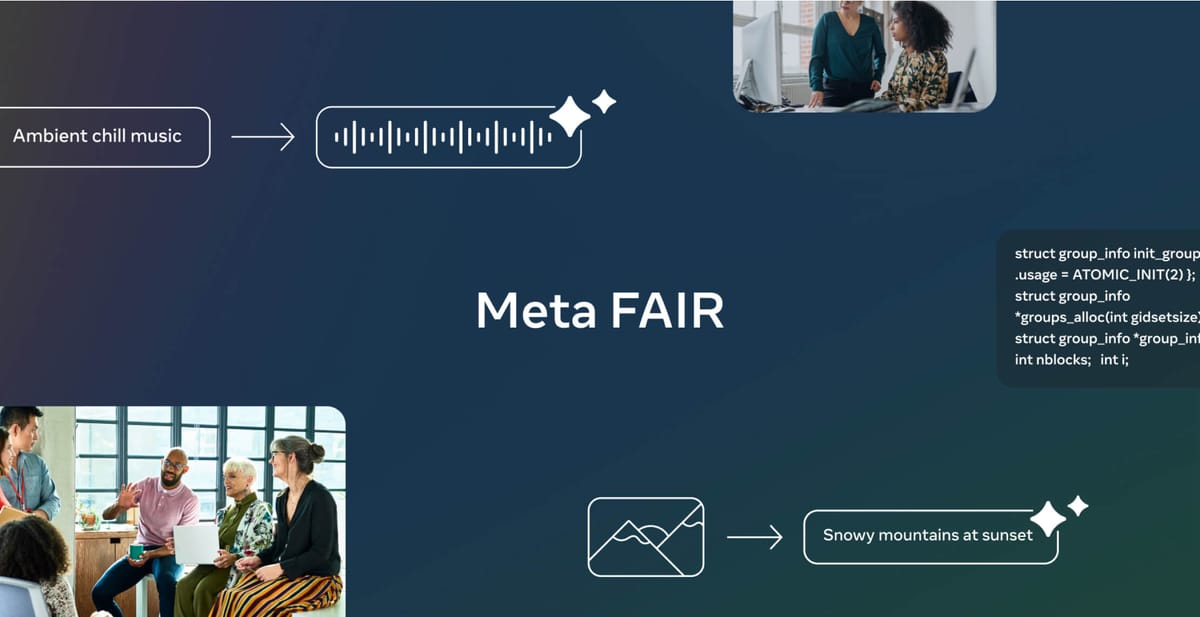

Meta's Fundamental AI Research (FAIR) team has just announced the release of four new AI models, with a focus on encouraging open innovation and responsible advancement in the AI community.

Today is a good day for open science.

As part of our continued commitment to the growth and development of an open ecosystem, today at Meta FAIR we’re announcing four new publicly available AI models and additional research artifacts to inspire innovation in the community and…

AI at Meta (@AIatMeta), June 18, 2024

"We believe that access to cutting-edge AI creates opportunities for everyone – not just a handful of tech giants," said Joelle Pineau, Managing Director of FAIR. "By sharing these models, we're hoping to see the community learn, iterate, and build in exciting new directions."

So what's in this AI goodie bag? Let's take a peek:

Meta Chameleon is a multimodal model family that processes text and images together rather than handling each in a separate system. Meta describes the underlying architecture as able to take any combination of text and images as input and produce any combination as output, which is what would let it move from captioning an image to generating a new scene from a mixed prompt. The 7B and 34B models being released are more limited: they accept mixed-modal inputs but return text-only outputs.

Meta Multi-Token Prediction takes a different approach to training large language models. Instead of predicting only the next word, the model learns to predict several future words at once. The method is said to be up to three times faster than conventional next-word prediction while requiring less data to reach the same level of fluency. The pre-trained models being released target code completion.
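To make the idea of predicting multiple future words concrete, here is a minimal sketch of how training targets might be laid out. It is an illustration only, not Meta's implementation: the function name `multi_token_targets` and the parameter `n_future` are hypothetical, and the integers stand in for token IDs.

```python
from typing import List, Tuple


def multi_token_targets(
    tokens: List[int], n_future: int
) -> List[Tuple[List[int], List[int]]]:
    """Pair each prefix with the next `n_future` tokens as joint targets.

    With n_future=1 this reduces to ordinary next-token prediction.
    (Hypothetical helper for illustration; not part of any Meta release.)
    """
    examples = []
    # Stop early enough that every example has a full set of targets.
    for i in range(1, len(tokens) - n_future + 1):
        context = tokens[:i]              # what the model has seen so far
        targets = tokens[i:i + n_future]  # what it must predict in one step
        examples.append((context, targets))
    return examples


if __name__ == "__main__":
    sequence = [10, 11, 12, 13, 14]  # stand-in token IDs
    for context, targets in multi_token_targets(sequence, n_future=2):
        print(context, "->", targets)
```

Each training position now carries several targets instead of one, which is the intuition behind the claimed efficiency gain. How the model's output heads and loss are actually structured is not covered here.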

Meta JASCO (Joint Audio and Symbolic Conditioning) is a text-to-music generation model that takes additional inputs, such as chords and beats, alongside the text prompt. Users can adjust features like drums and melodies, giving them greater control over the final sound. The research paper and audio samples are available today, with the inference code and pre-trained model to follow.

Meta AudioSeal is an audio watermarking technique designed to detect AI-generated speech within longer audio snippets. Rather than labeling a whole clip, it can pinpoint the AI-generated segments inside it, making it a valuable tool for identifying AI-created content. AudioSeal is said to be up to 485 times faster than previous detection methods and will be released under a commercial license.
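As a rough picture of what pinpointing segments means in practice, the toy sketch below turns a list of per-frame detection scores into flagged time ranges. It does not use AudioSeal's code or API: the function `flagged_segments`, the scores, and the 0.5 threshold are all assumptions made up for illustration.

```python
from typing import List, Optional, Tuple


def flagged_segments(
    scores: List[float], threshold: float = 0.5
) -> List[Tuple[int, int]]:
    """Return (start, end) frame ranges, end exclusive, scoring at or above threshold.

    Hypothetical helper: assumes some detector already produced one score per frame.
    """
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, score in enumerate(scores):
        if score >= threshold and start is None:
            start = i                     # a flagged run begins here
        elif score < threshold and start is not None:
            segments.append((start, i))   # the run ended on the previous frame
            start = None
    if start is not None:                 # run continues to the end of the clip
        segments.append((start, len(scores)))
    return segments


if __name__ == "__main__":
    # Made-up scores: frames 2-4 and 6-7 look AI-generated.
    scores = [0.1, 0.2, 0.9, 0.95, 0.8, 0.1, 0.7, 0.9]
    print(flagged_segments(scores))  # [(2, 5), (6, 8)]
```

The point of the sketch is the output shape: a localized detector yields ranges within a recording, not a single yes-or-no verdict for the whole file.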

Meta's also tackling the thorny issue of diversity in AI. They've developed tools to measure and improve how well text-to-image models represent different geographical and cultural perspectives. It's a step towards ensuring that when AI "imagines" the world, it's not just one narrow slice of it.

It's worth noting that Meta is releasing these models under various licenses, some for research only, others for commercial use.