Meta has released Video Seal, an open-source tool for efficiently watermarking videos. The release addresses growing concerns around verifying AI-generated content and managing digital rights. The social media giant released a research paper, a web demo, as well as the code and models.

Key Points:

- Video Seal can efficiently watermark videos without processing every frame, significantly reducing computational overhead compared to existing methods

- The system is more robust than strong baselines against common video manipulations like compression, cropping, and brightness adjustments

- Meta's decision to open-source Video Seal, including code and models, could accelerate adoption and further research in video watermarking

With tools like Sora, Runway and Kling making it increasingly difficult to distinguish authentic content from AI-generated content, digital watermarking is more important than ever.

Video Seal is a significant advancement in digital content verification, introducing a novel approach called "temporal watermark propagation." This technique allows the system to watermark videos efficiently by processing only select frames and propagating the watermark signal to neighboring frames, dramatically reducing computational requirements while maintaining effectiveness.
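To make the idea concrete, here is a minimal sketch of temporal propagation in Python with NumPy. It is not Meta's implementation: `toy_embedder`, `propagate_watermark`, and the `step` parameter are hypothetical names, and the embedder is a random-noise stand-in for a learned network. The sketch assumes the watermark is a small additive signal computed on every `step`-th frame and reused for the frames that follow.

```python
import numpy as np


def toy_embedder(frame, message_bits):
    """Hypothetical stand-in for a learned embedder (not Video Seal's API).

    Returns a small additive signal with the same shape as the frame,
    seeded from the message so the same bits give the same signal.
    """
    seed = int("".join(str(int(b)) for b in message_bits), 2)
    rng = np.random.default_rng(seed)
    return rng.uniform(-2.0, 2.0, size=frame.shape)


def propagate_watermark(video, message_bits, step=4, embedder=toy_embedder):
    """Watermark a video of shape (T, H, W, C) with pixel values in [0, 255].

    The embedder runs only on every `step`-th frame; the signal it returns
    is reused for the following frames. Returns the watermarked video and
    the number of embedder calls.
    """
    video = np.asarray(video, dtype=np.float32)
    out = np.empty_like(video)
    signal = None
    calls = 0
    for t in range(video.shape[0]):
        if t % step == 0:
            # Key frame: compute a fresh watermark signal.
            signal = embedder(video[t], message_bits)
            calls += 1
        # Every frame receives the most recent key-frame signal.
        out[t] = np.clip(video[t] + signal, 0.0, 255.0)
    return out, calls
```

With a 10-frame clip and `step=4`, the embedder in this sketch runs on frames 0, 4, and 8, so three calls instead of ten. The saving grows with `step`, at the cost of the signal fitting the in-between frames less closely.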

One of Video Seal's most notable achievements is its robustness against common video manipulations. The system maintains high watermark detection rates even when videos undergo multiple transformations such as compression, cropping, and brightness adjustments - conditions that typically challenge existing watermarking solutions.

"Video Seal achieves higher robustness compared to strong baselines especially under challenging distortions combining geometric transformations and video compression," the research team notes in their paper.

The system's architecture consists of two main components: an embedder that inserts the watermark and an extractor that retrieves it. These components are trained together using a sophisticated multi-stage process that includes image pre-training, hybrid post-training, and extractor fine-tuning.

What sets Video Seal apart from previous solutions is its ability to balance three critical factors:

- Speed: By watermarking only key frames and propagating the signal, Video Seal processes videos significantly faster than frame-by-frame approaches

- Imperceptibility: The watermarks remain virtually invisible to viewers while being detectable by the system

- Robustness: The watermarks persist even after common video manipulations and compression
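Two of these factors are commonly quantified with simple metrics. The sketch below assumes imperceptibility is measured as PSNR between the original and watermarked frames, and robustness as the fraction of message bits recovered after a manipulation. The function names are illustrative and are not taken from the Video Seal codebase.

```python
import numpy as np


def psnr(original, watermarked, peak=255.0):
    """Peak signal-to-noise ratio in dB; higher means a less visible change."""
    diff = np.asarray(original, dtype=np.float64) - np.asarray(watermarked, dtype=np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical inputs
    return float(10.0 * np.log10(peak ** 2 / mse))


def bit_accuracy(sent_bits, decoded_bits):
    """Fraction of message bits that the extractor recovered correctly."""
    sent = np.asarray(sent_bits)
    decoded = np.asarray(decoded_bits)
    return float(np.mean(sent == decoded))
```

For example, `bit_accuracy([1, 0, 1, 1], [1, 0, 0, 1])` returns `0.75`, since three of the four bits survive.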

Meta's decision to open-source Video Seal, including its codebase, models, and public demo, reflects a commitment to fostering collaboration in addressing content authenticity challenges. This move could accelerate the development and adoption of video watermarking technologies across the industry.

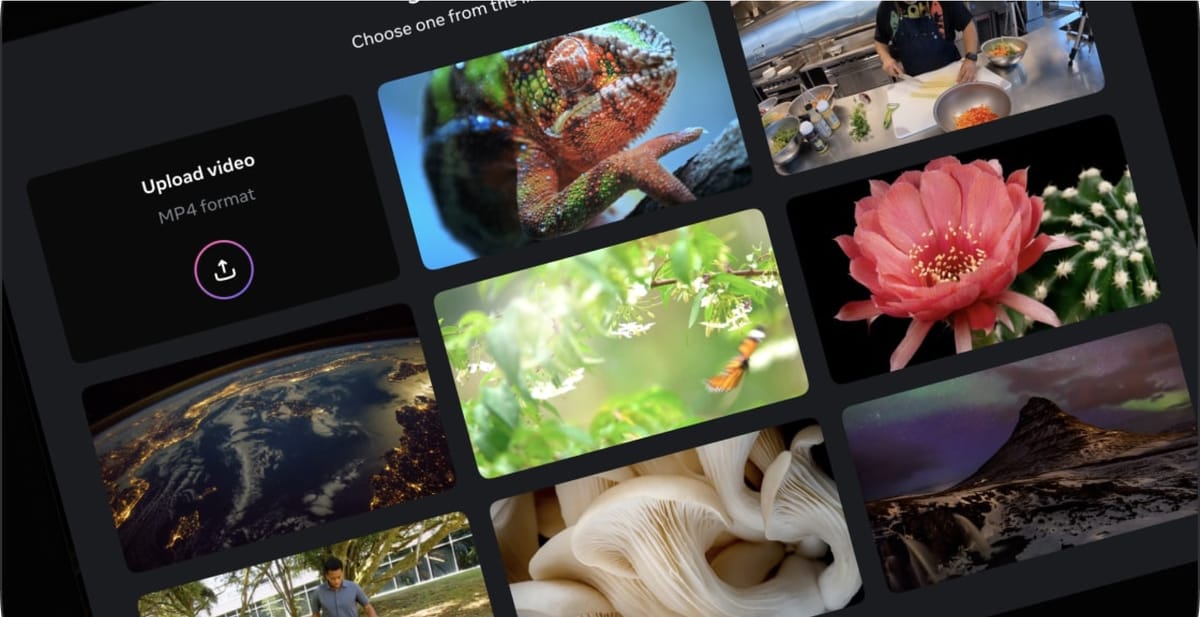

What's being released: Meta has made Video Seal available under a permissive MIT license. The GitHub repository includes pre-trained models ready for implementation, complete training code for customizing solutions, inference code for deployment, and comprehensive evaluation tools. The release also provides baseline implementations of leading image watermarking models (MBRS, CIN, TrustMark, and WAM) adapted for video. The company has also released a web demo that you can try yourself.

Why it matters: As AI-generated content becomes increasingly sophisticated and prevalent, robust watermarking solutions like Video Seal will play a crucial role in content verification and digital rights management. The technology's efficiency and reliability could make it particularly valuable for social media platforms and content creators dealing with large volumes of video content.

Looking ahead: While Video Seal represents a significant advance in video watermarking technology, the researchers acknowledge several areas for future improvement, including enhancing visual consistency across watermarked frames and increasing payload capacity. The open-source nature of the project means these challenges could be addressed through collaborative efforts within the broader research community.