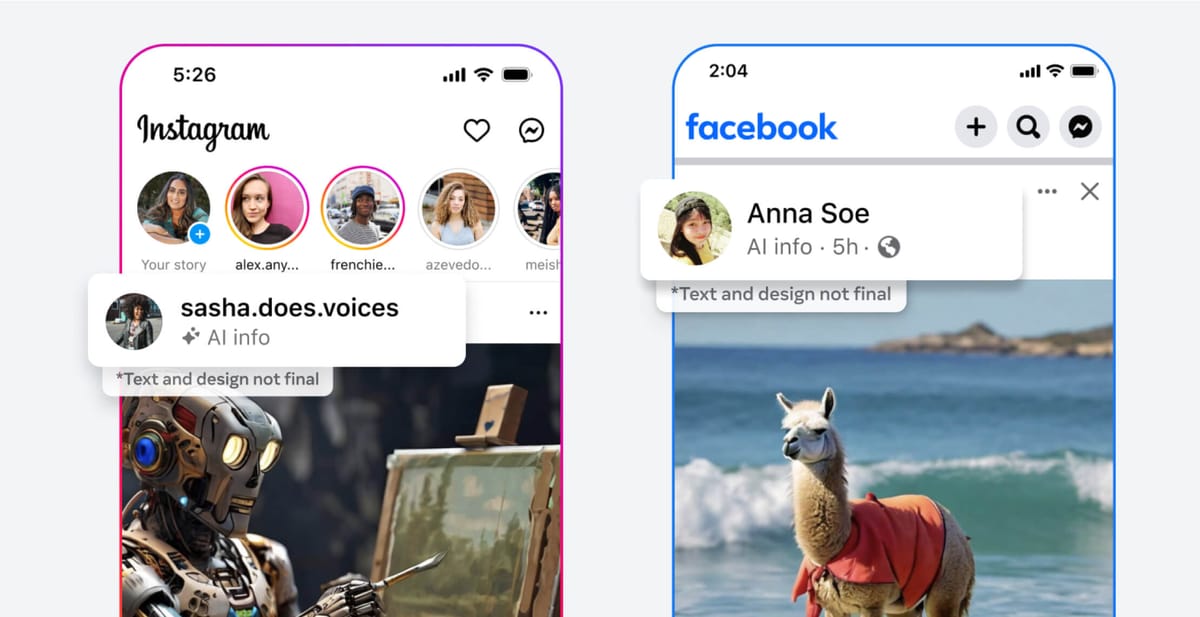

Meta announced today that it will begin labeling AI-generated images posted by users across Facebook, Instagram and Threads. The decision comes as deepfakes and hyper-realistic synthetic media become more commonplace online, raising major privacy, transparency and safety concerns.

Nick Clegg, President of Global Affairs at Meta, detailed the initiative, highlighting that the company is collaborating with industry partners to establish common technical standards for identifying AI content, encompassing video and audio as well. Over the next few months, Meta will start labeling images that are detected to be AI-generated based on these industry standards.

This initiative is not entirely new for Meta, which has labeled photorealistic images created with its own Meta AI feature since that feature launched. The aim is to extend this transparency to content created with other companies' tools, so that users can distinguish human-made content from synthetic content.

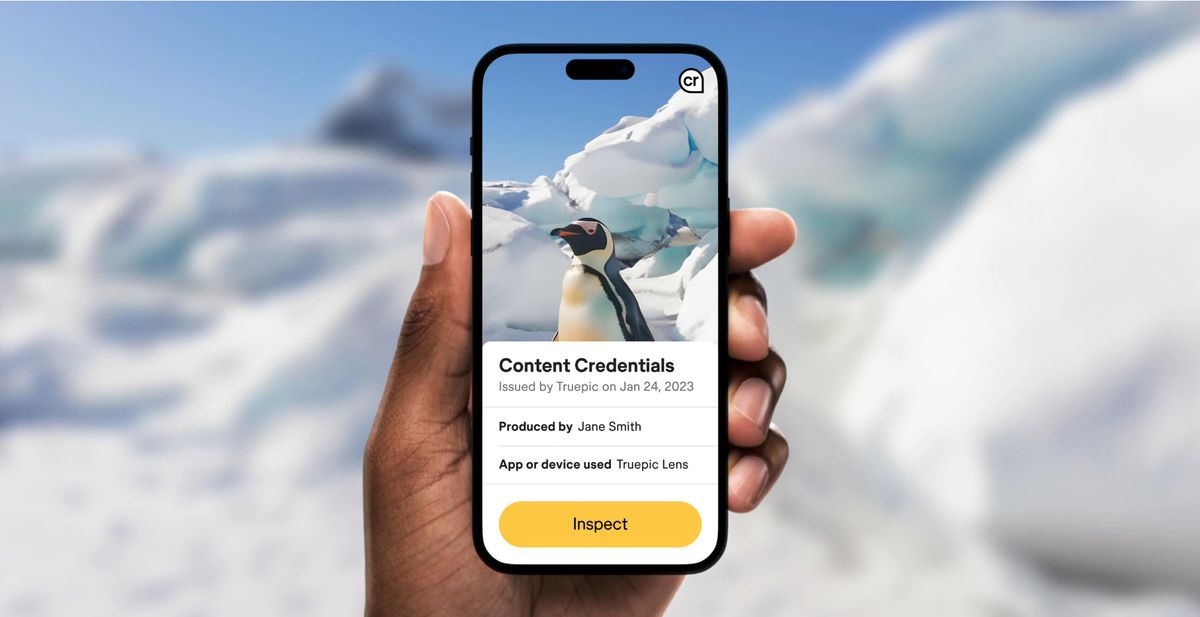

Clegg described the detection effort in the announcement: “We’re building industry-leading tools that can identify invisible markers at scale – specifically, the ‘AI generated’ information in the C2PA and IPTC technical standards – so we can label images from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock as they implement their plans for adding metadata to images created by their tools.”
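To make the metadata approach more concrete, here is a minimal Python sketch of what checking for an IPTC "AI generated" marker could look like. It is not Meta's detection pipeline, whose details have not been published. The function name `has_ai_source_marker` and the regex-based scan are illustrative assumptions. The two URIs are terms from the IPTC Digital Source Type vocabulary, which image tools can embed in a file's XMP metadata. The sketch does not parse or validate C2PA manifests, which are cryptographically signed and need a dedicated library.

```python
import re

# Terms from the IPTC Digital Source Type vocabulary that denote media
# created entirely by a trained model, or composited with such media.
AI_SOURCE_TYPES = (
    b"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia",
    b"http://cv.iptc.org/newscodes/digitalsourcetype/compositeWithTrainedAlgorithmicMedia",
)

# XMP metadata is embedded in image files as an XML packet wrapped in
# <x:xmpmeta> ... </x:xmpmeta>. Assumption: a plain byte scan is enough for
# illustration; a production tool would use a real XMP/XML parser.
XMP_PACKET = re.compile(rb"<x:xmpmeta.*?</x:xmpmeta>", re.DOTALL)


def has_ai_source_marker(image_bytes: bytes) -> bool:
    """Return True if any embedded XMP packet declares an AI source type."""
    for packet in XMP_PACKET.findall(image_bytes):
        if any(uri in packet for uri in AI_SOURCE_TYPES):
            return True
    return False


# Hypothetical example: a stand-in for image bytes containing an XMP packet.
sample = (
    b"\xff\xd8"
    b'<x:xmpmeta xmlns:x="adobe:ns:meta/">'
    b'<rdf:Description Iptc4xmpExt:DigitalSourceType='
    b'"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"/>'
    b"</x:xmpmeta>"
    b"\xff\xd9"
)
print(has_ai_source_marker(sample))  # True
```

A check like this only works while the metadata is intact. Re-encoding or screenshotting an image typically discards it, which is why metadata alone is not treated as sufficient.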

The company is also preparing for cases where invisible markers are removed from AI-generated content to evade detection. It is developing countermeasures such as its Stable Signature technology, which integrates invisible watermarking directly into the image generation process so that the watermark is harder to disable.

Meta's labeling initiative comes at a crucial time, with important elections around the world on the horizon. The company aims to learn more about the creation and sharing of AI content, adjusting its strategies based on user feedback and technological advancements.

However, Meta acknowledges the challenges ahead, recognizing that not all AI-generated content can currently be identified, especially as adversaries seek ways to circumvent safeguards. Thus, the company encourages users to exercise judgment, considering the trustworthiness of the content source and looking for unnatural details.

Beyond labeling, Meta continues to leverage AI in enforcing its Community Standards, demonstrating AI's potential to both generate content and safeguard the platform. The use of AI has significantly reduced the prevalence of hate speech on Facebook, and generative AI tools are being explored for their potential to further enhance policy enforcement.

As AI-generated content becomes more commonplace, debates over whether and how to identify and verify content will likely intensify. Meta's current steps represent a proactive approach to these challenges, emphasizing the need for industry collaboration and regulatory dialogue.