Meta has announced that it is updating its approach to how it handles AI-generated content across its platforms. The move comes in response to recommendations from the Oversight Board and the company's policy review process, which included public opinion surveys and expert consultations.

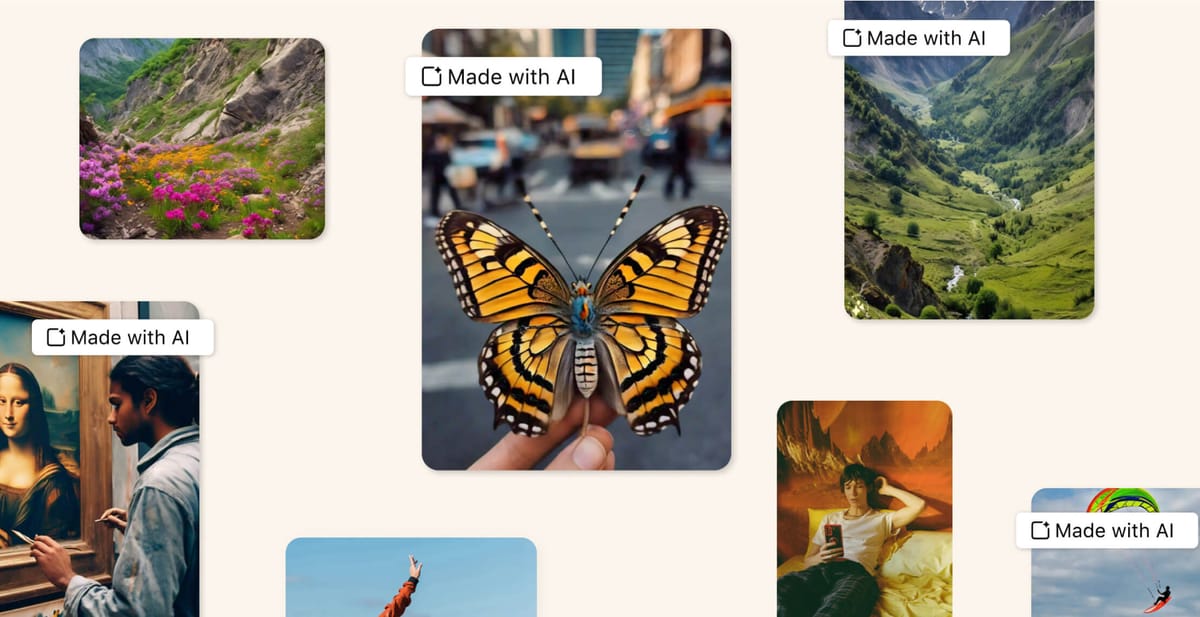

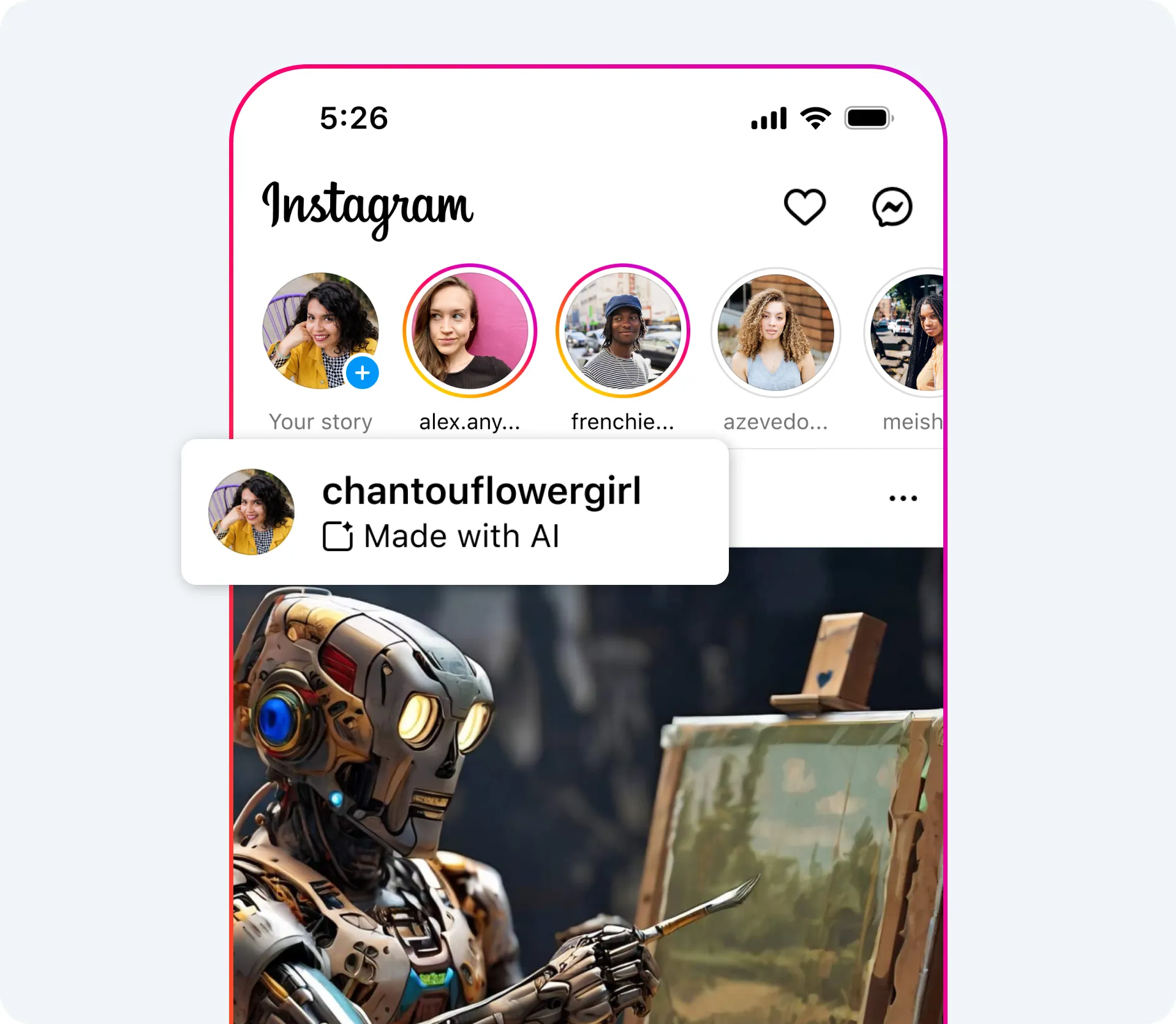

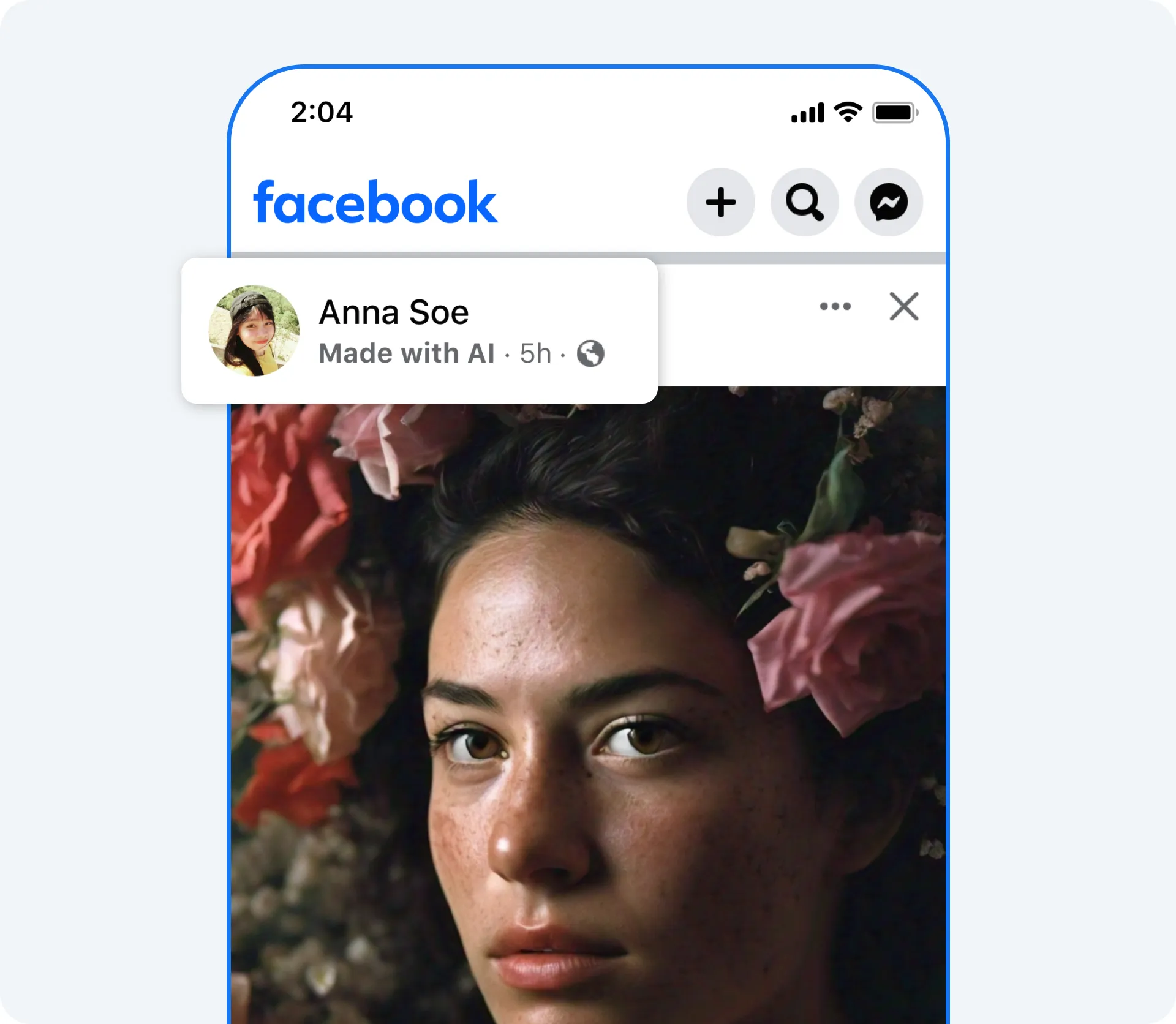

Starting in May, Meta will begin labeling a wider range of video, audio, and image content as "Made with AI" when the company detects industry-standard AI image indicators or when users disclose that they are uploading AI-generated content. The new labels will cover a broader range of content than the company's current manipulated media policy, which was established in 2020 and covers only videos altered by AI to make a person appear to say something they did not say.

This move is in line with the Oversight Board's recommendation to address manipulated media through transparency and additional context rather than removal. According to Monika Bickert, Vice President of Content Policy at Meta, the company agrees that this approach avoids the risk of unnecessarily restricting freedom of speech, and it will keep such content on its platforms so that labels and context can be added.

Meta's policy review process included consultations with over 120 stakeholders in 34 countries, who broadly supported labeling AI-generated content and strongly supported more prominent labels in high-risk scenarios. The company also conducted public opinion research with more than 23,000 respondents in 13 countries, which found that 82% favor warning labels for AI-generated content that depicts people saying things they did not say.

Meta's existing approach to manipulated media only covers videos that are created or altered by AI to make a person appear to say something they didn't say. However, the company acknowledges that the landscape has changed since the policy was written in 2020. Realistic AI-generated content, such as audio and photos, has become more prevalent, and the technology is rapidly evolving.

Under the new policy, Meta will keep AI-generated content on its platforms and add informational labels and context, unless the content violates other policies outlined in the company's Community Standards. The company's network of nearly 100 independent fact-checkers will continue to review false and misleading AI-generated content. Content they rate as False or Altered will be shown lower in Feeds and overlaid with additional information.

Meta plans to start labeling AI-generated content in May 2024 and will stop removing content solely on the basis of its manipulated video policy in July. This timeline gives people time to understand the self-disclosure process before the company stops removing the smaller subset of manipulated media. The company aims to continue collaborating with industry peers and engaging in dialogue with governments and civil society as AI technology progresses.