Today, Meta shared its latest research on CM3leon (pronounced “chameleon”), a transformer-based model that achieves state-of-the-art results on text-to-image generation and shows new capabilities for multimodal AI. CM3leon marks the first time an autoregressive model has matched the performance of leading generative diffusion models on key benchmarks.

In recent years, generative AI models capable of creating images from text prompts have progressed rapidly. Models like Midjourney, DALL-E 2 and Stable Diffusion can conjure photorealistic scenes and portraits from short text descriptions. These models use a technique called diffusion: starting from an image composed entirely of noise, the model removes a little noise at each step, gradually bringing the image closer to the desired target. While diffusion-based methods yield impressive results, they are computationally intensive, which makes them expensive to run and often too slow for real-time applications.
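The iterative denoising idea can be illustrated with a toy loop. This is only a sketch under a strong assumption: a real diffusion model uses a learned network to estimate the noise, whereas here a hypothetical oracle that already knows the target plays that role. The function name `toy_denoise` and its parameters are made up for illustration.

```python
import random


def toy_denoise(target, steps=50, seed=0):
    """Toy stand-in for diffusion sampling: start from noise, step toward target.

    Assumption: the "noise estimate" below comes from an oracle that knows
    `target`. A real model would predict it with a neural network.
    """
    rng = random.Random(seed)
    # The starting "image" is pure Gaussian noise.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for step in range(steps):
        remaining = steps - step
        # Oracle estimate of the noise still present in the sample.
        predicted_noise = [xi - ti for xi, ti in zip(x, target)]
        # Remove a fraction of that noise; the last step removes what is left.
        x = [xi - ni / remaining for xi, ni in zip(x, predicted_noise)]
    return x
```

Each pass through the loop corresponds to one full evaluation of the denoising network in a real system, which is why sampling cost grows with the number of steps.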

CM3leon takes a different approach. As a transformer-based model, it uses attention mechanisms to weigh the relevance of each part of its input, whether text or images. This architectural distinction allows CM3leon to achieve faster training speeds and better parallelization, making it more efficient than traditional diffusion-based methods.
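To make "weighing the relevance of input data" concrete, here is a minimal sketch of standard scaled dot-product attention in plain Python. It shows the general mechanism used by transformers, not CM3leon's actual implementation; the function names and the tiny example vectors are assumptions for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Relevance of every key to this query, scaled by sqrt(dimension).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys
        ]
        weights = softmax(scores)
        # Output is the relevance-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs
```

Because each query is processed independently of the others, the loop over `queries` can run in parallel, which is one reason transformers parallelize well during training.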

CM3leon was trained efficiently on a dataset of licensed images using just a single TPU pod, and reaches a zero-shot FID score of 4.88 on the MS-COCO dataset (lower is better). Meta researchers say the model is over 5x more efficient than comparable transformer architectures.
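For readers unfamiliar with the metric, FID (Fréchet Inception Distance) compares the statistics of features extracted from real and generated images by a pretrained Inception network. The expression below is the general definition of the metric, not anything specific to CM3leon. Here $\mu_r, \Sigma_r$ denote the mean and covariance of the features of real images and $\mu_g, \Sigma_g$ those of generated images.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
```

A score of 0 would mean the two feature distributions have identical means and covariances, so lower values indicate generated images that are statistically closer to real ones.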

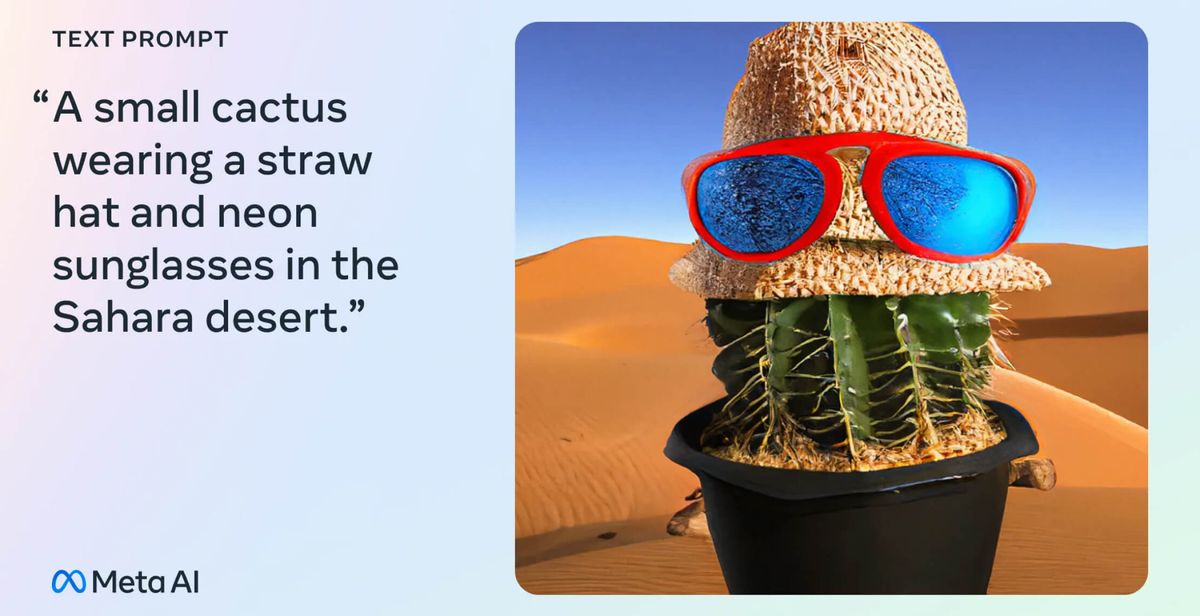

But raw performance metrics don't tell the full story. Where CM3leon truly shines is in handling more complex prompts and image editing tasks. For example, CM3leon can accurately render an image from a prompt like "A small cactus wearing a straw hat and neon sunglasses in the Sahara desert."

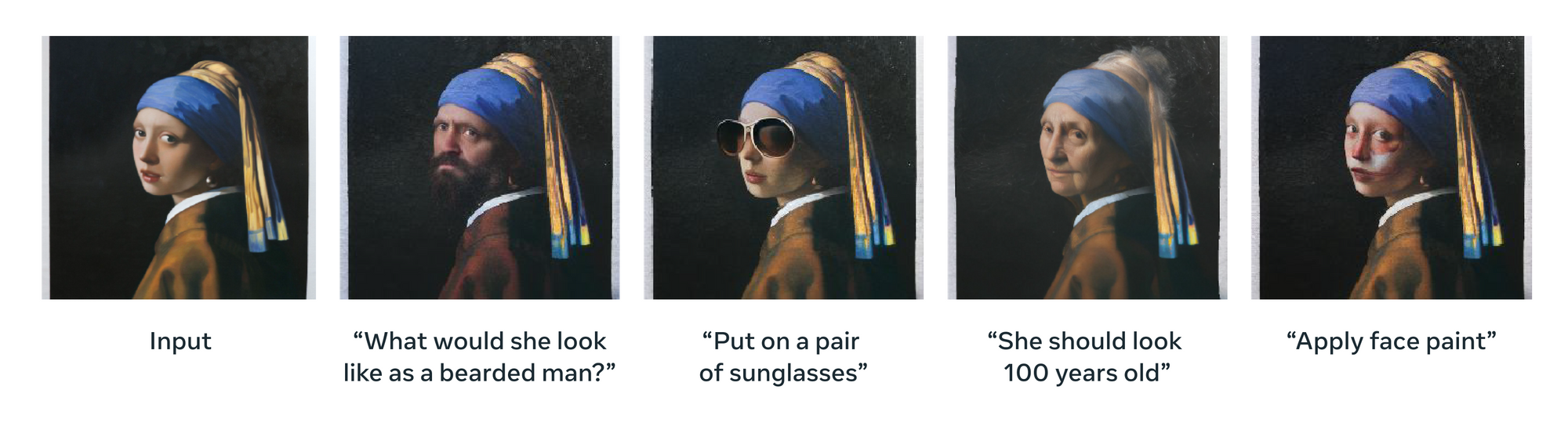

The model also excels at making edits to existing images based on free-form text instructions, like changing the sky color or adding objects in specific locations. These capabilities far surpass what leading models like DALL-E 2 can currently achieve.

Text-Guided Image Editing

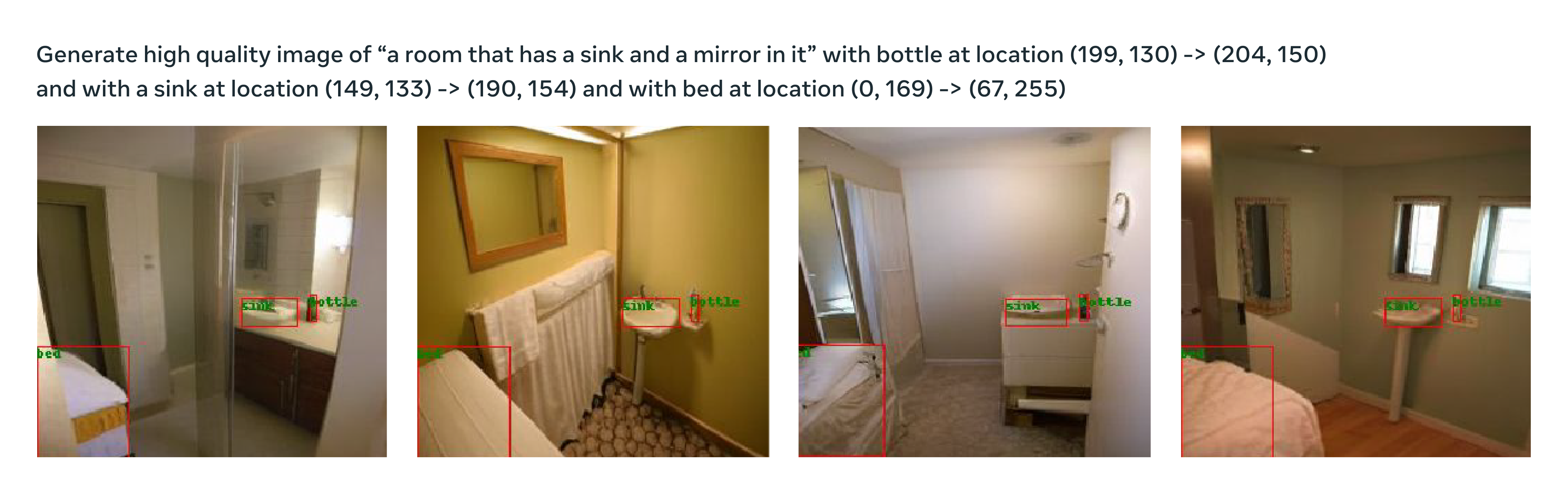

CM3leon's versatile architecture allows it to move fluidly between text, images, and compositional tasks. Beyond text-to-image generation, CM3leon can generate captions for images, answer questions about image content, and even create images based on textual descriptions of bounding boxes and segmentation maps. This combination of modalities into a single model is unprecedented among publicly revealed AI systems.

Object-to-image

Given a text description of an image's bounding boxes and segmentation, CM3leon can generate the corresponding image.

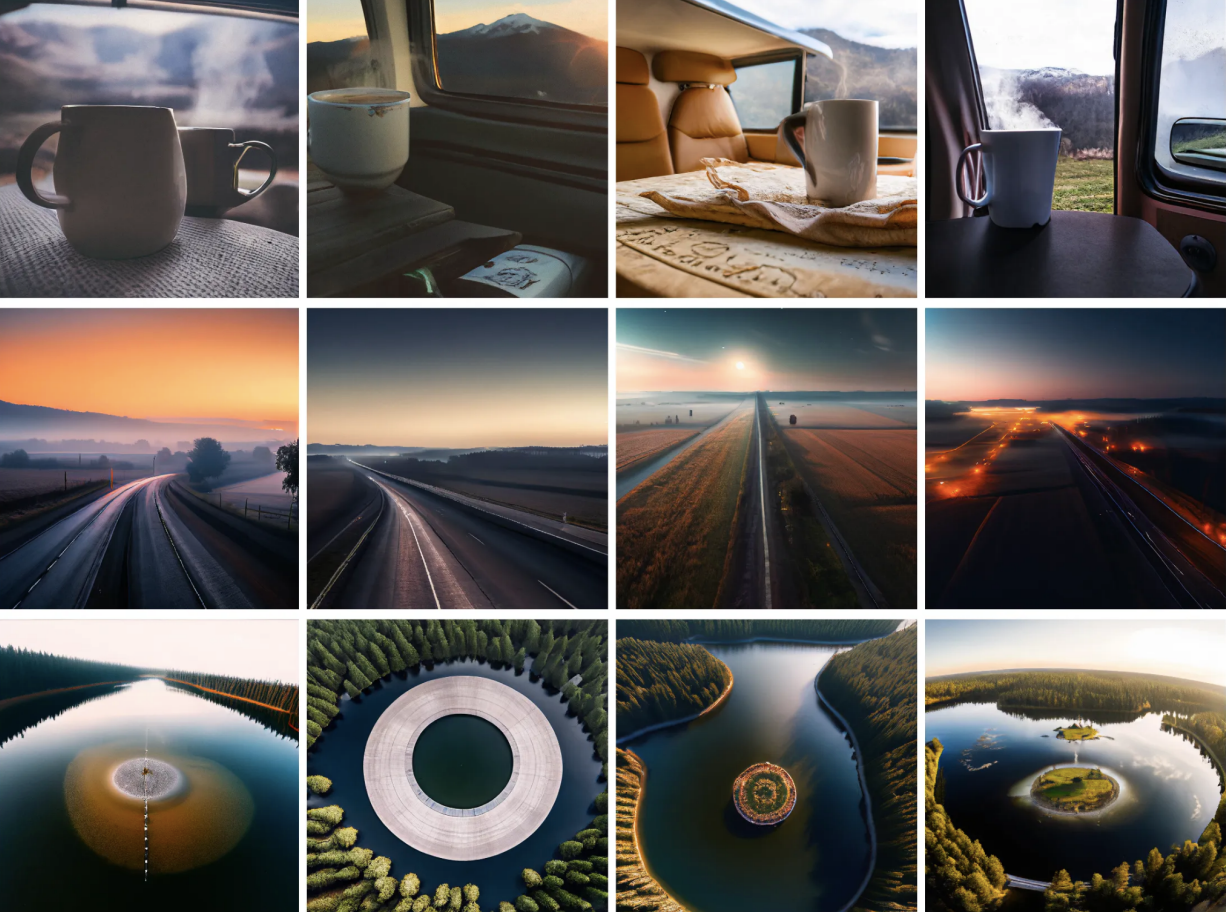

Super-resolution results

A separate super-resolution stage can be applied to CM3leon's output to significantly improve resolution and detail. Below are four example images for each of the prompts: (1) A steaming cup of coffee with mountains in the background. Resting during road trip. (2) Beautiful, majestic road during sunset. Aesthetic. (3) Small circular island in the middle of a lake. Forests surrounding the lake. High Contrast.

CM3leon's success can be attributed to its unique architecture and training methods. The model employs a decoder-only transformer architecture, similar to established text-based models, but with the added capability of handling both text and images. Training involves retrieval augmentation, building upon recent work in the field, and instruction fine-tuning across various image and text generation tasks.
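The combination of retrieval augmentation and decoder-only generation can be sketched roughly as follows. This is an illustrative toy, not Meta's pipeline: the memory layout, the cosine-similarity retriever, the `next_token` callback, and the token strings are all hypothetical stand-ins for a real retriever and a trained model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, memory, k=2):
    """Return token sequences of the k memory entries most similar to the query."""
    ranked = sorted(
        memory, key=lambda entry: cosine(query_vec, entry["vec"]), reverse=True
    )
    return [entry["tokens"] for entry in ranked[:k]]


def generate(next_token, context, max_new_tokens, eos="<eos>"):
    """Autoregressive decoding: each token is conditioned on all earlier ones.

    `next_token` is a hypothetical callback standing in for the trained model.
    """
    sequence = list(context)
    for _ in range(max_new_tokens):
        token = next_token(sequence)
        sequence.append(token)
        if token == eos:
            break
    return sequence
```

In use, the retrieved sequences would be placed in front of the prompt to form the context, and `generate` would then emit text or image tokens one at a time from the same vocabulary. Treating both modalities as tokens in one sequence is what lets a single decoder-only model handle captioning, editing, and image generation.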

By applying a technique called supervised fine-tuning across modalities, Meta was able to significantly boost CM3leon's performance at image captioning, visual QA, and text-based editing. Despite being trained on just 3 billion text tokens, CM3leon matches or exceeds the results of other models trained on up to 100 billion tokens.

Meta has yet to announce plans to release CM3leon publicly. But the model sets a new bar for multimodal AI and shows the power of techniques like retrieval augmentation and supervised fine-tuning. It's a remarkable achievement that points to a future where AI systems can move smoothly between understanding, editing, and generating across images, video, and text.