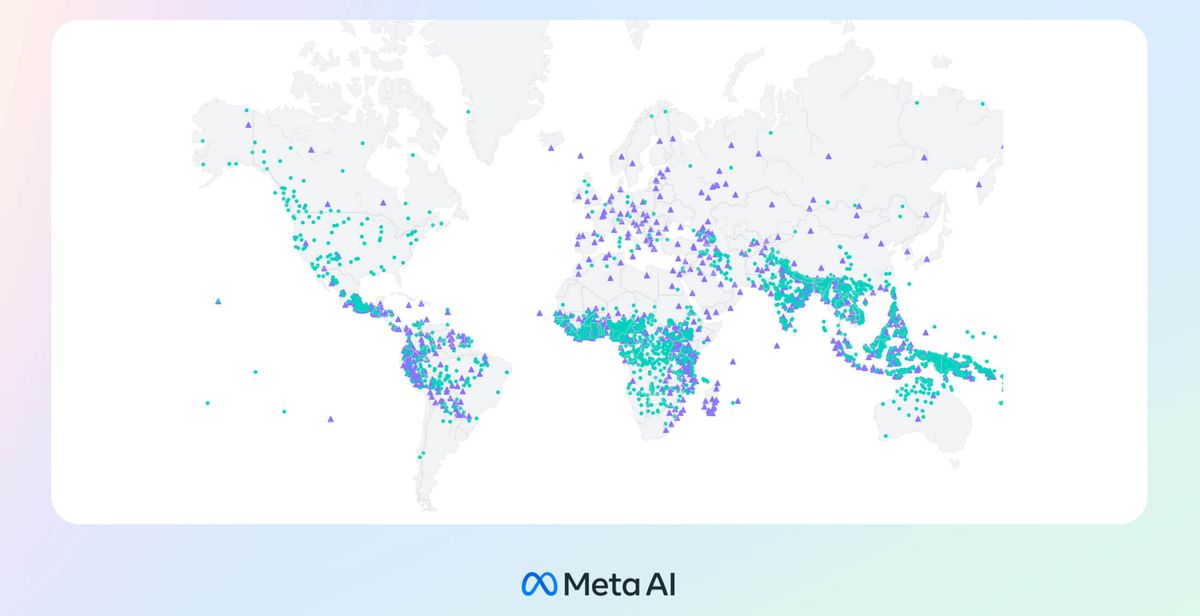

Meta AI today released and open-sourced the Massively Multilingual Speech (MMS) project, which offers speech-to-text and text-to-speech models for an unprecedented 1,107 languages and language identification for more than 4,000 languages. That is a dramatic leap from the roughly 100 languages supported by most current speech recognition models.

The MMS dataset includes more than 500,000 hours of speech in over 1,400 languages, some of which have only a few hundred speakers. Pretraining models on this dataset lets MMS perform well even when little labeled data exists for a given language, which overcomes a key obstacle to building speech technology for small languages. Meta says MMS is part of its goal of developing AI for "the next billion people" and of using technology to protect marginalized groups. The models and data could help build educational tools, improve access to information, and generate synthetic speech where real speech data is scarce. According to the company, MMS shows how AI can be developed for empowerment when resources are combined with open sharing.

Comparing MMS with existing models such as OpenAI's Whisper (which, like MMS, is openly released rather than proprietary) highlights its effectiveness: according to Meta, MMS achieves half the word error rate of Whisper while covering 11 times as many languages. Results like these underscore Meta's growing prominence in the AI sector.
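Word error rate (WER), the metric behind this comparison, is the minimum number of word substitutions, deletions, and insertions needed to turn a model's transcript into the reference transcript, divided by the number of words in the reference. The Python sketch below computes it with a standard edit-distance table. The function name `word_error_rate` and the example sentences are illustrative assumptions, not taken from Meta's evaluation code.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j] = fewest edits turning the first i reference words
    # into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            mismatch = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,             # deletion
                dist[i][j - 1] + 1,             # insertion
                dist[i - 1][j - 1] + mismatch,  # substitution (or match)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


if __name__ == "__main__":
    # One substitution (sat -> sit) and one deletion (the) over 6 reference words.
    print(round(word_error_rate("the cat sat on the mat", "the cat sit on mat"), 3))  # 0.333
```

In the example, two errors over a six-word reference give a WER of about 0.33. Halving WER, as Meta reports for MMS against Whisper, means roughly half as many word-level errors per reference word.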

Meta's approach to data collection is undeniably ingenious, yet it does raise a few eyebrows. To get around the scarcity of data in less widely spoken languages, the MMS project used religious texts, notably the Bible, which have been translated into many languages worldwide. Recordings of people reading these translations aloud were used to build a dataset covering more than 1,100 languages, with an average of 32 hours of data per language.

Adding unlabeled recordings of other Christian religious readings expanded coverage to more than 4,000 languages. Although the data comes from a single religious domain and is dominated by male voices, Meta asserts that its models perform equally well for male and female speakers and do not overproduce religious language.

However, it is worth asking whether the choice of religious texts could introduce bias or limitations into the system. Could relying on a single domain, and one as loaded as religion, limit the breadth of vocabulary and style the models are exposed to? Could the predominance of male speakers skew speech patterns or pronunciation in languages where gender influences these features?

Meta says that its use of Connectionist Temporal Classification (CTC), which it describes as far more constrained than large language models or sequence-to-sequence models, minimizes the risk of religious bias in the output. Still, these questions deserve more detailed exploration. As the MMS project develops, it will be interesting to see how Meta addresses these considerations to ensure the broadest and most inclusive application of its technology.
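Meta's argument rests on how CTC turns audio into text. A CTC model scores every short audio frame against a fixed set of output symbols plus a special "blank", and decoding collapses those per-frame choices into a transcript, so every emitted character must be backed by at least one frame of audio. The Python sketch below shows plain greedy CTC decoding under simple assumptions (blank at index 0, frame scores already computed, a hypothetical five-symbol toy vocabulary). It illustrates the general technique only, not MMS's actual decoder, which may add beam search or a language model.

```python
from typing import List, Optional, Sequence

BLANK = 0  # assumption: index 0 is the CTC blank symbol


def ctc_greedy_decode(
    frame_scores: Sequence[Sequence[float]],
    vocab: Sequence[str],
    blank: int = BLANK,
) -> str:
    """Greedy CTC decoding: best label per frame, merge repeats, drop blanks."""
    # 1. For each audio frame, keep the highest-scoring label index.
    best = [max(range(len(scores)), key=lambda k: scores[k]) for scores in frame_scores]

    # 2. Merge consecutive duplicates and 3. remove blanks.
    out: List[str] = []
    prev: Optional[int] = None
    for label in best:
        if label != prev and label != blank:
            out.append(vocab[label])
        prev = label
    return "".join(out)


if __name__ == "__main__":
    vocab = ["_", "h", "e", "l", "o"]  # hypothetical toy vocabulary; "_" is blank

    def one_hot(index: int) -> List[float]:
        return [1.0 if k == index else 0.0 for k in range(len(vocab))]

    # Per-frame winners: h h e _ l l _ l o
    frames = [one_hot(i) for i in [1, 1, 2, 0, 3, 3, 0, 3, 4]]
    print(ctc_greedy_decode(frames, vocab))  # hello
```

The blank between the two runs of "l" is what lets the decoder emit a doubled letter. Because each frame's choice here does not depend on previously generated words, a plain CTC decoder has no built-in tendency to continue text in a familiar style, which is the intuition behind Meta's claim. Whether that fully rules out domain bias learned by the acoustic model is exactly the open question.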

Meta has aimed to advance AI for underserved communities worldwide, with a focus on creating shared datasets and models. Open-sourcing MMS makes the models and data freely available to researchers and companies, who can build on Meta's work. MMS is not Meta's first contribution to the open-source AI community; the company has been steadily building a reputation for its commitment to open source. The project follows a line of impressive, freely available AI models from Meta, including ImageBind, DINOv2, and the Segment Anything Model (SAM).

Yet Meta's decision to release its models and code under a CC-BY-NC 4.0 license has caused some disquiet in the open-source community. This license allows others to build on the work but prohibits commercial use without express permission.

Critics argue that this move falls short of the spirit of open source, a philosophy grounded in unrestricted sharing and collaboration. They contend that while Meta benefits from freely available research in the open-source community, the non-commercial clause in its license prevents others from using its contributions to their full potential in commercial applications.

Companies like Meta face a delicate balancing act between contributing to the open-source community and protecting their investment. As the AI landscape evolves, the debate over licensing, and over how best to cultivate a genuinely symbiotic relationship between corporations and the open-source community, will remain a significant one.

Meta's evolution from a social media giant into a significant player in AI reflects a keen understanding of the changing technology landscape. By investing in initiatives like MMS, the company is helping to address real-world problems while also solidifying its influence within the AI community.

By democratizing AI technology and breaking down language barriers, Meta is not just driving AI advancement; it is shaping a future in which technology serves as a universal platform for communication and collaboration. The keen observer will, of course, note that these areas of focus are also strategically aligned with the company's broader vision of creating the metaverse.