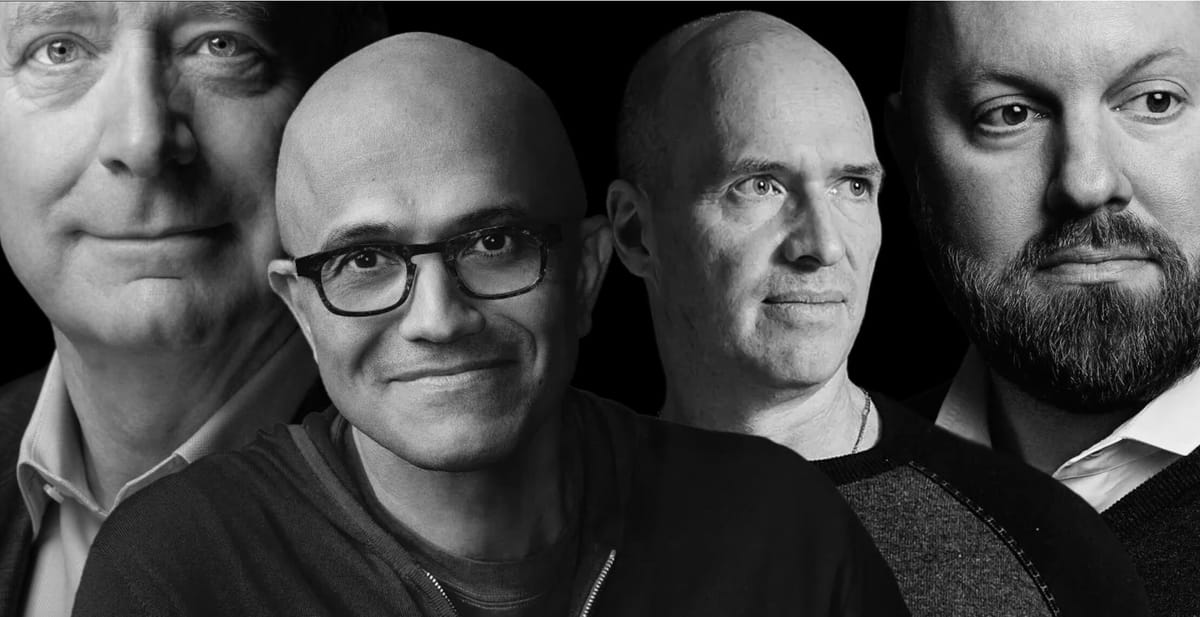

Microsoft and Andreessen Horowitz (a16z) are collaborating to propose a comprehensive public policy framework for AI development. While the collaboration between a $3 trillion tech giant and one of Silicon Valley's most influential VC firms was not on my bingo card for 2024, it underscores just how critical and urgent AI policy has quickly become.

What makes this collaboration particularly powerful is how it bridges potential divides within the tech industry. Microsoft, with its massive infrastructure investments and global reach, represents the interests of "Big Tech". a16z, with its focus on startups and entrepreneurship, brings the perspective of "Little Tech". Their unified vision suggests a path forward that can benefit both established players and newcomers.

This policy proposal arrives just days before the U.S. elections. Regardless of the outcome, a robust AI agenda will be crucial for our economy and national security. Whether we like it or not, the U.S. is in an arms race for AI supremacy, and we cannot afford to fall behind our adversaries.

Overall, I agree with the policy proposals. We do need smart, light-touch regulation that prevents misuse without stifling innovation. It should allow both proprietary and open-source models to thrive, and give startups the space they need to experiment and grow.

Three of the proposed policies struck me as particularly interesting, and I want to highlight them. The first is open data commons.

I think this is an ideal domain for government to lead in. Curating vetted, trusted datasets and making them public will not only make more data accessible; it can also ensure that diverse perspectives and voices are reflected in the training data, ultimately leading to more robust and equitable AI systems.

The second is the right to learn.

This is a contentious issue and likely the hardest one to get right. Our copyright and IP laws weren't written with AI in mind. Is AI 'learning' or is it being 'trained'? Is there a meaningful distinction, and does it matter legally or ethically? These are complex questions with valid arguments on both sides, and the outcome will impact businesses and people's lives significantly. Should we create new laws, or adapt the existing ones? It’s a thorny issue, and navigating it will require all branches of government working together thoughtfully.

The third is the proposal to improve AI literacy and help people thrive.

One of the most important things we can do as a society is to prioritize and invest in AI literacy. A more literate population means more people understanding AI's potential, opportunities, and risks—empowering individuals to make informed decisions and engage with technology responsibly.

Crafting the policy is the easy part. The challenge lies in implementation. Congress and regulatory agencies must recognize that the choice isn't between regulation and no regulation – it's between smart, innovation-enabling oversight and bureaucratic obstacles that could cede America's technological leadership to competitors.

This proposal offers a blueprint for maintaining American leadership while ensuring responsible AI development. It recognizes that in the global AI race, the U.S. must leverage its unique strengths: a culture of innovation, a strong entrepreneurial ecosystem, and collaboration between established companies and startups.

The alternative – excessive regulation or fragmented industry approaches – would only benefit our competitors. China's state-directed AI development is accelerating, particularly in areas like robotics and autonomous systems. America's response must be to unleash its innovative potential while maintaining appropriate safeguards.

If you care about the future of technology, if you care about America staying ahead, it's time to take notice. We need collaboration, smart policies, and a unified approach to ensure that AI doesn’t just advance—but makes life better for everyone.