Researchers from Microsoft Research Asia have introduced a new framework called InstructDiffusion that provides a unified interface for diverse computer vision tasks. The paper "InstructDiffusion: A Generalist Modeling Interface for Vision Tasks" aims to develop a single model capable of handling multiple vision applications simultaneously.

The key innovation in InstructDiffusion is formulating vision tasks as human-intuitive image manipulation processes. Instead of predefined output spaces like categories or coordinates, it uses a flexible pixel space as output.

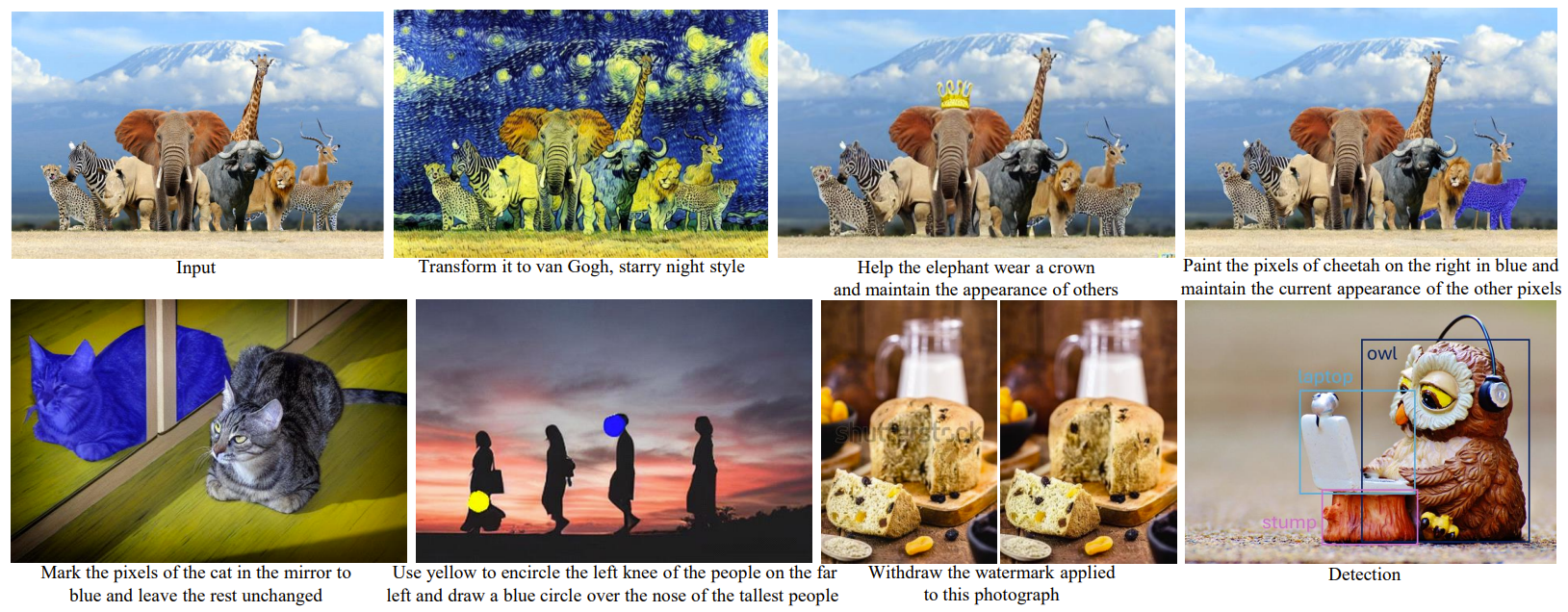

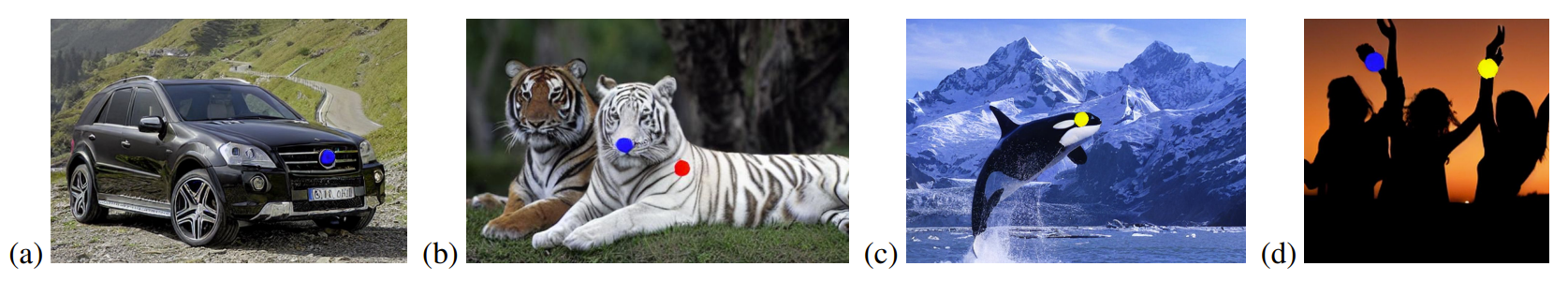

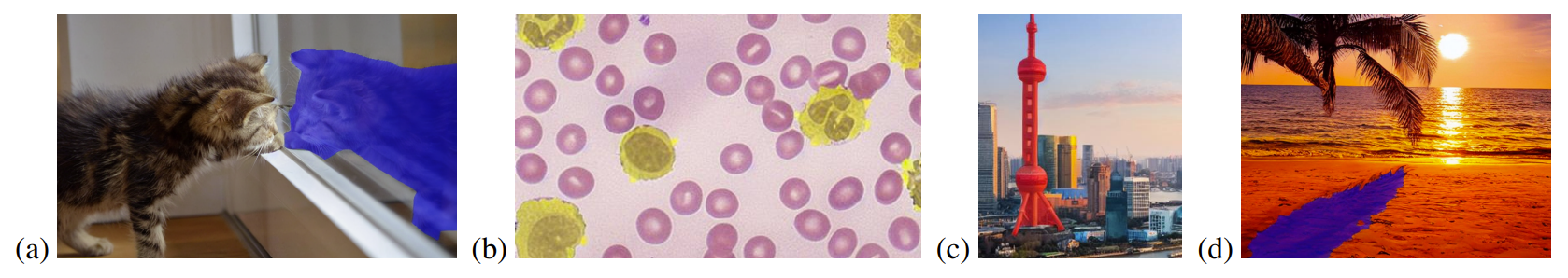

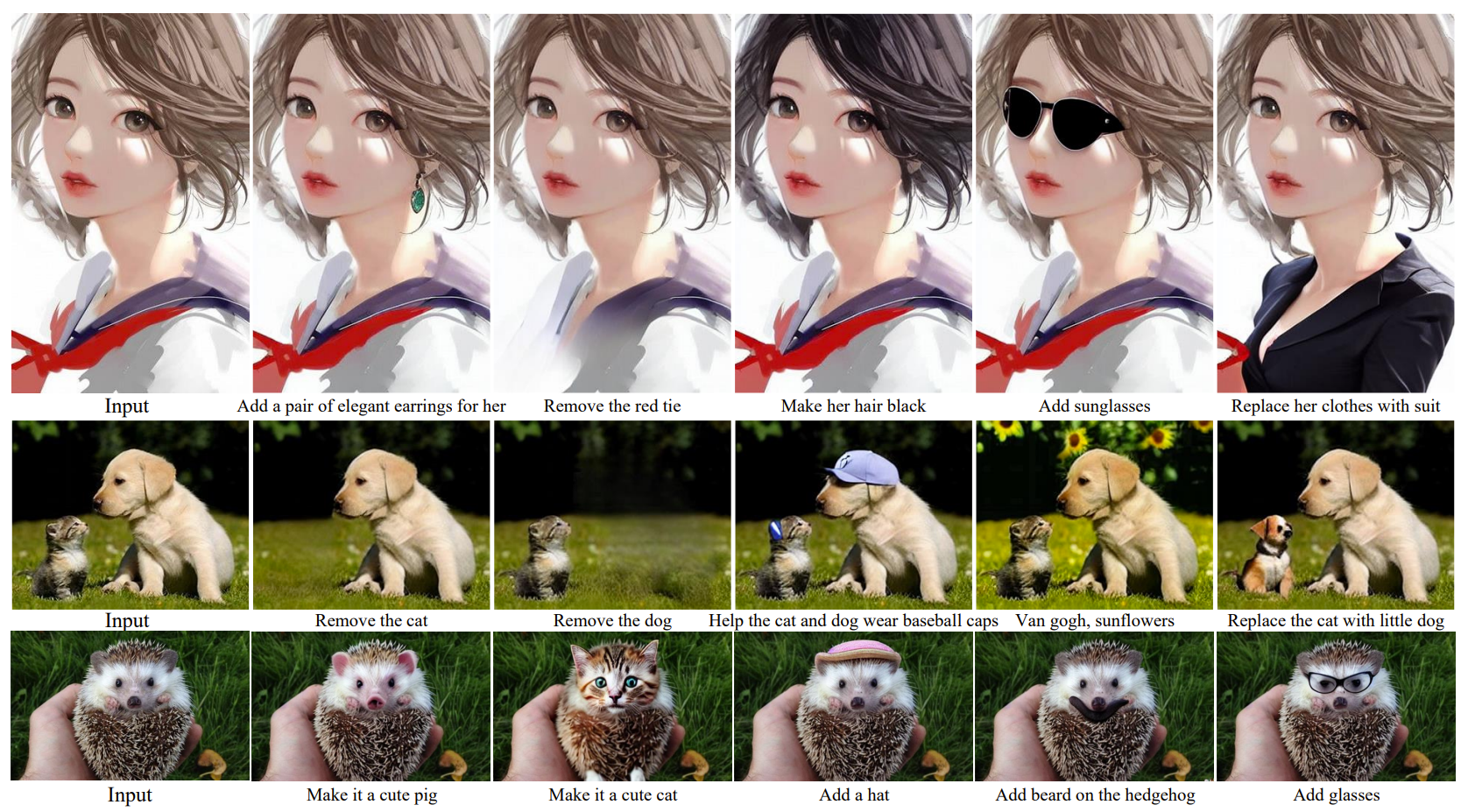

Specifically, the model is trained to modify input images according to textual instructions provided by the user. For example, an instruction could be "encircle the man's left shoulder in red" for keypoint detection or "apply a blue mask to the rightmost dog" for segmentation.

The underlying framework uses denoising diffusion probabilistic models (DDPM) to generate pixel outputs. The training data consists of triplets with an instruction, source image, and target output image.

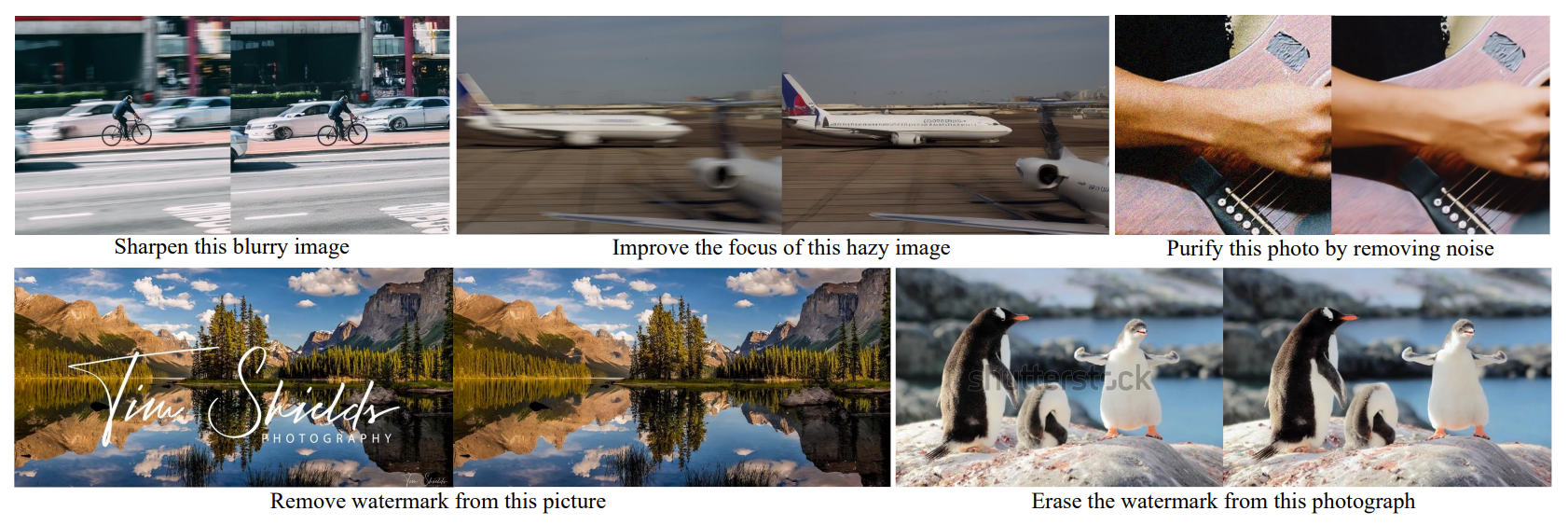

The researchers focused on three main output types - RGB images, binary masks, and keypoints. This covers common vision tasks like segmentation, keypoint detection, image editing, and enhancement.

Keypoint Detection

Segmentation

Low Level Tasks

Image Editing

Experiments demonstrate that InstructDiffusion achieves strong performance on individual tasks compared to specialized models. More importantly, the most striking feature of InstructDiffusion is its generalization capability. It can adapt to tasks it hasn't seen during training, showcasing a trait commonly associated with AGI. This is a monumental step towards a unified, flexible framework for computer vision, one that could significantly advance the entire field.

The researchers found that training the model concurrently on multiple diverse tasks substantially enhanced its ability to generalize to novel scenarios. InstructDiffusion performed significantly better on handling unseen tasks and datasets when trained in a multi-task fashion compared to models trained on any single task alone.

For instance, it showed strong performance on the HumanArt dataset and the AP-10K animal dataset for keypoint detection, despite these datasets having a distinct data distribution compared to the training data.

The researchers also observed that using highly detailed instructions was critical for the model's ability to generalize to new tasks and datasets. Simply providing the name of the task like "semantic segmentation" resulted in very poor performance, especially on novel types of data.

This highlights that the InstructDiffusion model learns not by memorizing biases and correlations for certain instructions, but by deeply understanding the specific meanings and intentions behind each element of the detailed instructions.

For example, an instruction like "encircle the cat's left ear in red" enables the model to comprehend the distinct concepts like "cat", "left ear", and "red circle". This level of granular comprehension allows the model to accurately locate key elements in new images and generalize effectively.

In contrast, broad instructions like "keypoint detection" force the model to learn biases tied to the task name which do not transfer well to new scenarios. The model essentially mimics expected outputs for those high-level instructions rather than gaining real understanding.

By training the model to follow highly detailed human-like instructions, the researchers ensured that it learns robust visual concepts and semantic meanings. This emphasis on comprehension rather than memorization is the key factor behind InstructDiffusion's impressive generalization capabilities.

InstructDiffusion represents a milestone towards developing generalist computer vision models. The ability to handle diverse tasks through intuitive instructions brings these models closer to human perception.

The proposed interface offers flexibility and interactivity lacking in most current vision systems and offers an intuitive approach for bridging the gap between human and machine understanding in computer vision. This research could accelerate the development of capable multi-purpose vision agents and the promising results demonstrate its potential to advance general visual intelligence.

To give InstructDiffusion, hop over to this handy web demo playground where you can upload a source image and write the instruction to conduct keypoint detection, referring segmentation, and image editing. Additionally, the researchers have shared the code for a pytorch implementation, the pre-trained stable diffusion model and other necessary resources required to run your own setup on GitHub.