Microsoft released its first annual Responsible AI Transparency Report, providing insights into the company's efforts to develop and deploy AI in an ethical and responsible manner. The report covers how Microsoft builds generative AI applications, makes decisions about releasing them, supports customers in building AI responsibly, and continuously learns and evolves its responsible AI practices.

Key highlights from the report include:

- 99% of Microsoft employees completed mandatory responsible AI training in 2023, and the company expanded its responsible AI team from 350 to more than 400 people.

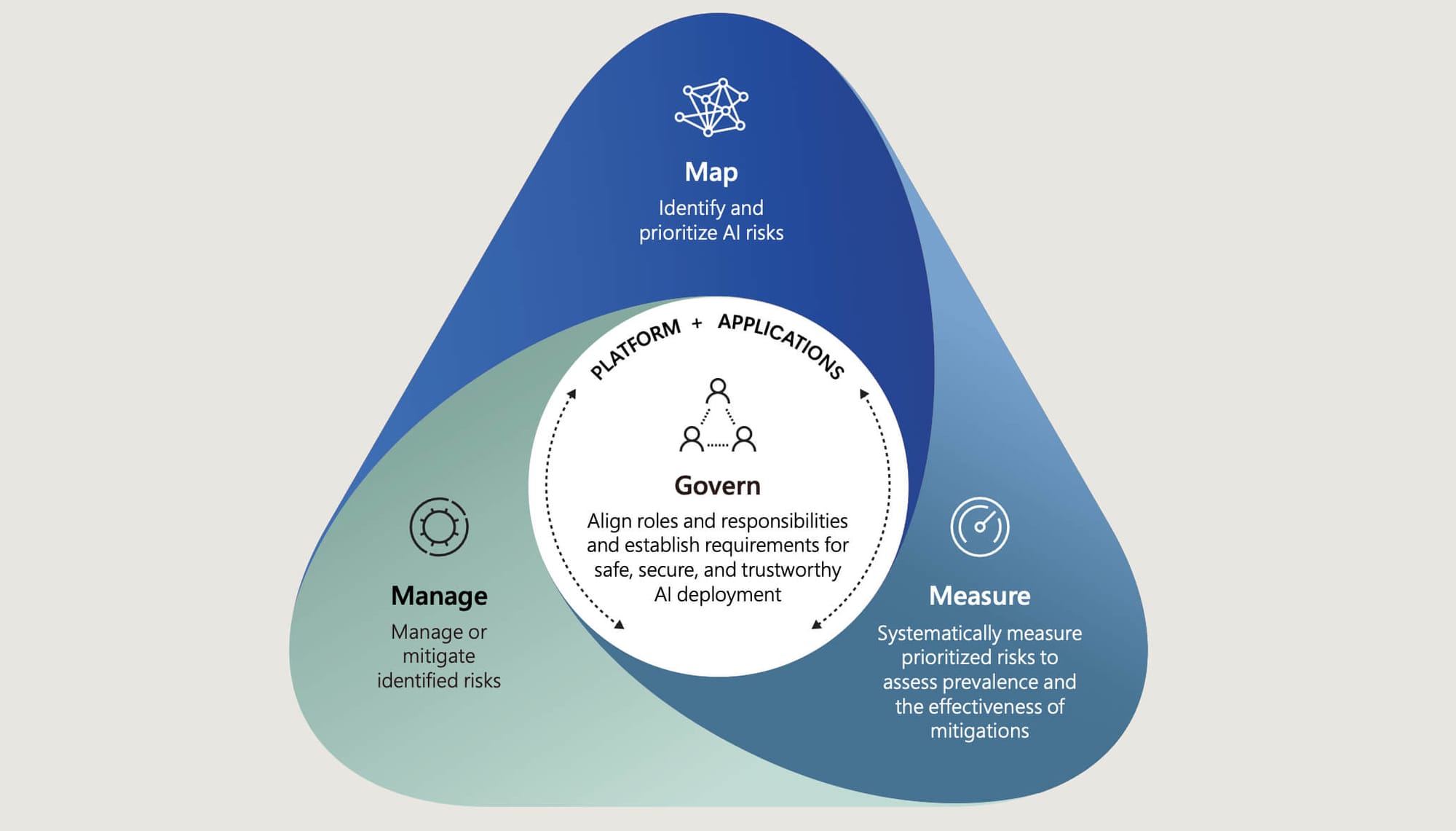

- Microsoft has implemented a comprehensive framework to map, measure, and manage risks associated with generative AI models and applications. This includes AI red teaming to uncover vulnerabilities, metrics to assess risks and mitigation effectiveness, and multiple layers of safeguards.

- Sensitive use cases of AI, such as those that could impact individual rights or cause injury, undergo additional scrutiny by Microsoft's Sensitive Uses program. Nearly 70% of cases reviewed in 2023 were related to generative AI.

- To support customers, Microsoft released over 30 responsible AI tools and features in Azure AI services. The company also made commitments to defend customers against copyright infringement claims resulting from use of its generative AI products.

- Recognizing the global impact of AI, Microsoft is engaging with stakeholders in developing countries to ensure its AI practices and governance reflect diverse perspectives. The company also co-chairs the UNESCO AI Business Council, which works to advance ethics guidelines.

The report acknowledges that responsible AI is an ongoing journey without a finish line. Key challenges include measuring and mitigating the risk of AI-generated misinformation, ensuring the safe development of highly capable "frontier" AI models, and adapting responsible AI practices to rapidly evolving technology.

To address these issues, Microsoft is investing in research on AI transparency, partnering with academic institutions to democratize access to AI resources, and advocating for government policies to ensure AI benefits society. The company also emphasizes the need for an empowered responsible AI workforce and an inclusive approach that represents global concerns.

While the report showcases significant progress, it makes clear that much work remains to realize the potential of AI technology while mitigating its risks and negative impacts. Through continued learning, partnership, and investment, Microsoft aims to be a leader in the responsible development and deployment of AI.