As AI capabilities advance rapidly, Microsoft wants to spearhead responsible development of new technologies like generative AI. After a blockbuster year of delivering AI solutions to both enterprises and consumers, the tech giant has outlined core tenets governing its approach to privacy protection in tools like Microsoft Copilot.

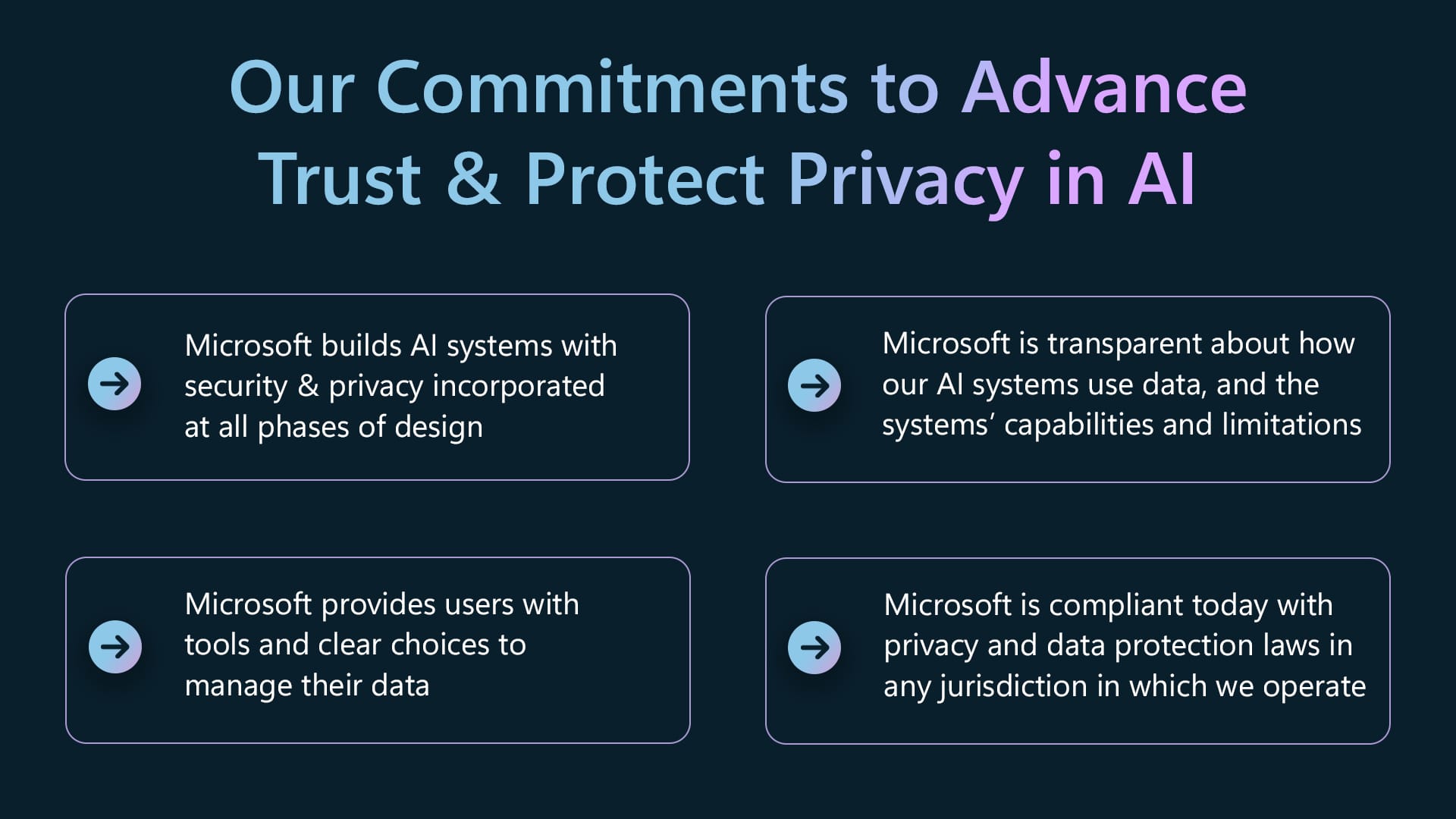

Now more than ever, it's important to keep a close eye on how foundation model providers like Microsoft are fortifying data security, enhancing transparency, expanding user controls, and aligning with evolving regulations worldwide. By integrating these priorities early in design phases, companies can build trust and mitigate risks associated with AI systems.

At the forefront of Microsoft's privacy strategy is the principle of data security. Recognizing that robust security measures are indispensable for maintaining user trust, Microsoft stresses that it has embedded comprehensive security protocols across its suite of AI products. The company points to its integration of Copilot into services such as Microsoft 365, Dynamics 365, Viva Sales, and Power Platform, each of which it says is backed by stringent security, compliance, and privacy policies.

This privacy-by-design approach is commendable and should be encouraged. It treats security not as an afterthought but as a foundational element of product development and deployment.

Transparency is another cornerstone of Microsoft's privacy approach. The company says it is committed to demystifying AI interactions so that users are informed about, and comfortable with, how AI systems operate. This commitment is evident in Microsoft Copilot, where users receive real-time information about the AI's functions, capabilities, and limitations. Such transparency not only improves user understanding and control but also strengthens trust in Microsoft's AI systems.

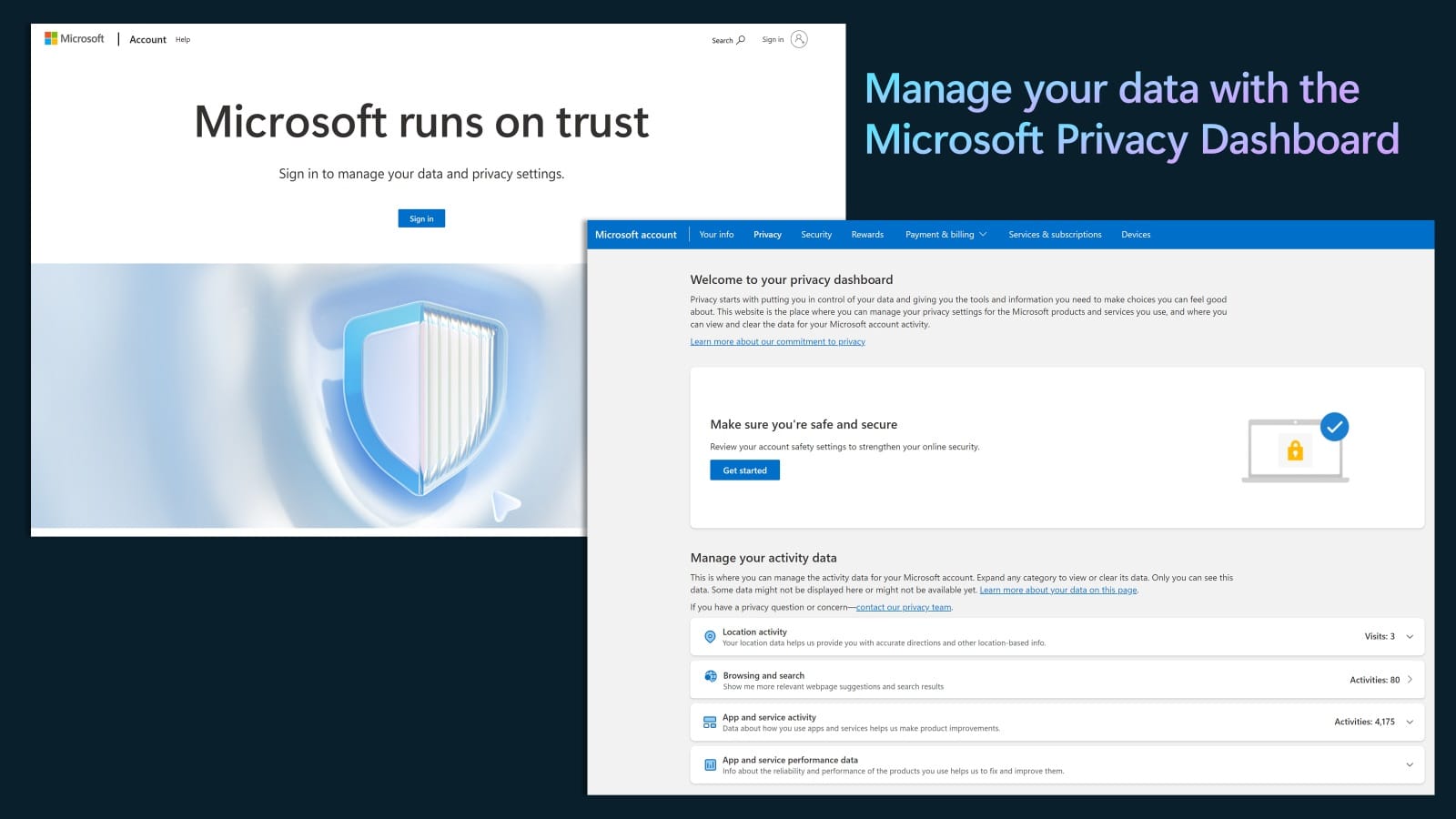

Importantly, the company places great value on users' autonomy over their personal data. For example, the Microsoft Privacy Dashboard gives users control over their data within Microsoft's ecosystem, including the ability to manage or delete personal data and stored conversation history. Microsoft presents this as a way of ensuring that user preferences are respected. The approach not only aligns with ethical standards but also fosters a sense of empowerment among users.

As AI continues to reshape our world, Microsoft's commitment to upholding privacy and fundamental human rights offers an exemplary blueprint for responsibly navigating the challenges and opportunities of this transformative era. By proactively integrating foundational safeguards like security, transparency, and user control into its AI systems, the company is laying vital groundwork to earn public trust.

As an industry leader directing substantial AI resources, Microsoft bears immense responsibility for steering developments toward societally beneficial outcomes centered on human rights. Its comprehensive privacy strategy and ongoing collaboration with global regulators signal a commitment to the ethical advancement of AI, grounded in fundamental values like autonomy, understanding, and choice.

While risks undoubtedly remain, proactive efforts like these, which build in safeguards by default, will hopefully inspire wider adoption of principles that help us realize AI's immense potential while keeping us safe, engendering trust, and protecting our privacy.