Microsoft Research has announced the release of Orca 2, the latest iteration of its small language model series, which aims to expand what smaller AI models can do. Available in 7-billion- and 13-billion-parameter versions, Orca 2 demonstrates reasoning abilities on par with or exceeding those of much larger models, even ones 5-10 times its size.

Orca 2 builds on the original 13B Orca model released earlier this year, which showed improved reasoning by imitating the step-by-step explanations of more powerful AI systems like GPT-4. The key to Orca 2's success lies in its training: it is created by fine-tuning the LLaMA 2 base models on high-quality synthetic data, a method that has proven effective in elevating its reasoning capabilities.

The research team's approach rests on two observations: different tasks benefit from different solution strategies, and the strategy that works best for a large model is not necessarily the one that works best for a smaller one.

As such, Orca 2 has been trained on a vast dataset demonstrating various techniques like step-by-step reasoning, extraction-and-generation, and direct answering. The data was obtained from a more capable "teacher" model, which allowed Orca 2 to learn when to apply different strategies based on the problem at hand. This flexible approach is what enables Orca 2 to match or surpass much bigger models.
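To make the idea concrete, here is a minimal Python sketch of how strategy-specific teacher demonstrations could be turned into fine-tuning records. It is an illustration under stated assumptions, not Microsoft's actual pipeline: the instruction wording, the `TrainingRecord` type, the `build_records` helper, and the `stub_teacher` function are all hypothetical, and the choice to leave the strategy instruction out of the student's prompt (so the student has to infer which strategy fits) is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical instruction wording; the article names the strategies
# but does not give the prompts used to elicit them.
STRATEGY_INSTRUCTIONS = {
    "step_by_step": "Reason through the problem step by step, then state the answer.",
    "extract_and_generate": "Extract the relevant facts from the context, then write the answer.",
    "direct_answer": "Answer directly, without explanation.",
}


@dataclass
class TrainingRecord:
    prompt: str    # what the student model sees
    response: str  # the teacher demonstration the student learns to imitate
    strategy: str  # kept for bookkeeping only; not shown to the student


def build_records(
    tasks: List[Tuple[str, str]],
    teacher: Callable[[str, str], str],
) -> List[TrainingRecord]:
    """Ask the teacher to solve each task with its assigned strategy."""
    records = []
    for question, strategy in tasks:
        instruction = STRATEGY_INSTRUCTIONS[strategy]
        response = teacher(instruction, question)
        # Assumption of this sketch: the student prompt omits the strategy
        # instruction, so the student must learn which strategy fits the task.
        records.append(TrainingRecord(prompt=question, response=response, strategy=strategy))
    return records


def stub_teacher(instruction: str, question: str) -> str:
    """Stand-in for a call to a more capable model; returns a placeholder."""
    return f"[{instruction}] answer to: {question}"


if __name__ == "__main__":
    demo_tasks = [
        ("What is 17 * 24?", "step_by_step"),
        ("What is the capital of France?", "direct_answer"),
    ]
    for record in build_records(demo_tasks, stub_teacher):
        print(record.strategy, "|", record.prompt)
```

In a real setup, `stub_teacher` would be replaced by a call to the teacher model, and the resulting prompt and response pairs would feed a standard supervised fine-tuning loop.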

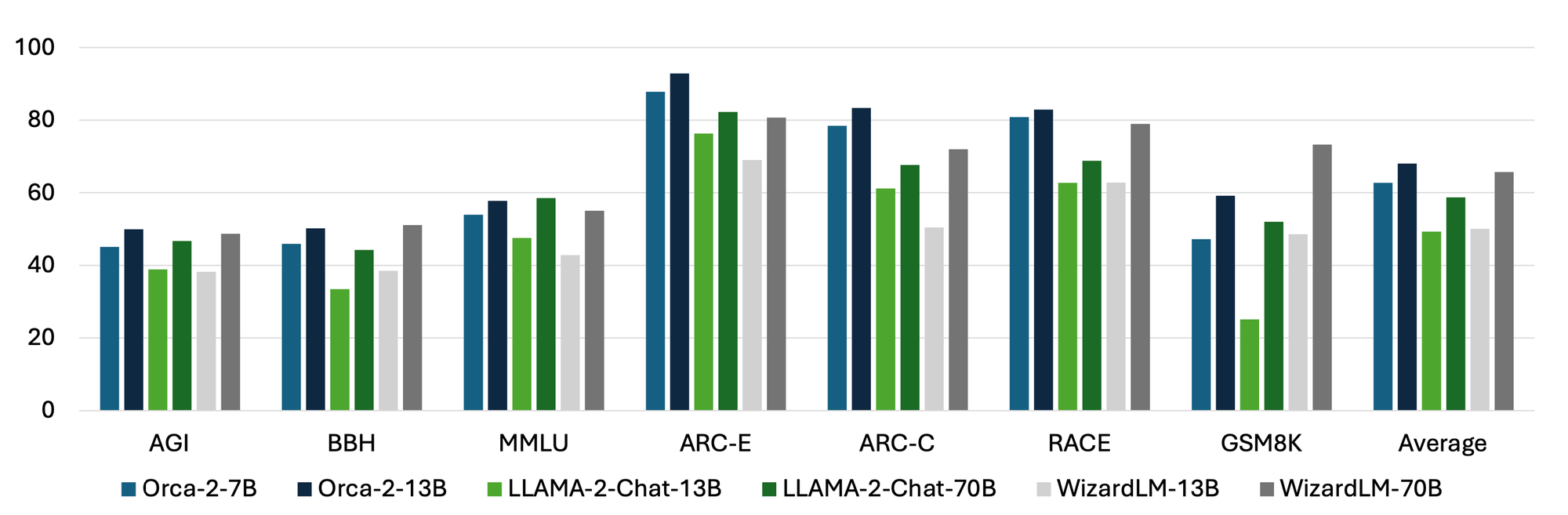

Comprehensive benchmarks show Orca 2 significantly outperforming other models of equivalent size on metrics related to language understanding, common sense reasoning, multi-step math problems, reading comprehension, summarization, and more. For instance, on zero-shot reasoning tasks, Orca 2-13B achieves over 25% higher accuracy than comparable 13B models and is on par with a 70B model.

While Orca 2 exhibits constraints inherited from its base LLaMA 2 model and shares limitations common to LLMs, its strong zero-shot reasoning highlights the potential for advancing smaller neural networks. Microsoft believes specialized training approaches like the one used for Orca 2 can unlock new use cases that balance efficiency and capability in deployment.

Orca 2 has not undergone safety-focused RLHF tuning. However, Microsoft suggests tailored synthetic data could similarly teach safety and mitigation behaviors. They have open-sourced Orca 2 to spur further research into developing and aligning smaller but capable language models.