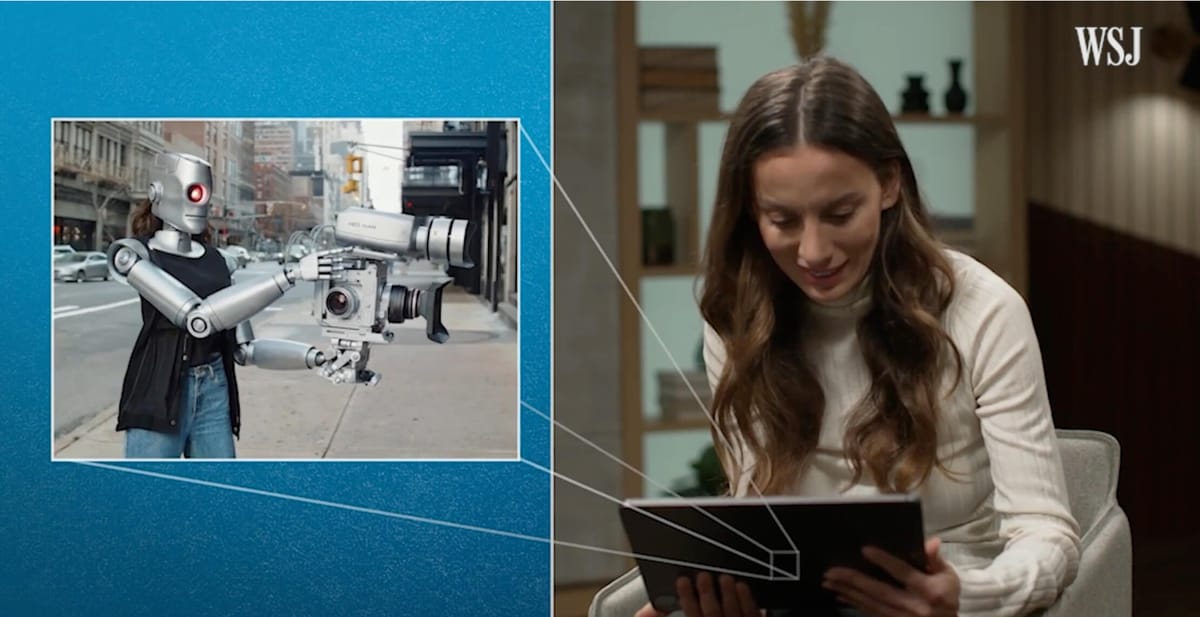

OpenAI's new text-to-video AI model, Sora, is set to revolutionize the video production landscape. In an exclusive interview with the Wall Street Journal, OpenAI's CTO Mira Murati provided insights into the capabilities and potential impact of this groundbreaking technology.

Sora's ability to generate highly realistic and detailed video clips from simple text prompts has left many in awe. From a bull strolling through a china shop to a mermaid reviewing a smartphone with her crab assistant, the AI-generated videos showcase an unprecedented level of sophistication. Murati explained that a 20-second, 720p-resolution clip takes only a few minutes to generate. Although the tool cannot currently generate sound, OpenAI plans to add audio eventually.

The technology behind Sora involves diffusion transformer models, where the AI analyzes vast amounts of video data to identify objects and actions. Specifically, OpenAI trained text-conditional diffusion models jointly on videos and images of variable durations, resolutions and aspect ratios. They then leverage a transformer architecture that operates on spacetime patches of video and image latent codes.
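To make the idea of spacetime patches more concrete, here is a minimal sketch of how a clip might be cut into fixed-size blocks spanning time, height and width, so that clips of different durations and aspect ratios become token sequences of different lengths. This is an illustration only and not OpenAI's implementation: the function names `patchify` and `make_clip`, the patch size of 2x2x2, and the use of plain integers in place of latent codes are all assumptions made for the example.

```python
def patchify(video, pt, ph, pw):
    """Split a clip indexed as video[t][y][x] into flattened spacetime patches.

    pt, ph, pw are the patch sizes along time, height and width
    (hypothetical values chosen by the caller, not Sora's real settings).
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    if T % pt or H % ph or W % pw:
        raise ValueError("clip dimensions must be divisible by the patch size")

    patches = []
    for t0 in range(0, T, pt):
        for y0 in range(0, H, ph):
            for x0 in range(0, W, pw):
                # One patch covers pt frames and a ph-by-pw window in each frame.
                patch = [
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ]
                patches.append(patch)
    return patches


def make_clip(T, H, W):
    """Build a toy clip whose values encode their own (t, y, x) position."""
    return [[[t * H * W + y * W + x for x in range(W)] for y in range(H)]
            for t in range(T)]


# A wide 4-frame clip and a tall 2-frame clip share the same patch size
# but produce token sequences of different lengths.
wide = patchify(make_clip(4, 4, 8), 2, 2, 2)
tall = patchify(make_clip(2, 6, 2), 2, 2, 2)
print(len(wide), len(tall))
```

Because every patch has the same size regardless of the clip's shape, a transformer can treat each one as a token and simply process a longer or shorter sequence, which is one plausible reading of how a single model can handle variable durations, resolutions and aspect ratios.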

The result is that, when given a text prompt, the model sketches out the entire scene and fills in each frame. Its output surpasses what similar tools from Runway, Pika and Stability AI have shown so far.

Despite the impressive results, Sora's videos still exhibit noticeable AI tells, such as hand motion inaccuracies and continuity issues. However, as the technology continues to improve, distinguishing between real and AI-generated videos may become increasingly challenging. OpenAI plans to address this by incorporating watermarks and metadata to denote the origins of the videos.

The potential impact of Sora on the video production industry has sparked both excitement and concern. While some, like filmmaker Tyler Perry, see the technology as a cost-saving opportunity, others worry about the future of human jobs in the industry. Jeanette Moreno King, president of the Animation Guild, acknowledged that while humans will still be needed for artistic decisions, the future remains uncertain.

While OpenAI has faced copyright infringement lawsuits alleging the scraping of content without permission, Murati confirmed that the training data for Sora includes publicly available and licensed data, including content from Shutterstock.

OpenAI is taking a cautious approach to the release of Sora, focusing on red-teaming the tool to identify and address vulnerabilities, biases, and harmful results. The company is also engaging with artists and industry professionals to establish guidelines and limitations that balance creativity and responsibility.

As Sora continues to evolve and improve, it is clear that the technology has the potential to transform the way we create and consume video content. While the full extent of its impact remains to be seen, one thing is certain: the future of video production is about to undergo a seismic shift.