Earlier this year, Amazon Web Services (AWS) and NVIDIA announced a multi-part collaboration focused on building next-generation infrastructure for training large language models (LLMs) and developing generative AI applications. That collaboration has now borne fruit with the general availability of Amazon EC2 P5 instances.

AWS customers can now use NVIDIA H100 Tensor Core GPUs through the newly launched Amazon EC2 P5 instances, gaining industry-leading performance for the latest generative AI models and other demanding workloads. With architectural innovations such as the Transformer Engine and fourth-generation Tensor Cores, the H100 is built to accelerate both AI training and inference.

The growth of machine learning models to trillions of parameters has stretched training times to multiple months, a trend echoed among high-performance computing (HPC) customers working with higher-fidelity data and exabyte-scale datasets. P5 instances are designed to address these needs by sharply reducing training times and improving scalability for AI/ML and HPC workloads.

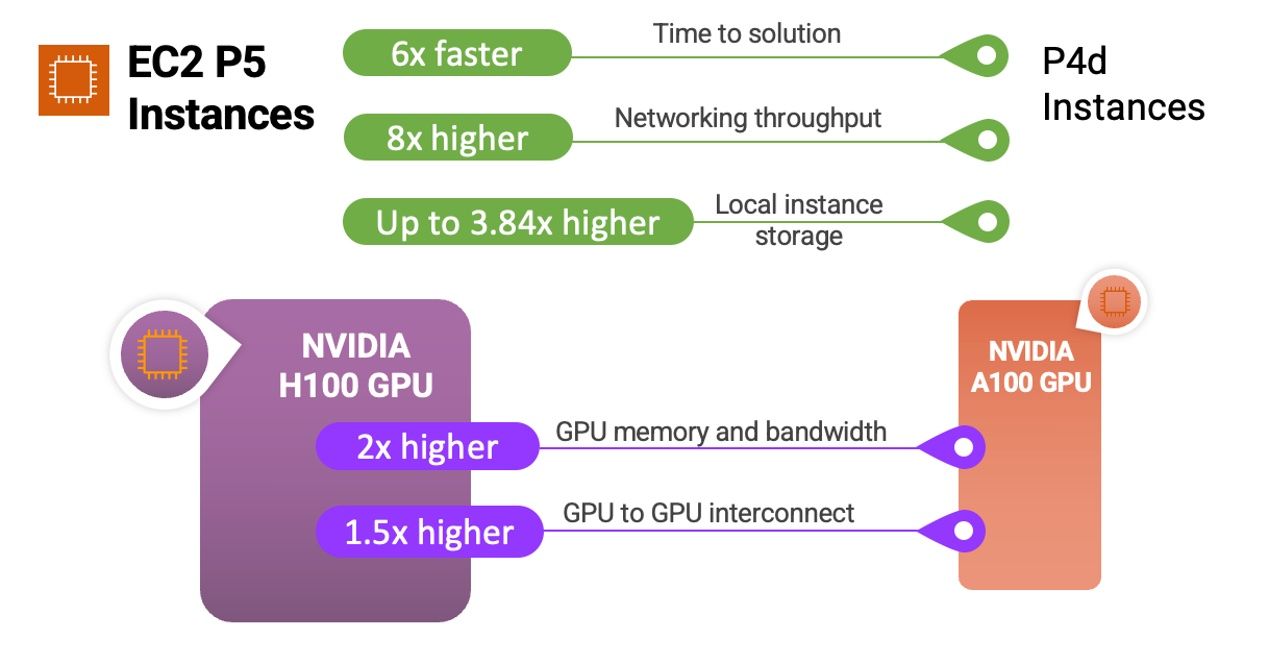

The new P5 instances, each with eight NVIDIA H100 Tensor Core GPUs, 3rd Gen AMD EPYC processors, 2 TB of system memory, and 30 TB of local NVMe storage, are expected to deliver up to six times faster training than previous-generation GPU-based instances. AWS says this performance gain can reduce training costs by up to 40 percent.

The timing matters: AI is moving into mainstream adoption after what has been called its "iPhone moment" this year. With widely accessible LLM-based tools such as ChatGPT, developers are rapidly uncovering new applications across industries, and the NVIDIA H100 gives these emerging use cases the accelerated performance needed for real-world deployment.

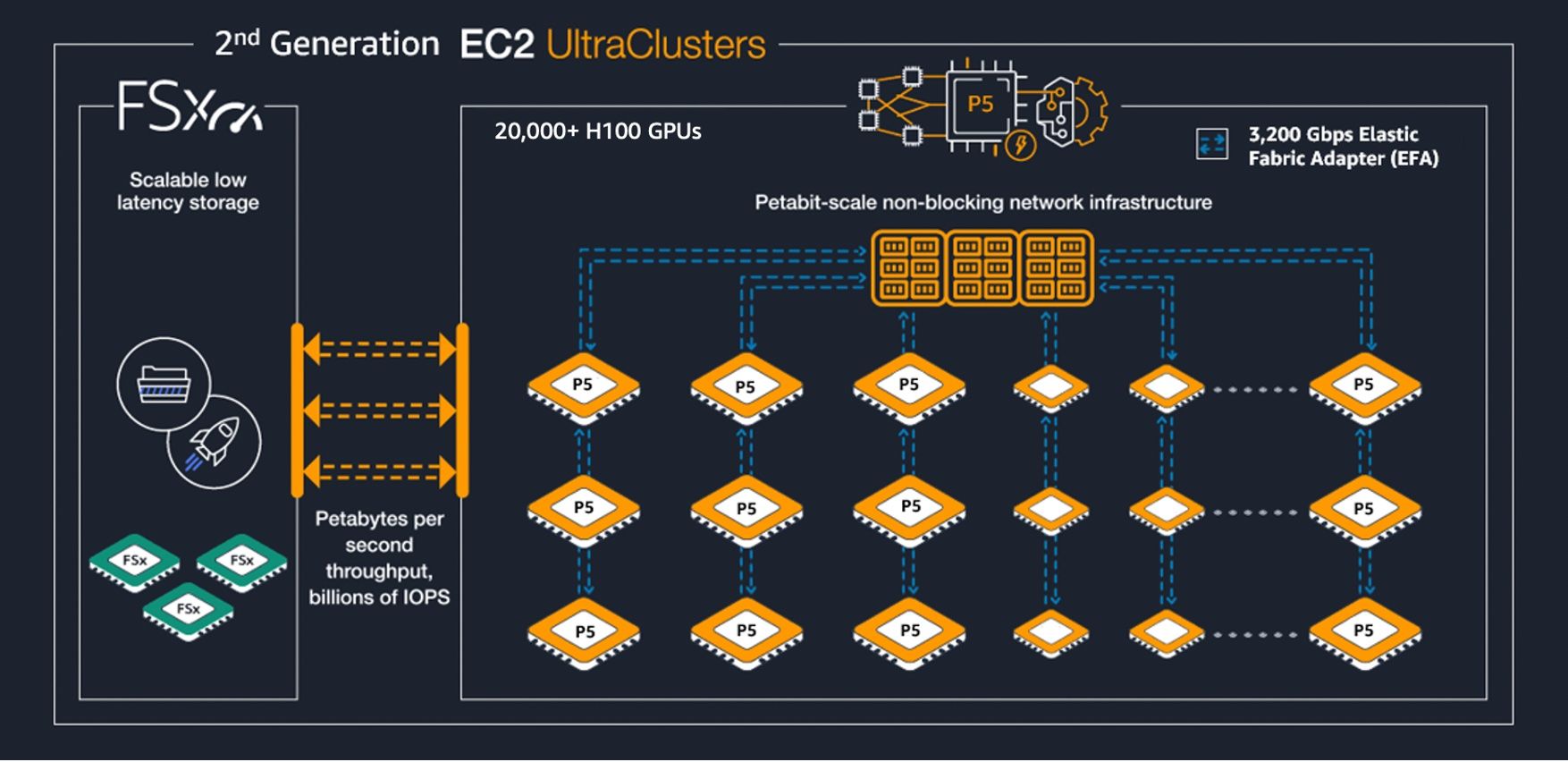

P5 instances can scale AI workloads flexibly through Amazon EC2 UltraClusters, which combine high-performance compute, networking, and storage. Applications that use the NVIDIA Collective Communications Library (NCCL) can run across up to 20,000 H100 GPUs at once. Together, H100 GPUs and AWS's petabit-scale network make it possible to run complex AI training and HPC applications at very large scale.
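NCCL's role in this setup is to run collective operations such as all-reduce, which sums gradients across every GPU in a training job so that all of them end each step with the same values. NCCL chooses among several algorithms (ring and tree among them); the sketch below simulates only a ring all-reduce in a single Python process, with plain lists standing in for GPU buffers. It is a teaching model under those assumptions, not NCCL's implementation or API, and the function name `ring_all_reduce` is hypothetical.

```python
from typing import List


def ring_all_reduce(buffers: List[List[float]]) -> List[List[float]]:
    """Sum-reduce equal-length buffers across n simulated ranks arranged in a ring.

    Each buffer is split into n chunks. Phase 1 (reduce-scatter) takes n - 1
    steps; afterwards rank r holds the fully summed chunk (r + 1) % n.
    Phase 2 (all-gather) takes another n - 1 steps to pass those chunks around.
    """
    n = len(buffers)
    if n == 0:
        return []
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all ranks must contribute buffers of equal length")
    data = [list(b) for b in buffers]  # copy so the caller's lists are untouched
    # Chunk c covers indices bounds[c] .. bounds[c + 1] - 1
    bounds = [length * c // n for c in range(n + 1)]

    def chunk(rank: int, c: int) -> List[float]:
        return data[rank][bounds[c]:bounds[c + 1]]  # slicing returns a copy

    # Phase 1: reduce-scatter. Collect every send first, as if all ranks
    # transmit simultaneously, then apply the receives.
    for step in range(n - 1):
        sends = [(rank, (rank - step) % n) for rank in range(n)]
        payloads = [chunk(rank, c) for rank, c in sends]
        for (rank, c), payload in zip(sends, payloads):
            dst = (rank + 1) % n  # right-hand neighbour in the ring
            for i, value in enumerate(payload):
                data[dst][bounds[c] + i] += value

    # Phase 2: all-gather. Each rank forwards a fully reduced chunk,
    # and the receiver overwrites its stale copy.
    for step in range(n - 1):
        sends = [(rank, (rank + 1 - step) % n) for rank in range(n)]
        payloads = [chunk(rank, c) for rank, c in sends]
        for (rank, c), payload in zip(sends, payloads):
            dst = (rank + 1) % n
            data[dst][bounds[c]:bounds[c + 1]] = payload
    return data


if __name__ == "__main__":
    ranks = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
    print(ring_all_reduce(ranks))  # [[5.0, 7.0, 9.0], [5.0, 7.0, 9.0]]
```

In each of the 2(n - 1) steps a rank sends only about 1/n of its buffer, so the total data each GPU transmits stays close to twice the buffer size no matter how many GPUs join the ring. That property is one reason ring-style collectives scale to large clusters, although at tens of thousands of GPUs latency and network topology, which this sketch ignores, also matter.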

Customers are enthusiastic about the potential of this offering:

- Anthropic expects major price-performance gains for training next-generation LLMs.

- Cohere sees the H100 enabling faster business growth through the deployment of language AI.

- Hugging Face anticipates accelerated delivery of foundational AI models.

- Pinterest aims to bring new empathetic AI experiences to users.

Amazon EC2 P5 instances are now available in the US East (N. Virginia) and US West (Oregon) Regions. As demand for advanced AI infrastructure grows, this offering gives developers and researchers the tools to explore previously unreachable problem spaces, iterate on solutions faster, and bring their innovations to market sooner.
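For readers who want to try P5, the sketch below assembles keyword arguments for the EC2 `RunInstances` API via boto3, using the `p5.48xlarge` instance type and the Region codes for the two launch Regions (`us-east-1` for N. Virginia, `us-west-2` for Oregon). Assumptions, all loudly labeled: the helper names `build_p5_run_instances_request` and `launch` are hypothetical; the AMI, subnet, security group, and placement group values are placeholders you must replace; the placement group is a cluster placement group created beforehand; and only one EFA interface is attached, whereas a production P5 setup would typically attach more to reach full network bandwidth. Check current EC2 documentation for quotas, capacity, and Region availability before launching.

```python
from typing import Any, Dict

# Region codes for the two launch Regions named in the announcement.
P5_LAUNCH_REGIONS = {
    "us-east-1": "US East (N. Virginia)",
    "us-west-2": "US West (Oregon)",
}
P5_INSTANCE_TYPE = "p5.48xlarge"


def build_p5_run_instances_request(
    image_id: str,
    subnet_id: str,
    security_group_id: str,
    placement_group: str,
    count: int = 1,
) -> Dict[str, Any]:
    """Return keyword arguments for EC2 RunInstances (hypothetical helper)."""
    if count < 1:
        raise ValueError("count must be at least 1")
    return {
        "ImageId": image_id,
        "InstanceType": P5_INSTANCE_TYPE,
        # MinCount == MaxCount: launch exactly `count` instances or none
        "MinCount": count,
        "MaxCount": count,
        # Assumes an existing cluster placement group, created e.g. with
        # ec2.create_placement_group(GroupName=..., Strategy="cluster")
        "Placement": {"GroupName": placement_group},
        # Simplification: a single EFA interface on the primary network card
        "NetworkInterfaces": [
            {
                "DeviceIndex": 0,
                "NetworkCardIndex": 0,
                "InterfaceType": "efa",
                "SubnetId": subnet_id,
                "Groups": [security_group_id],
            }
        ],
    }


def launch(region: str, request: Dict[str, Any]) -> Dict[str, Any]:
    """Send the request; requires boto3 and AWS credentials (hypothetical helper)."""
    import boto3  # imported here so the builder above works without boto3 installed

    ec2 = boto3.client("ec2", region_name=region)
    return ec2.run_instances(**request)


if __name__ == "__main__":
    # Placeholder IDs: replace with real values from your own account
    req = build_p5_run_instances_request(
        image_id="ami-0123456789abcdef0",
        subnet_id="subnet-0123456789abcdef0",
        security_group_id="sg-0123456789abcdef0",
        placement_group="my-p5-cluster",
        count=2,
    )
    print(req["InstanceType"], req["MinCount"])
    # launch("us-east-1", req)  # uncomment to actually launch (incurs cost)
```

Keeping the request builder separate from the network call makes it easy to inspect or unit-test the request without AWS credentials.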